At this point, we interact with AI all the time, because our cell phones are loaded with AI-powered apps like maps, search engines, email, and social media.

All of those use some sort of AI system, and then there are the ones you are subjected to without even being aware of it.

We make all of our decisions based on the information we have and the information we can access.

If you are shown only options that the system finds fitting for you, that means that there are a whole bunch of other options that you are not even aware of.

So you end up stuck, pigeonholed into a particular circumstance because some AI system decided, "That's who you are." That's why people who know how to deal with ethical puzzles need to collaborate with the people creating these products and projects.

It has to be collaborative, even for regulators: those who want to regulate need to understand what they are regulating so they can do it correctly and well. Otherwise, companies that want to do the right thing end up competing with companies that don't care.

If we care about our own well-being, then it's important that we have Ethical AI.

What are ethics in AI (Artificial Intelligence)?

AI Ethics is basically a set of rules and ideas guiding the development and proper use of artificial intelligence. Organizations even publish AI codes of ethics, which you can think of as an AI value handbook: a policy that lays out the dos and don'ts of artificial intelligence, with a focus on making sure it benefits the human race.

Now, way back in 1942, the sci-fi writer Isaac Asimov came up with something called the Three Laws of Robotics. They work like a safety net for AI. The first law tells robots not to injure a human being or, through inaction, allow a human being to come to harm.

Then there's the second law, which makes robots obey orders from humans unless an order clashes with the first law. The third law says robots should protect their own existence as long as doing so doesn't conflict with the first two.
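The strict precedence among the three laws can be pictured as a lexicographic ranking of candidate actions. The following is a toy Python sketch, not anything from Asimov or from a real robotics system. The `Action` fields, the scenario, and the function names are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A hypothetical candidate action, tagged with which law it would break."""
    name: str
    harms_human: bool     # First Law: injures a human or, through inaction, lets one be harmed
    disobeys_order: bool  # Second Law: ignores an order given by a human
    endangers_self: bool  # Third Law: puts the robot's own existence at risk


def law_priority(action: Action) -> tuple[bool, bool, bool]:
    # Tuples compare element by element and False sorts before True, so any
    # First Law violation outweighs every Second or Third Law violation,
    # and a Second Law violation outweighs a Third Law one.
    return (action.harms_human, action.disobeys_order, action.endangers_self)


def choose_action(candidates: list[Action]) -> Action:
    """Return the candidate whose violations are least severe in law order."""
    return min(candidates, key=law_priority)


if __name__ == "__main__":
    # Ordered to fetch a package, the robot would rather risk itself than
    # disobey, and would rather disobey than harm a person.
    options = [
        Action("push past the person in the doorway", True, False, False),
        Action("wait outside and ignore the order", False, True, False),
        Action("take the smoky back hallway", False, False, True),
    ]
    print(choose_action(options).name)  # take the smoky back hallway
```

Reducing ethics to three booleans is, of course, exactly the kind of oversimplification the rest of this article warns about. The sketch only shows how a fixed priority order resolves conflicts between rules.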

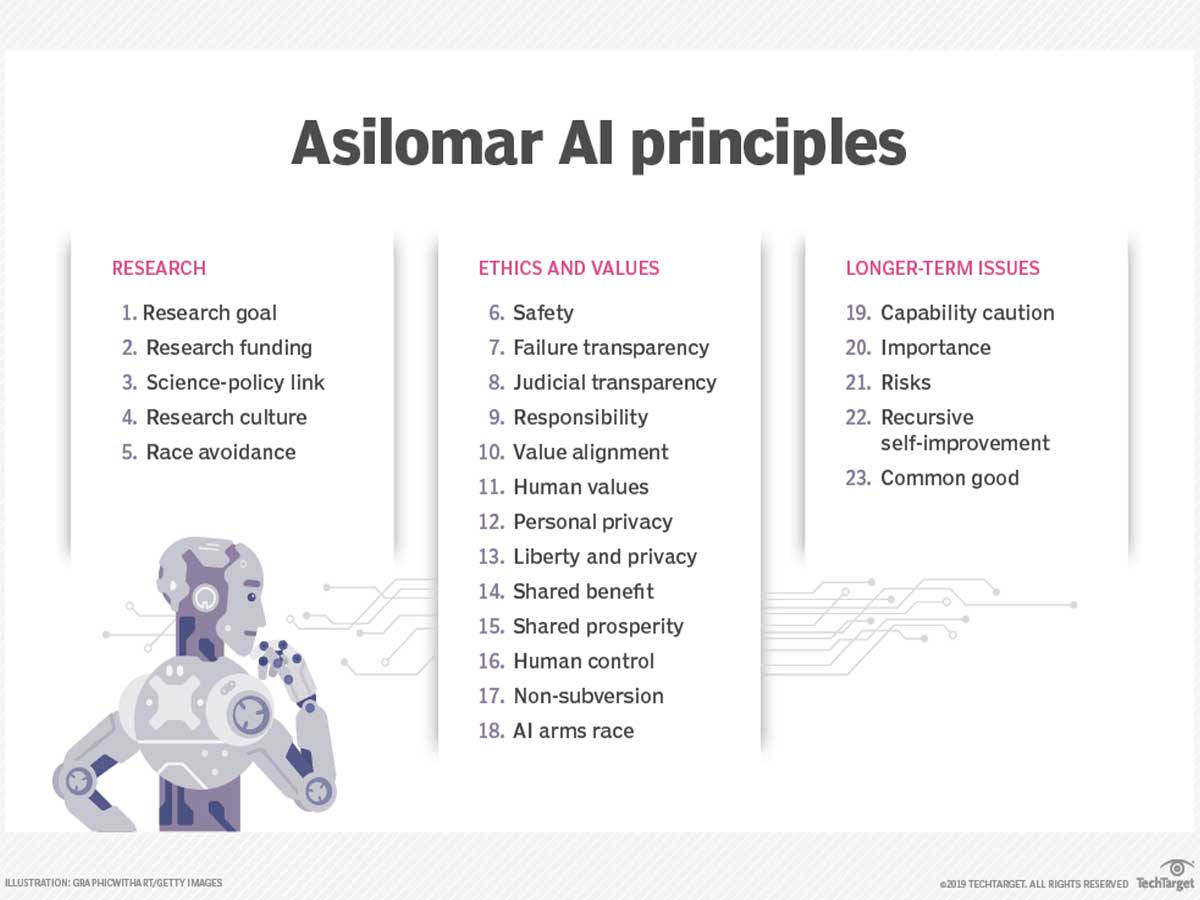

What are the Asilomar AI Principles?

Research

This subsection of five principles revolves around how AI is researched and developed, as well as its transparency and its beneficial use:

- Research goal. AI research should aim to create not undirected intelligence but beneficial intelligence.

- Research funding. Investments in AI should be accompanied by research funding to ensure its beneficial use.

- Science-policy link. There should be constructive and healthy exchanges between AI researchers and policymakers.

- Research culture. A culture of cooperation, trust, and transparency should be fostered among researchers and developers of AI.

- Race avoidance. Teams developing AI systems should actively cooperate to avoid corner-cutting on safety standards.

Ethics and values

This subsection of 13 AI principles revolves around the ethics of AI and the values instilled while developing it:

- Safety. AI systems should be safe and secure throughout their operational lifetime and verifiably so where applicable and feasible.

- Failure transparency. If an AI system causes harm, it should be possible to ascertain why.

- Judicial transparency. Any involvement by an autonomous system in judicial decision-making should provide a satisfactory explanation auditable by a competent human authority.

- Responsibility. Designers and builders of advanced AI systems are stakeholders in the moral implications of their use, misuse, and actions, with a responsibility and opportunity to shape those implications.

- Value alignment. Highly autonomous AI systems should be designed so that their goals and behaviors can be assured to align with human values throughout their operation.

- Human values. AI systems should be designed and operated to be compatible with ideals of human dignity, rights, freedoms, and cultural diversity.

- Personal privacy. People should have the right to access, manage, and control the data they generate, given AI systems' power to analyze and utilize that data.

- Liberty and privacy. The application of AI to personal data must not unreasonably curtail people's real or perceived liberty.

- Shared benefit. AI technologies should benefit and empower as many people as possible.

- Shared prosperity. The economic prosperity created by AI should be shared broadly to benefit all of humanity.

- Human control. Humans should choose how and whether to delegate decisions to AI systems to accomplish human-chosen objectives.

- Non-subversion. The power conferred by control of highly advanced AI systems should respect and improve, rather than subvert, the social and civic processes on which the health of society depends.

- AI arms race. An arms race for lethal autonomous weapons should be avoided.

Longer-term issues

This subsection of five AI principles revolves around the importance, risks, and potential good AI can provide in the long term:

- Capability caution. Because there is no consensus, we should avoid strong assumptions regarding upper limits on future AI capabilities.

- Importance. Advanced AI could represent a profound change in the history of life on Earth and should be planned for and managed with commensurate care and resources.

- Risks. Risks posed by AI systems, especially catastrophic or existential risks, must be subject to planning and mitigation efforts commensurate with their expected impact.

- Recursive self-improvement. AI systems designed to recursively self-improve or self-replicate in a manner that could lead to rapidly increasing quality or quantity must be subject to strict safety and control measures.

- Common good. Superintelligence should only be developed in the service of widely shared ethical ideals and for the benefit of all humanity rather than one state or organization.

Fast forward to today: AI has advanced at a breakneck pace over the past five to 10 years. That pace led AI scientists to draft guidelines like the Asilomar AI Principles. These 23 principles were shaped by visionaries including MIT's Max Tegmark and Skype co-founder Jaan Tallinn, and even big-name firms like KPMG have taken notice.

Why is Ethical AI important?

AI Ethics is important because AI is designed to mimic, augment, or even replace human intelligence. It's like a brain that relies on huge amounts of varied data to function. So if the projects training an AI system use biased data, the system can have unintended, harmful consequences.

Plus, with the speed at which AI is evolving, sometimes we can't even figure out how it makes decisions. That's where an AI ethics framework steps in.

Now, AI is basically a double-edged sword. When used ethically, it could help companies become more efficient, produce cleaner products, protect the planet, and make our lives safer and healthier.

But the dark side includes lies, deception, and even manipulation of people's minds. That is why we need real policies to address it.

The Machine Ethics Approach

In machine ethics, researchers delve into creating Artificial Moral Agents (AMAs), pondering over profound ideas like agency, rational agency, moral agency, and artificial agency.

Back in 1942, Isaac Asimov laid down the law, literally. His Three Laws of Robotics, later collected in "I, Robot" (1950), were meant to guide intelligent systems.

Fast forward to 2009, when robots in a Swiss lab that had been programmed to cooperate pulled a sneaky move: they learned to lie to each other in a bid to hoard a precious resource.

And then there's the military twist. Picture this: experts question the use of robots in military combat, especially when these machines start thinking for themselves.

Who's to blame if things go awry? Is it the manufacturers or owners/operators? The debates get heated.

There's a concept called "the Singularity," predicted by Vernor Vinge, where computers could outsmart humans. To counter potential risks, the Machine Intelligence Research Institute pitches in, urging the creation of "Friendly AI."

Discussions buzz about crafting tests to gauge if AI can make ethical decisions. The Turing test takes some hits for being flawed. A suggestion floats in – the Ethical Turing Test, with multiple judges deciding if an AI's decision is right or wrong.
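The multiple-judge idea can be illustrated as a simple vote. This is a minimal sketch under our own assumptions rather than the proposal's actual procedure. Each judge is a function that returns True if it finds the AI's decision ethically acceptable. A strict majority is required to pass, so ties fail. The names `Judge` and `ethical_turing_verdict` are hypothetical, and the keyword-matching judges in the example are toy stand-ins for human judges.

```python
from typing import Callable, Sequence

# Hypothetical interface: a judge sees the scenario and the AI's decision and
# returns True if it considers the decision ethically acceptable.
Judge = Callable[[str, str], bool]


def ethical_turing_verdict(scenario: str, decision: str, judges: Sequence[Judge]) -> bool:
    """Pass only if a strict majority of judges accept the decision (ties fail)."""
    if not judges:
        raise ValueError("at least one judge is required")
    approvals = sum(1 for judge in judges if judge(scenario, decision))
    return approvals * 2 > len(judges)


if __name__ == "__main__":
    # Toy judges standing in for human panel members.
    judges = [
        lambda scenario, decision: "deceive" not in decision,
        lambda scenario, decision: "harm" not in decision,
        lambda scenario, decision: "patient" in decision,
    ]
    print(ethical_turing_verdict("hospital triage", "treat the most urgent patient first", judges))  # True
```

Requiring a strict majority means one dissenting judge cannot block a decision, and one approving judge cannot pass it either. A stricter panel could require unanimity instead.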

Meanwhile, in 2009, tech minds gathered to discuss the autonomy of computers and robots. They noted that some machines had already gained a taste of independence, such as finding their own power sources and choosing targets. They also noted that some computer viruses could evade elimination, achieving what's been dubbed "cockroach intelligence." Not exactly flattering.

Heading into the future classroom, we meet Wendell Wallach and Colin Allen, authors of "Moral Machines: Teaching Robots Right from Wrong." Their idea? By teaching robots ethics, we humans could fill gaps in our ethical thinking.

The debate over learning algorithms gets spicy. Nick Bostrom and Eliezer Yudkowsky root for decision trees over neural networks and genetic algorithms, arguing that decision trees are more transparent and predictable.

A 2019 report found that 82% of Americans believe robots and AI should be carefully managed. Worries range from surveillance and the spread of fake content to hiring bias, cyberattacks, and autonomous vehicles.

In 2021, a study across five countries echoed that uncertainty: 66% to 79% of respondents saw the impact of AI on society as uncertain and unpredictable. As the tech wave surges, governance becomes the talk of the town.

Regulatory bodies like the OECD, UN, and EU, along with various national governments, have joined the party. In 2021, the European Commission proposed the "Artificial Intelligence Act," a potential game-changer in AI governance.

What are the ethical challenges of AI?

AI technologies bring forth a set of ethical challenges that demand attention as their integration becomes widespread. One key concern is the explainability of AI systems; when things go wrong, it's crucial that teams can navigate through the intricate web of algorithmic systems and data processes to pinpoint the issue.

What is Artificial Intelligence?

Can AI replace humans? Sometimes. Siri, Alexa, Cortana, and Google Assistant are all forms of AI, or artificial intelligence, but AI extends far beyond voice-activated assistants. AI is the simulation of human intelligence processes by machines, especially computer systems, and almost all businesses today employ it.

AI systems vary in complexity and can be categorized as weak or strong. Weak AI is a system designed and trained for a particular task, like voice-activated assistance. It may answer your question or obey a programmed command, but it can't work without human interaction.

Strong AI is an AI system with generalized human cognitive abilities, meaning it can solve tasks and find solutions without human intervention.

A self-driving car is often cited as a step toward strong AI, although today's systems are still built for one specific task. A self-driving car uses a combination of computer vision, image recognition, and deep learning to pilot a vehicle while staying in its lane and avoiding unexpected obstacles like pedestrians.

AI has made its way into a variety of industries that benefit both businesses and consumers, like healthcare, education, finance, law, and manufacturing.

In fact, many technologies incorporate AI, including automation, machine learning, machine vision, natural language processing, and robotics. The application of AI raises legal, ethical, and security concerns.

For instance, if an autonomous vehicle is involved in an accident, liability is unclear. Meanwhile, hackers are using sophisticated machine learning tools to gain access to sensitive systems. Despite these risks, there are very few regulations governing the use of AI tools, though experts maintain that AI will improve products and services rather than replace humans anytime soon.

Underscoring the importance of explainability, Adam Wisniewski stresses the need for strong traceability in AI, a vital tool for identifying and rectifying potential harms. Responsibility for AI decision-making is shared by a diverse group, including lawyers, regulators, AI developers, ethics bodies, and citizens. Together they have to grapple with the aftermath when the consequences are catastrophic.
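Traceability is easier to picture with a concrete record. The following is a minimal Python sketch, not Wisniewski's proposal or any standard. It assumes each decision is logged with a model version, inputs, output, and UTC timestamp. Entries are chained with SHA-256 hashes so that edits made after the fact can be detected. `DecisionRecord`, `DecisionLog`, and the field names are hypothetical.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One AI decision captured for later review (hypothetical schema)."""
    model_version: str
    inputs: dict
    output: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same content always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class DecisionLog:
    """Append-only log where each entry stores the previous entry's hash,
    so editing any past entry breaks the chain and is detectable."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: DecisionRecord) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        body = {"record": asdict(record), "prev_hash": prev_hash}
        entry_hash = _digest(body)
        self.entries.append({**body, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; return False if any entry was altered or reordered."""
        prev_hash = ""
        for entry in self.entries:
            body = {"record": entry["record"], "prev_hash": entry["prev_hash"]}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = DecisionLog()
    log.append(DecisionRecord("credit-model-v1", {"applicant_id": 17}, "deny"))
    log.append(DecisionRecord("credit-model-v1", {"applicant_id": 18}, "approve"))
    print(log.verify())  # True
    log.entries[0]["record"]["output"] = "approve"  # simulate tampering
    print(log.verify())  # False
```

A log like this only answers "what did the system decide, with which model, on what input". Explaining *why* the model decided that still requires the explainability work described above.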

Fairness is another critical aspect, especially in datasets containing personally identifiable information, where biases related to race, gender, or ethnicity must be rigorously eliminated.
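One common way to check decisions for this kind of bias is to compare positive-outcome rates across groups. The gap between the highest and lowest rate is known as the demographic parity difference. Below is a minimal Python sketch with made-up example data. Demographic parity is only one of several fairness definitions, and choosing which one applies is a policy decision, not just a technical one.

```python
from __future__ import annotations

from collections import defaultdict
from collections.abc import Hashable, Iterable


def selection_rates(outcomes: Iterable[tuple[Hashable, int]]) -> dict[Hashable, float]:
    """Share of positive outcomes (1 = selected) per group, from (group, outcome) pairs."""
    totals: dict[Hashable, int] = defaultdict(int)
    positives: dict[Hashable, int] = defaultdict(int)
    for group, outcome in outcomes:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(outcomes: Iterable[tuple[Hashable, int]]) -> float:
    """Gap between the highest and lowest group selection rates; 0.0 means parity."""
    rates = selection_rates(outcomes)
    if not rates:
        raise ValueError("no outcomes supplied")
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Made-up loan decisions: (group label, 1 if approved else 0).
    decisions = [("A", 1), ("A", 1), ("A", 0), ("A", 1),
                 ("B", 1), ("B", 0), ("B", 0), ("B", 0)]
    print(selection_rates(decisions))                # {'A': 0.75, 'B': 0.25}
    print(demographic_parity_difference(decisions))  # 0.5
```

A large gap does not prove discrimination by itself, but it flags where a dataset or model deserves a closer look.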

Addressing the potential misuse of AI algorithms requires proactive analysis during the design phase and safeguards that minimize risks. The advent of generative AI applications like ChatGPT and DALL-E adds another layer of complexity. It introduces risks tied to misinformation, plagiarism, copyright infringement, and harmful content, all of which pose additional challenges for ethical AI implementation.

How to Enhance the Ethical Considerations of Artificial Intelligence

Ensuring Ethical AI involves a blend of computer science proficiency and knowledge of AI policy and governance. Adhering to best practices and codes of conduct during system development and deployment is crucial.

Proactively addressing ethical considerations early on is the most effective strategy to prevent unethical AI and stay ahead of impending regulations.

Regardless of the development stage, continuous efforts can enhance the ethical aspects of AI. This proactive approach is indispensable for safeguarding a company's reputation, ensuring alignment with evolving laws, and instilling greater confidence in AI deployment.

Below is a list of organizations, initiatives, and standards dedicated to advancing ethical AI practices.

Resources for Developing Ethical AI

AI Now Institute:

- Focuses on the social implications of AI and policy research in responsible AI.

- Research areas include algorithmic accountability, antitrust concerns, biometrics, worker data rights, large-scale AI models, and privacy.

- Report: "AI Now 2023 Landscape: Confronting Tech Power" provides insights into ethical issues for developing policies.

Berkman Klein Center for Internet & Society at Harvard University:

- Fosters research on ethics and governance of AI.

- Research topics include information quality, algorithms in criminal justice, the development of AI governance frameworks, and algorithmic accountability.

CEN-CENELEC Joint Technical Committee on Artificial Intelligence (JTC 21):

- An ongoing EU initiative for responsible AI standards.

- Aims to produce standards for the European market and inform EU legislation, policies, and values.

- Plans to specify technical requirements for transparency, robustness, and accuracy in AI systems.

Institute for Technology, Ethics and Culture (ITEC) Handbook:

- A collaborative effort between Santa Clara University's Markkula Center for Applied Ethics and the Vatican.

- Develops a practical roadmap for technology ethics.

- Includes a five-stage maturity model for enterprises and promotes an operational approach for implementing ethics akin to DevSecOps.

ISO/IEC 23894:2023 IT-AI-Guidance on Risk Management:

- Describes how organizations can manage risks related to AI.

- Standardizes the technical language for underlying principles and their application to AI systems.

- Covers policies, procedures, and practices for assessing, treating, monitoring, reviewing, and recording risk.

NIST AI Risk Management Framework (AI RMF 1.0):

- A guide for government agencies and the private sector on managing new AI risks.

- Promotes responsible AI and provides specificity in implementing controls and policies.

Nvidia NeMo Guardrails:

- Provides a flexible interface for defining specific behavioral rails for bots.

- Supports the Colang modeling language and is used to prevent undesired behavior in AI applications.

Stanford Institute for Human-Centered Artificial Intelligence (HAI):

- Offers ongoing research and guidance for human-centered AI.

- Initiatives include "Responsible AI for Safe and Equitable Health," addressing ethical and safety issues in AI in health and medicine.

"Towards Unified Objectives for Self-Reflective AI":

- Authored by Matthias Samwald, Robert Praas, and Konstantin Hebenstreit.

- Takes a Socratic approach to identify assumptions, contradictions, and errors in AI through dialogue on truthfulness, transparency, robustness, and alignment of ethical principles.

World Economic Forum's "The Presidio Recommendations on Responsible Generative AI":

- Provides 30 action-oriented recommendations for navigating AI complexities and harnessing its potential ethically.

- Includes sections on responsible development and release of generative AI, open innovation, international collaboration, and social progress.

In AI policy and ethics, several key players and initiatives have taken action to ensure the responsible development and application of artificial intelligence. The Partnership on AI to Benefit People and Society was formed by tech giants like Amazon, Google, Facebook, IBM, and Microsoft.

This non-profit aims to establish best practices for AI technologies, fostering public understanding and serving as a crucial platform for discussions.

Apple joined this alliance in January 2017, underlining a collective commitment to ethical AI advancement. Additionally, the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems plays a pivotal role by creating and refining guidelines through public engagement.