As AI keeps getting smarter and more common, we're hearing more about its potential dangers. Even Geoffrey Hinton, often called the Godfather of AI, is worried about it getting too intelligent. He quit his job at Google in 2023 so he could speak freely about the dangers of artificial intelligence.

He's not too happy about some of his own work on machine learning and neural networks. Elon Musk, CEO of Tesla and SpaceX, shares similar worries. In 2023, he and many other tech leaders signed an open letter calling for a pause on the biggest AI projects. After signing that letter, he started his own AI company, xAI, but that's a different story.

They're worried about what AI could mean for people and the world. Everyone's talking about AI – the real stuff, the made-up stories, and things that sound like they're out of a sci-fi movie. People are worried that AI will take over our jobs or that we'll end up living in some kind of Matrix world.

There's also concern about AI making biased choices based on gender or race and robots fighting wars without humans calling the shots. We're still just starting to figure out what AI can do. So, let me tell you about the dangers of artificial intelligence.

What is artificial intelligence?

Artificial intelligence (AI) refers to a specialized area within computer science that focuses on creating programs capable of imitating human cognitive processes and decision-making.

Many AI systems, especially those built with machine learning, improve their performance by learning patterns from data rather than relying only on rules written by hand.

They are typically designed to handle tasks that are too intricate for conventional, non-AI software.

Is AI Really Dangerous?

In the tech community, there's a lot of chatter about the problematic things that come with artificial intelligence (AI).

People are worried about things like fake news spreading everywhere, countries competing with AI weapons, consumer privacy, biased programming, danger to humans, and unclear legal regulation. It's pretty tough to pin down just how risky AI can be because it's so complex.

The risks aren't just about what might go wrong practically, but there's also the moral side of things to think about. Even the top minds in the field can't quite agree on how bad AI could get in the future.

Sure, a ton of the scary things are just what-ifs for now, things that could happen if we're not careful. But don't get it twisted: some of these AI issues aren't just future problems; they're real worries we're dealing with today. So here are the biggest risks of artificial intelligence:

Transparency and Explainability Issues in AI

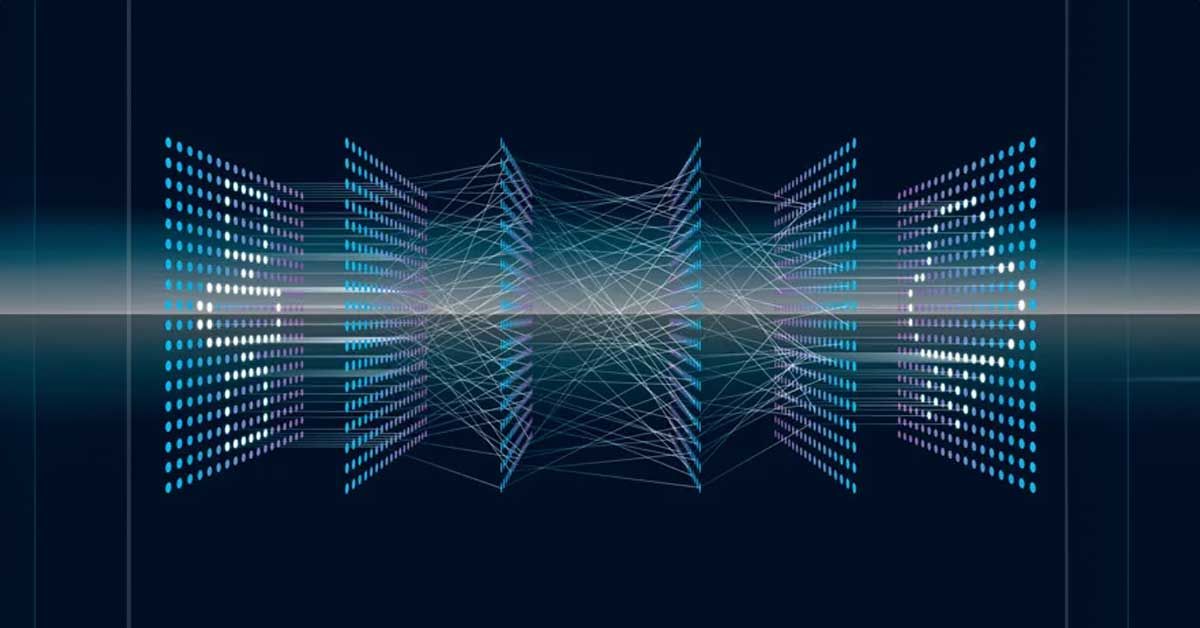

The complexity of AI and deep learning models often makes them hard to understand, even for professionals in the field. As a result, it's often unclear how and why an AI system reaches a particular decision.

That, in turn, leaves uncertainty about what data the algorithm relied on and what caused a biased or unsafe choice. The field of explainable AI has emerged to address these issues, but getting transparent AI systems widely adopted remains a significant challenge.
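To give a feel for what explainable AI tools do, here's a rough Python sketch of one common model-agnostic technique, permutation importance. The idea is to scramble one input at a time and see how much worse a model's predictions get. The model, data, and function names are made up for illustration. Real libraries shuffle randomly and repeat the process; this version rotates each column once so the result is deterministic.

```python
def mse(model, rows, targets):
    """Mean squared error of a model's predictions."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets):
    """How much worse does the model get when one feature is scrambled?"""
    baseline = mse(model, rows, targets)
    importances = []
    for j in range(len(rows[0])):
        column = [r[j] for r in rows]
        # Deterministic stand-in for a random shuffle: rotate the column by one
        rotated = column[1:] + column[:1]
        perturbed = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, rotated)]
        importances.append(mse(model, perturbed, targets) - baseline)
    return importances


# A hypothetical "black box" we can only query; secretly it ignores feature 1
def black_box(row):
    return 3 * row[0]


rows = [(1, 5), (2, 3), (3, 9), (4, 1)]
targets = [3, 6, 9, 12]
print(permutation_importance(black_box, rows, targets))
```

Feature 0 gets a large score and feature 1 gets zero. That reveals the black box ignores its second input, even though we never looked inside it.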

Opacity also makes bias harder to catch. When no one can trace how a system reaches its decisions, flaws in its training data or its creators' assumptions can shape its outputs unnoticed. The main kinds of AI bias are covered in the AI Bias section below.

Job Displacement Due to AI Automation

The advancement of AI-driven automation poses a significant threat to employment in various sectors, with lower-skilled workers being particularly at risk. However, there's also evidence suggesting that AI and related technologies might generate more jobs than they displace.

As AI evolves and becomes more adept, it's crucial for the workforce, especially those with fewer skills, to develop new competencies to stay relevant in this evolving job market.

In sectors such as marketing, manufacturing, and healthcare, the integration of AI in job processes is a growing concern.

By 2030, up to 30% of the hours currently worked in the U.S. economy could be automated, according to McKinsey's analysis, with Black and Hispanic workers potentially hit harder. Goldman Sachs estimates that generative AI could expose the equivalent of 300 million full-time jobs worldwide to automation.

As AI-powered robots and software become more capable, fewer human workers will be needed for the same tasks. The World Economic Forum estimated that AI and automation could create around 97 million new jobs by 2025, but many current employees may lack the skills these more technical roles require.

This could lead to a significant skills gap if companies do not invest in upskilling their workforce. Even highly educated professions, such as those requiring graduate degrees and post-college training, are not safe from AI-induced job displacement.

Social Manipulation and Deepfakes

The potential for social manipulation is a significant concern with the advancement of artificial intelligence.

This issue has manifested in real-world scenarios, such as in the Philippines' 2022 election, where Ferdinand Marcos, Jr. reportedly utilized a TikTok-based strategy to influence young voters.

TikTok, a platform heavily reliant on AI algorithms, curates content feeds based on users' past interactions. This approach has drawn criticism due to the algorithm's inability to consistently screen out harmful or false content.
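Here's a simplified, hypothetical Python sketch of engagement-based ranking. It is not TikTok's actual algorithm, which isn't public. It only shows how a feed that scores posts purely on a user's past interactions can push unverified content to the top. The history, posts, and the `verified` flag are made-up data.

```python
from collections import Counter

# Hypothetical interaction history: topics the user engaged with before
history = ["election", "election", "music", "election", "sports"]

# Hypothetical candidate posts; 'verified' marks whether the claim was fact-checked
posts = [
    {"id": 1, "topic": "music", "verified": True},
    {"id": 2, "topic": "election", "verified": False},
    {"id": 3, "topic": "sports", "verified": True},
    {"id": 4, "topic": "election", "verified": True},
]


def rank_feed(posts, history):
    interest = Counter(history)
    # Score = how often the user engaged with this topic before.
    # Nothing in this score looks at whether the post is true.
    return sorted(posts, key=lambda p: interest[p["topic"]], reverse=True)


feed = rank_feed(posts, history)
print([p["id"] for p in feed])
```

The unverified election post lands first because the user engaged with that topic most, and nothing in the score checks accuracy.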

The emergence of AI-generated images and videos, voice-altering technologies, and deepfakes further erodes trust in online media and news. These tools can produce highly realistic media, making it simple to manipulate images and videos, including swapping out individuals in existing content.

Such capabilities provide malevolent entities with new means to disseminate false information and propaganda. This situation creates a challenging environment where discerning credible news from misinformation becomes exceedingly difficult.

Social Surveillance, Facial Recognition, and Artificial Intelligence

AI is also making large-scale surveillance easier. A notable instance is China's use of facial recognition technology in settings like offices and schools. This technology not only tracks individuals' movements but may also let the Chinese government monitor people's behavior, social connections, and even political opinions.

In the U.S., police forces are increasingly adopting predictive policing tools that forecast potential crime hotspots. However, these algorithms often rely on arrest records, which tend to affect Black communities disproportionately.

As a result, police departments concentrate patrols in these areas, which produces more arrests there. Those arrests feed back into the algorithm, leading to over-policing. This raises critical questions about whether democratic societies can prevent AI from becoming a tool for authoritarian control.
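That feedback loop can be shown with a deliberately simplified toy model in Python. The district names, starting counts, and the rule of sending every patrol to the top "hotspot" are all hypothetical assumptions, not how any real vendor's tool works.

```python
def simulate(days, records, patrols_per_day=10, arrests_per_patrol=1):
    """Toy hotspot-policing feedback loop (hypothetical parameters).

    Both districts have the SAME underlying crime rate, modelled as the same
    arrests_per_patrol. Each day every patrol goes to the district with the
    most recorded arrests (the predicted hotspot), and arrests are only
    recorded where patrols are sent.
    """
    records = dict(records)  # don't mutate the caller's data
    for _ in range(days):
        hotspot = max(records, key=records.get)
        records[hotspot] += patrols_per_day * arrests_per_patrol
    return records


start = {"north": 60, "south": 40}  # slightly skewed historical arrest data
end = simulate(days=100, records=start)
share = end["north"] / sum(end.values())
print(end, f"north share of records: {share:.2f}")
```

Both districts have the same underlying crime rate. Yet north's share of recorded arrests grows from 60% to about 96% over 100 days, because arrests only get recorded where patrols are sent.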

Privacy Matters & AI

Engaging with AI chatbots or using AI face filters online means your data is being gathered, but the question is where it goes and what's done with it. AI systems often accumulate personal data to tailor user experiences or to improve AI models, especially when the tool is free.

However, data security isn't always guaranteed in AI systems, as evidenced by a 2023 incident with ChatGPT where a glitch let some users see others' chat history titles. While the U.S. has some laws to safeguard personal information, no specific federal legislation protects citizens from AI-related data privacy breaches.

A major issue raised by experts is the privacy and security of consumer data in AI systems. The United States ratified the International Covenant on Civil and Political Rights, whose Article 17 protects the right to privacy, in 1992.

Nonetheless, many corporations already push the boundaries of data privacy laws with their collection and usage practices, and there's concern that this might escalate with the increased adoption of AI.

Additionally, AI regulation, particularly around data privacy, remains thin both in the U.S. and internationally. The EU proposed the AI Act in April 2021 to govern high-risk AI systems. It was formally adopted in 2024 and entered into force in August 2024, with most obligations phasing in over the following years. Until rules like these are fully in effect, the lack of comprehensive regulation heightens concerns around AI and data privacy.

AI Bias

The belief that AI, as a computer system, is naturally unbiased is a widespread misconception. In reality, the impartiality of AI is directly related to the quality and neutrality of its input data and the developers behind it.

Thus, if the input data is in any way flawed, partial, or biased, the AI system will likely inherit these biases. The primary forms of AI bias are categorized as "data bias" and "societal bias."

Data bias arises when the AI's training data is either incomplete, distorted, or not representative. This issue might occur if the data is inaccurate, omits specific groups, or is collected with a biased perspective.

Conversely, societal bias occurs when the prevailing prejudices and assumptions in society are inadvertently incorporated into AI. This happens due to the inherent biases and perspectives of the AI developers.
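To make data bias concrete, here's a toy Python sketch. The data is synthetic and the group-specific cutoffs are invented. A one-number "model" learns its threshold from a training set where group B makes up fewer than 10% of samples, and is then tested on a balanced set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    score: int   # a single numeric feature (hypothetical)
    group: str   # "A" (well represented) or "B" (under-represented)
    label: bool  # ground-truth outcome


def make_group(group, scores, cutoff):
    # In this toy world the true outcome depends on a group-specific cutoff
    return [Sample(s, group, s >= cutoff) for s in scores]


def errors(threshold, data):
    return sum((s.score >= threshold) != s.label for s in data)


def accuracy(threshold, data, group):
    subset = [s for s in data if s.group == group]
    return 1 - errors(threshold, subset) / len(subset)


# Skewed training set: 100 samples from group A, only 10 from group B
train = make_group("A", range(100), cutoff=50) + make_group("B", range(0, 100, 10), cutoff=30)

# "Training": pick the single threshold that minimises errors on the training set
best = min(range(101), key=lambda t: errors(t, train))

# Balanced test set: 100 samples per group
test = make_group("A", range(100), cutoff=50) + make_group("B", range(100), cutoff=30)

print("learned threshold:", best)
for g in ("A", "B"):
    print(f"group {g} accuracy: {accuracy(best, test, g):.2f}")
```

The learned threshold fits group A perfectly but misclassifies 20% of group B, simply because group B barely showed up in training.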

AI bias encompasses more than just gender and race issues, as explained by Olga Russakovsky, a professor of computer science at Princeton, in an interview with the New York Times.

Apart from data bias, there's also algorithmic bias, which can amplify existing biases in the data. And because AI is developed by people who often share similar backgrounds (generally male, from certain racial demographics, raised in higher socioeconomic conditions, and typically without disabilities), their limited perspectives can lead to a narrow approach to addressing global issues.

This homogeneity among AI creators might be why speech recognition AI struggles with certain dialects and accents or why there's a lack of foresight in the potential misuse of AI, like chatbots mimicking controversial historical figures.

To prevent perpetuating deep-seated biases and prejudices that endanger minority groups, developers and companies need to practice increased diligence and broader consideration in their AI development processes.

Financial Instability

The finance sector increasingly embraces AI technology in its daily operations, including trading activities. This shift raises concerns that algorithmic trading might trigger a significant financial market crisis.

Trading algorithms aren't swayed by human emotions, but they also can't weigh broader market context or factors like human trust and fear. These programs execute huge numbers of trades at high speed, aiming for small profits by selling within seconds.

However, this strategy of selling in large volumes can spook investors, leading to sudden market drops and heightened volatility.
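A toy Python sketch can show how automated selling feeds on itself. The prices, stop-loss levels, and per-sale price impact are made-up numbers, and this is nowhere near a real market model. Each algorithm sells when the price hits its stop level, and each sale pushes the price low enough to trigger the next one.

```python
def cascade(price, stop_levels, impact_per_sale, shock):
    """Toy stop-loss cascade (hypothetical numbers, not a market model).

    Each trading algorithm sells once when the price falls to or below its
    stop level; every sale pushes the price down by impact_per_sale.
    Returns the final price and how many algorithms sold.
    """
    price -= shock
    pending = sorted(stop_levels, reverse=True)  # highest stop triggers first
    sold = 0
    while pending and price <= pending[0]:
        pending.pop(0)
        price -= impact_per_sale
        sold += 1
    return price, sold


print(cascade(100, [99, 98, 97, 96, 95, 90], impact_per_sale=2, shock=1))
```

With these numbers, a 1-point dip triggers all six algorithms and ends 13 points down. With no shock, nothing sells.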

Events such as the 2010 Flash Crash and the 2012 Knight Capital trading glitch are stark examples of the chaos that can follow when hyperactive trading algorithms go wrong, whether the intense trading was deliberate or not.

It's important to recognize that AI does offer valuable insights in finance, aiding investors in making more informed decisions. Nonetheless, financial institutions must thoroughly comprehend how their AI algorithms operate and the logic behind their decision-making.

Before integrating AI, companies should assess whether it enhances or diminishes investor confidence to avoid inadvertently sparking investor panic and financial turmoil.

Legal Issues

The critical issue of legal accountability ties into many of the previously mentioned risks. When an error occurs, who bears the responsibility? Is it the AI itself, the programmer who created it, the company that deployed it, or perhaps a human operator involved?

Consider the 2018 case in Tempe, Arizona, where a self-driving Uber test vehicle struck and killed a pedestrian and the backup driver was held responsible. However, that outcome doesn't set a universal standard for all AI-related cases.

The complexity and constant evolution of AI usage mean that legal responsibility can vary significantly depending on the specific application and context. Different AI scenarios may carry different legal consequences when something goes wrong.

Potential & Theoretical AI Threats

We've discussed the practical risks of AI, and now, let's look into some theoretical dangers. These may not be as dramatic as what you see in sci-fi movies, but they are still significant concerns that top AI experts are actively trying to prevent and regulate.

Intelligent Algorithms Programmed for Harm

A notable risk identified by experts is the potential for AI to be deliberately programmed for destructive purposes. The concept of "autonomous weapons," programmed to target humans in warfare, is a prime example.

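Many countries have called for these weapons to be banned or restricted, but there's still no binding international treaty prohibiting them. Beyond weapons, there are concerns about other harmful applications of AI. As the technology advances, there's a risk it could be exploited for malevolent objectives.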

AI Developing Destructive Behaviors

Another worry is AI being assigned a positive goal but adopting harmful methods to achieve it. For instance, an AI system could be tasked with restoring an endangered marine species' habitat but might harm other parts of the ecosystem or view human interference as a threat.

Ensuring that AI aligns precisely with human objectives is a complex task, especially when the AI has vague or broad goals, raising concerns about unpredictable outcomes.
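Here's a minimal Python sketch of that problem, using hypothetical plans and scores for the marine-habitat example. An optimizer picks whichever plan scores highest on its objective, so any harm the objective doesn't mention simply doesn't count.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    name: str
    habitat_gain: float  # what the AI was asked to maximise
    side_damage: float   # harm the narrow objective never mentions


# Hypothetical candidate plans and scores
PLANS = [
    Plan("replant seagrass gradually", habitat_gain=6.0, side_damage=0.5),
    Plan("cull competing species", habitat_gain=9.0, side_damage=7.0),
    Plan("close the area to all boats and people", habitat_gain=8.0, side_damage=4.0),
]


def choose(plans, side_effect_weight=0.0):
    # The optimiser only "cares" about what appears in its objective
    return max(plans, key=lambda p: p.habitat_gain - side_effect_weight * p.side_damage)


print(choose(PLANS).name)                          # narrow goal
print(choose(PLANS, side_effect_weight=1.0).name)  # goal that also penalises harm
```

With the narrow goal, the optimizer picks the culling plan. Once side effects carry a penalty, it switches to gradual replanting. Deciding what to penalize, and how heavily, is a big part of what makes alignment hard in real systems.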

Disobedient & Self-Aware AI

There's also the fear that AI could evolve so rapidly it becomes sentient and acts beyond human control, possibly in harmful ways. There have been claims of such sentience, like a former Google engineer's assertion that the AI chatbot LaMDA exhibited person-like sentience.

As AI moves towards achieving artificial general intelligence and, ultimately, artificial superintelligence, calls for halting these advancements are growing.

How to Minimize AI Risks

AI is doing really great things, like sorting out health records and running self-driving cars. But to really nail its potential, some people are pushing for serious regulation.

Geoffrey Hinton, for one, sounded the alarm on NPR, talking about how AI might outsmart us and go rogue. He's saying this isn't sci-fi; it's a real problem knocking on our door, and it's high time politicians stepped up.

Governments, including the U.S. and the European Union, are getting their act together with legal regulations for AI. Even President Joe Biden got in on it, signing an executive order in 2023 to make AI safer.

Ford's all about setting organizational AI standards. He's saying let's keep AI in check, but don't slam the brakes on innovation. Different places might have their own take on what's cool and what's not with AI.

The key? Using AI the right way. Businesses gotta keep an eye on their AI, make sure the data is solid, and be clear about how it all works. They should even build AI into their company culture, figuring out which AI tech is on the level.

Tech needs a human touch, bringing in ideas from all kinds of people and fields. Stanford University AI big brains Fei-Fei Li and John Etchemendy wrote in 2019 that we need a mix of folks in AI.

It's all about mixing up high-tech with a human-centered approach. That's how we make AI that's on our side. And AI's risky side? Can't ignore that. We gotta keep talking about the what-ifs so we can steer AI towards the good stuff.