Artificial intelligence has been making waves for decades, but have you ever wondered where it all began? And will AI someday match the cognitive abilities of a human being? The history of AI is a fascinating one.

Do you know who invented artificial intelligence? I have so much to tell, so buckle up, friend. We are about to take a trip down memory lane.

Who invented AI? - The Historical Evolution

Would you believe that the dream of a machine that can imitate human cognitive abilities goes all the way back to ancient Greece? Today we have a fancy term for it: Artificial General Intelligence (AGI).

Greek mythology has stories of mechanical men built by the gods. In some of them Hephaestus, the god of fire and the forge (Vulcan to the Romans), had mechanical servants that we would now call robots.

In the Renaissance, Leonardo da Vinci experimented with automata. He was fascinated by the idea of machines that could replicate human movements and actions, but the technology of his time was not advanced enough to take the idea far.

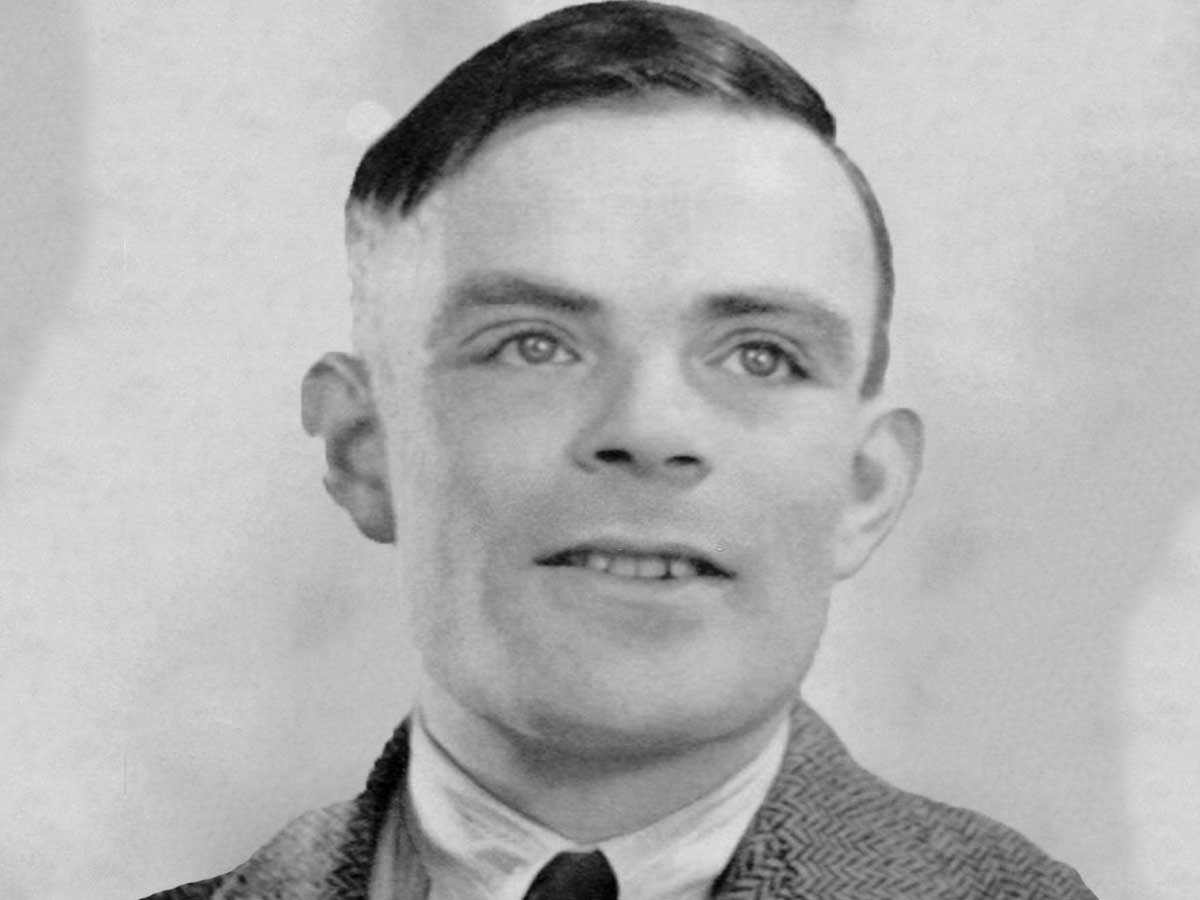

Things really started to heat up in the 20th century with names like Alan Turing. He introduced the world to the Turing machine, a theoretical concept that became the foundation for modern computers.

He set the idea out in a scholarly paper published in 1936, titled "On Computable Numbers, with an Application to the Entscheidungsproblem."

In this paper, he described a versatile machine that could carry out any computation that can be written down as a set of instructions. It was a groundbreaking idea, and it shaped what we call computing today.

Who is the father of AI?

Alan Turing was a British logician and computer pioneer who contributed significantly to the AI field in the mid-20th century. His theoretical work in 1935 laid the foundation for the concept of artificial intelligence: he described an abstract computing machine with remarkable attributes.

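This machine had a limitless memory and a scanner that moved back and forth through that memory, symbol by symbol, reading what it found and writing further symbols as directed by a set of instructions. What was truly groundbreaking was that the instructions were stored in the same memory, so the machine could operate on, and even modify, its own program. This conception is now known as the universal Turing machine.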
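To make that description concrete, here is a minimal sketch of such a machine in Python. The function name `run_turing_machine`, the rule format, and the bit-flipping example are illustrative assumptions, not something taken from Turing's paper.

```python
from collections import defaultdict


def run_turing_machine(rules, tape, state="start", blank="_", max_steps=10_000):
    """Simulate a simple one-tape machine.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R". The machine halts when no rule applies.
    """
    # The "limitless memory": any cell not yet written reads as blank.
    cells = defaultdict(lambda: blank, enumerate(tape))
    head = 0  # the "scanner" position
    for _ in range(max_steps):
        key = (state, cells[head])
        if key not in rules:
            break
        write, move, state = rules[key]
        cells[head] = write
        head += 1 if move == "R" else -1
    used = [i for i, symbol in cells.items() if symbol != blank]
    if not used:
        return "", state
    return "".join(cells[i] for i in range(min(used), max(used) + 1)), state


# Hypothetical example program: flip every bit, then halt at the first blank.
FLIP_RULES = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}
```

Calling `run_turing_machine(FLIP_RULES, "1011")` returns `("0100", "halt")`. Note that the rules here live in a separate table for readability; in a universal machine they would sit on the tape itself.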

Fast forward to today, all modern computers share the DNA of these universal Turing machines.

During World War II, Turing worked as a cryptanalyst at the Government Code and Cypher School in Bletchley Park, England, so he could not turn to building a computing machine until the war in Europe ended in 1945.

Even so, he spent the war years thinking about machine intelligence. Discussions with colleagues often revolved around the idea that computers could learn from experience and solve new problems by following guiding principles, a process now known as heuristic problem-solving.

In his 1947 public lecture, Turing voiced the desire for a machine that could learn from experience, underlining the importance of a machine being able to alter its own instructions.

In 1948, he delved further into the realm of Artificial intelligence, presenting central concepts in a report titled "Intelligent Machinery," though the paper remained unpublished.

One of Turing's original ideas was to train a network of artificial neurons to perform specific tasks, an approach now known as connectionism.

In 1950, he introduced the now-famous Turing test, a practical way to determine if a computer can "think." He avoided the thorny debate of defining thinking and instead suggested an imitation game.

This test involves a remote human interrogator who must distinguish between a computer and a human based on their replies to questions. The more a computer can mimic human-like responses, the closer it is to passing the test. However, this test isn't without its criticisms.

In 1980, the philosopher John Searle proposed a thought experiment known as the "Chinese room" argument, a powerful critique of the Turing test. The scenario involves a person with no knowledge of Chinese, locked in a room with a manual for responding to questions written in Chinese.

From the perspective of the Chinese speakers outside, the room passed the Turing test. But, as Searle argued, the person inside was merely following a manual, not actually thinking.

Turing himself was more optimistic. He predicted that by the year 2000, a computer would be able to play the imitation game so well that an average interrogator would have no more than a 70-percent chance of correctly identifying whether they were talking to a machine or a human after just five minutes of questioning. For all of this, he is remembered as the father of AI.

Birth and Winter of Artificial Intelligence

The years from the 1940s to the 1980s saw the birth of artificial intelligence (AI). Christopher Strachey wrote the earliest successful AI program back in 1951. He later went on to direct the Programming Research Group at the University of Oxford.

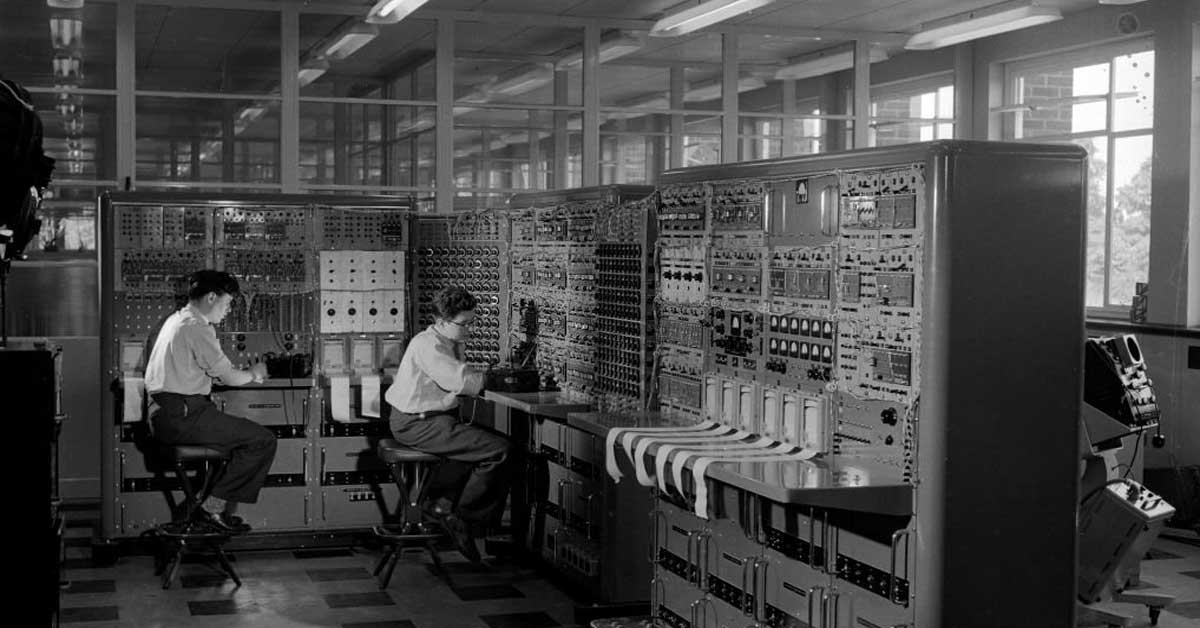

His groundbreaking checkers (draughts) program ran on the Ferranti Mark I computer at the University of Manchester, England. By 1952, it could play a complete game of checkers at a reasonable speed.

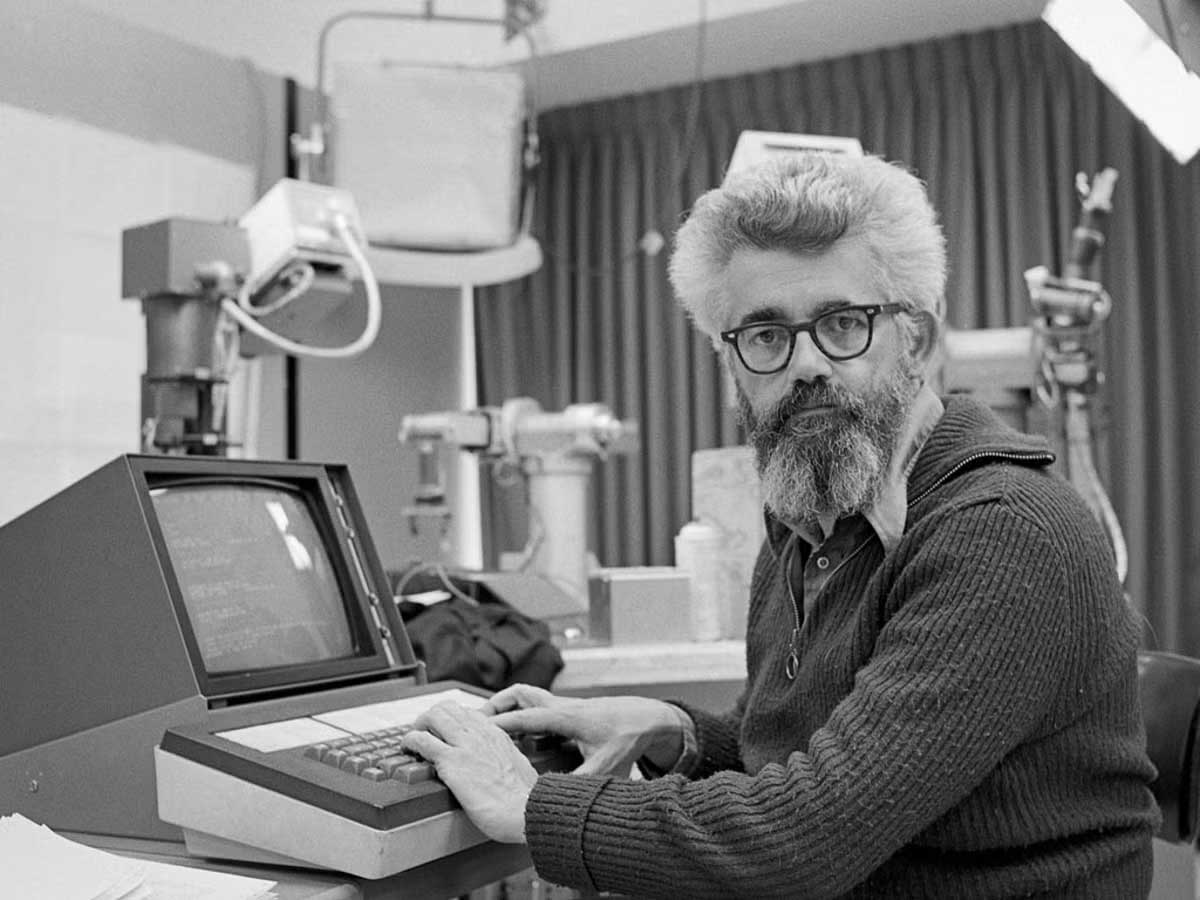

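Around the same time, Anthony Oettinger at the University of Cambridge used the EDSAC computer to run his creation, Shopper, an early demonstration of machine learning. Shopper searched a simulated mall for an item and memorized some of what each shop stocked, so the next time it was sent for an item it had already seen, it went straight to the right shop. This kind of learning is as basic as it gets and is known as rote learning.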
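Rote learning is simple enough to sketch in a few lines. The Python below is loosely modeled on the Shopper idea and is not a reconstruction of Oettinger's program: the class name `RoteShopper`, the shop data, and the choice to memorize every item in a visited shop are all assumptions made for illustration.

```python
import random


class RoteShopper:
    """Searches shops at random and memorizes where items were seen."""

    def __init__(self, shops, seed=0):
        self.shops = shops               # shop name -> set of items stocked
        self.memory = {}                 # item -> shop where it was seen
        self.rng = random.Random(seed)   # seeded so runs are repeatable

    def find(self, item):
        """Return (shop, visits), where visits counts the shops entered."""
        if item in self.memory:
            return self.memory[item], 1  # remembered: go straight there
        order = list(self.shops)
        self.rng.shuffle(order)          # no knowledge yet: wander at random
        for visits, shop in enumerate(order, start=1):
            for stocked in self.shops[shop]:
                self.memory[stocked] = shop  # rote learning: just store it
            if item in self.shops[shop]:
                return shop, visits
        return None, len(order)


# Hypothetical mall used only for this example.
EXAMPLE_SHOPS = {
    "grocer": {"milk", "eggs"},
    "bakery": {"bread"},
    "hardware": {"nails"},
}
```

The first `find("bread")` may take up to three visits, depending on the random order. Any later call for bread, or for anything else seen along the way, takes exactly one. Nothing is generalized; the program only recalls what it has already encountered.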

This era saw the rise of pioneering minds like Norbert Wiener, Warren McCulloch, and the great Alan Turing.

It was during these years that the term "artificial intelligence" was coined, and it is attributed to none other than John McCarthy, who was then at Dartmouth College and later worked at MIT and Stanford.

In the early stages of AI development, computers had a fundamental limitation: memory. The earliest machines could execute commands but could not store them, so they had no way to remember what they had done.

Another formidable roadblock was the exorbitant price tag of computing. In the early 1950s, computers were a luxury that only prestigious universities and big technology companies could afford. Leasing one could cost as much as $200,000 a month.

Funding sources needed a proof of concept, along with high-profile advocates, to be convinced that machine intelligence was a worthy pursuit. That is when AI pioneers and computer scientists like Allen Newell, Cliff Shaw, and Herbert Simon stepped into the spotlight.

In 1956, these visionaries introduced the world to the Logic Theorist. The Logic Theorist aimed to replicate human-like reasoning processes, and it was funded by the Research and Development (RAND) Corporation.

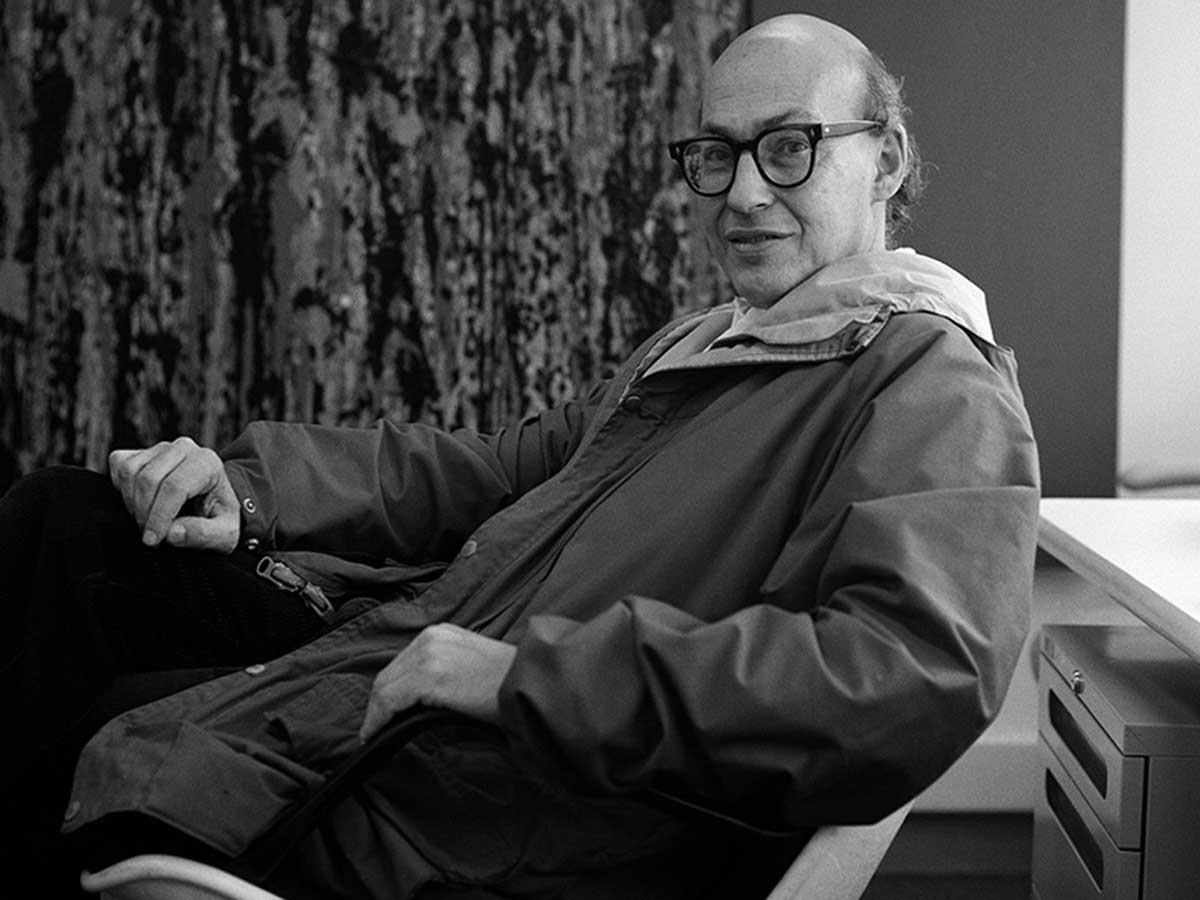

In 1956, the famous Dartmouth Summer Research Project on Artificial Intelligence (DSRPAI) took place. This was basically an open-ended discussion on the possibilities of Artificial intelligence. It was a significant occasion hosted by John McCarthy and Marvin Minsky.

In 1970, Marvin Minsky boldly claimed to Life Magazine that within "three to eight years, we will have a machine with the general intelligence of an average human being." in other words, it is equal to human intelligence.

However, At the time, computers were millions of times too weak to exhibit such intelligence.

But In the 1980s, the field experienced a resurgence. This period marked a shift with the introduction of "deep learning" techniques championed by figures like John Hopfield and David Rumelhart.

From 1957 to 1974, computers became faster, more accessible, and, importantly, more affordable.

Simultaneously, there were considerable advancements in machine learning algorithms. AI Researchers were getting better at understanding which algorithms to apply to different problems, paving the way for more sophisticated Artificial intelligence solutions.

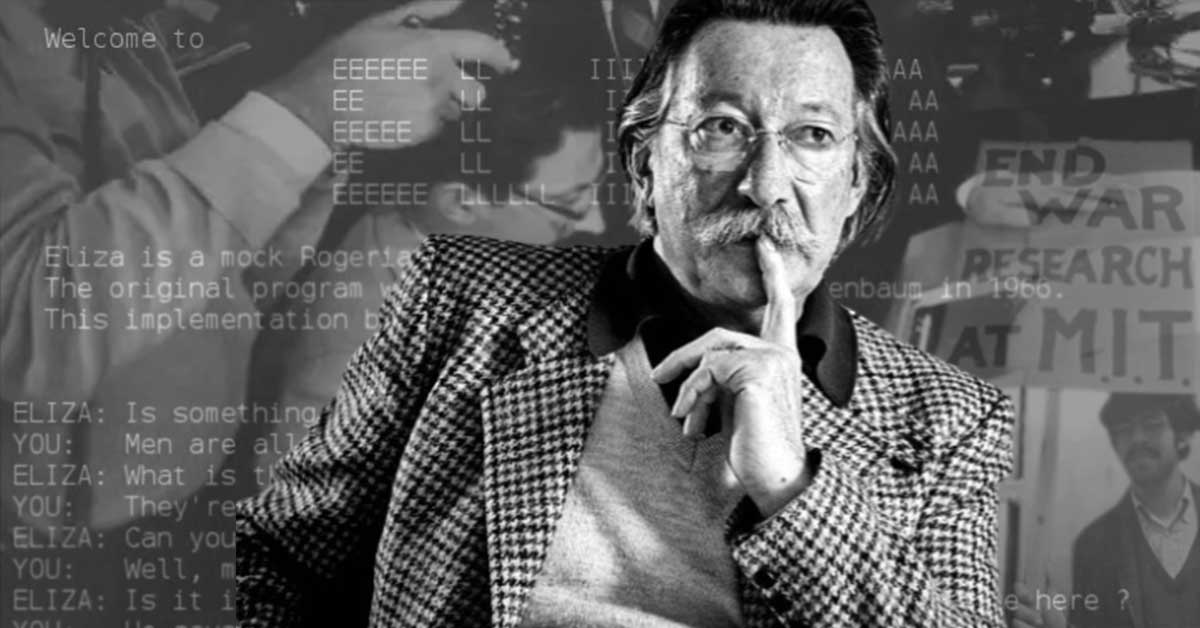

Notable examples during this era include Newell and Simon's General Problem Solver and Joseph Weizenbaum's ELIZA.

The government Agencies such as the Defense Advanced Research Projects Agency (DARPA) began funding AI research projects.

They were interested in applications, AI research projects like machine-based language translation and high-throughput data processing.

What is the United States' first AI program?

The first AI program to run in the United States was also a checkers program, written by Arthur Samuel. In 1952, he wrote it for the prototype of the IBM 701. In 1955, he added features that allowed the program to learn from experience.

He incorporated mechanisms for both rote learning and generalization. The improvements culminated in 1962, when Samuel's program won a game against a former Connecticut checkers champion.

However, the road to AI supremacy was far from smooth. Early AI machines had limited memory and processing power and were, quite frankly, far from what we imagine today. When the bold predictions failed to materialize, funding and interest in AI research declined in the mid-1970s. This period is called the AI winter.

Expert Systems and Setbacks: 1980-1990

Science fiction of the era, typified by the 1968 movie "2001: A Space Odyssey," raised scary ethical questions about the future of artificial intelligence.

The late 1970s brought a significant game-changer in the form of microprocessors. We could say these chips were behind the golden age of expert systems. These systems were designed to mimic human decision-making or, to be exact, the decision-making of experts in specialized fields.

Do you know what Moore's Law is?

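One of the most critical factors in this transformation was Moore's Law. Named after Gordon Moore, one of the co-founders of Intel, this "law" is the observation that the number of transistors on a chip, and with it roughly the memory and processing power of computers, doubles about every two years. (Moore's original 1965 estimate was a doubling every year; he revised it to two years in 1975.)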
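The arithmetic behind that kind of doubling is worth seeing once. This is a small sketch, with the function name `projected_growth` invented for illustration. The doubling period is left as a parameter, because it is quoted as one year in some accounts and two years in others.

```python
def projected_growth(years, doubling_period_years=2.0):
    """Growth factor after `years` if capacity doubles once per period."""
    return 2 ** (years / doubling_period_years)
```

With a two-year period, `projected_growth(10)` gives `32.0`, so a decade buys a 32-fold increase. With a one-year period, `projected_growth(10, 1)` gives `1024.0`. The gap between those two numbers shows how much the assumed period matters.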

Two noteworthy examples were DENDRAL and MYCIN, both developed at Stanford University. These expert systems used what we call inference engines, which apply if-then rules to known facts to reach conclusions and make decisions. However, they had a drawback, often called the "black box" effect: the systems could provide solutions, but understanding how they got there was often as hard as peering into a black box.
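For a feel of what an inference engine does, here is a minimal sketch of one common strategy, forward chaining, in Python. It is not how DENDRAL or MYCIN were actually implemented. The function name `forward_chain` and the toy rules are assumptions for illustration only, and the rules are not real medical knowledge.

```python
def forward_chain(facts, rules):
    """Apply if-then rules until no new facts can be derived.

    rules is a list of (premises, conclusion) pairs. Returns the final
    set of facts and a trace of which premises produced each new fact.
    """
    known = set(facts)
    trace = []
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire a rule only if all its premises hold and it adds something.
            if conclusion not in known and set(premises) <= known:
                known.add(conclusion)
                trace.append((tuple(premises), conclusion))
                changed = True
    return known, trace


# Hypothetical toy rules, invented for this example.
TOY_RULES = [
    (("fever", "cough"), "possible_flu"),
    (("possible_flu", "fatigue"), "recommend_rest"),
]
```

Running `forward_chain({"fever", "cough", "fatigue"}, TOY_RULES)` derives both `possible_flu` and `recommend_rest`. Recording a trace like this is one way to make the chain of reasoning inspectable, although with hundreds of interacting rules the trace itself becomes hard to follow.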

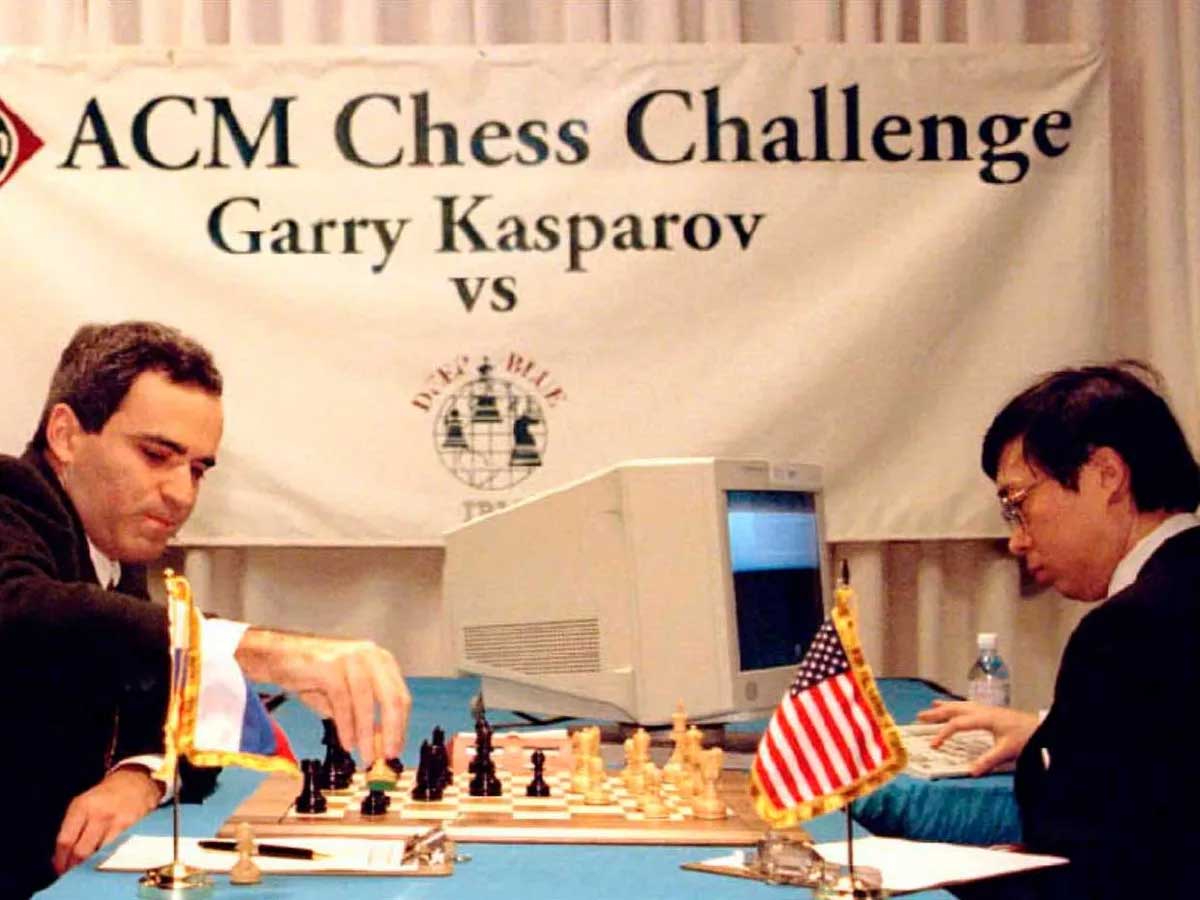

In 1997, IBM's chess-playing computer Deep Blue faced off against the world chess champion Garry Kasparov. And Deep Blue won. This was a huge win because chess has an enormous number of possibilities. Estimates of the number of possible games are on the order of 10^120, a figure known as the Shannon number.

Even the number of legal board positions is estimated at 10^40 or more, which is still a vast number! In fact, there are more potential variations of chess games than there are atoms in the observable universe. This victory relied largely on brute-force search rather than human-like cognitive abilities.

The Artificial Intelligence Resurgence

Fast forward to the 2010s, and we find ourselves in an AI resurgence. The catalysts for this resurgence were twofold: data and computing power.

With massive amounts of data available and the sheer efficiency of graphics processing units (GPUs), the chips found on computer graphics cards, artificial intelligence began to make remarkable strides.

In 2011, we witnessed a momentous occasion when IBM's Watson competed on the game show Jeopardy! and beat former champions Ken Jennings and Brad Rutter. Watson's victory was more than just a game; it demonstrated that artificial intelligence could excel at complex, knowledge-based tasks and answer questions posed in natural language.

But that was just the beginning. In 2012, a neural network built at Google's X lab achieved a feat that sounded straight out of science fiction: it learned to recognize cats in a massive collection of unlabeled images taken from YouTube videos. This may sound trivial, but it was a leap forward in image recognition and the beginning of machine learning making sense of visual data.

However, the true showstopper was AlphaGo, a creation of Google's DeepMind. In 2015 it defeated the European Go champion Fan Hui at DeepMind's London office, and in March 2016 it beat Lee Sedol, one of the world's best players. Lee retired from professional play in 2019, citing the rise of AI as a reason. AlphaGo was a testament to the power of deep learning.

Three names stand out prominently during this resurgence: Geoffrey Hinton, Yoshua Bengio, and Yann LeCun. These AI researchers, often referred to as the "Godfathers of AI," played a key role in advancing artificial neural networks, a fundamental component of artificial intelligence.

What is an Artificial Neural Network?

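An artificial neural network is a machine learning technique loosely inspired by how the human brain processes information. Layers of simple connected units, called neurons, pass signals to one another, and the strength of each connection is adjusted during training. Deep learning is built on neural networks with many such layers.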
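A tiny example helps show what those layers of neurons actually compute. The sketch below is plain Python with no libraries beyond `math`. The function names and the weights in `XOR_NET` are assumptions chosen by hand for illustration; a real network would learn its weights from data instead.

```python
import math


def sigmoid(x):
    """Squash any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of inputs, then sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(inputs, network):
    """Pass inputs through each (weights, biases) layer in turn."""
    activations = inputs
    for weights, biases in network:
        activations = layer(activations, weights, biases)
    return activations


# Hand-picked (not trained) weights that make the network behave like XOR.
XOR_NET = [
    ([[20.0, 20.0], [-20.0, -20.0]], [-10.0, 30.0]),  # hidden layer: OR, NAND
    ([[20.0, 20.0]], [-30.0]),                        # output layer: AND
]
```

Feeding in `[0, 1]` or `[1, 0]` produces an output close to 1, while `[0, 0]` and `[1, 1]` produce an output close to 0. A single neuron cannot compute XOR on its own, which is one reason stacking layers matters.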

Despite these remarkable achievements, challenges still loom on the AI horizon. AI's Achilles' heel remains text understanding. While AI systems have become proficient at recognizing patterns, truly understanding the nuances of human language remains a daunting challenge.

Moreover, building conversational agents that can hold natural, dynamic conversations without awkward hiccups is an ongoing journey for computer scientists and tech giants alike.

We find ourselves in the age of "big data," a time when we're drowning in information. The ultimate goal is general intelligence: a machine that matches or outsmarts humans at every cognitive task.

Although it might seem like science fiction, it's not too far-fetched. Yet, along the way, we can't forget the ethical questions artificial intelligence poses.

A serious conversation about policy, AI development, and ethics is on the horizon.

For now, let us make our lives easier with AI. Let me remind you of one quote from the movie Kung Fu Panda: "Yesterday is history, tomorrow is a mystery, but today is a gift. That is why it is called the present."