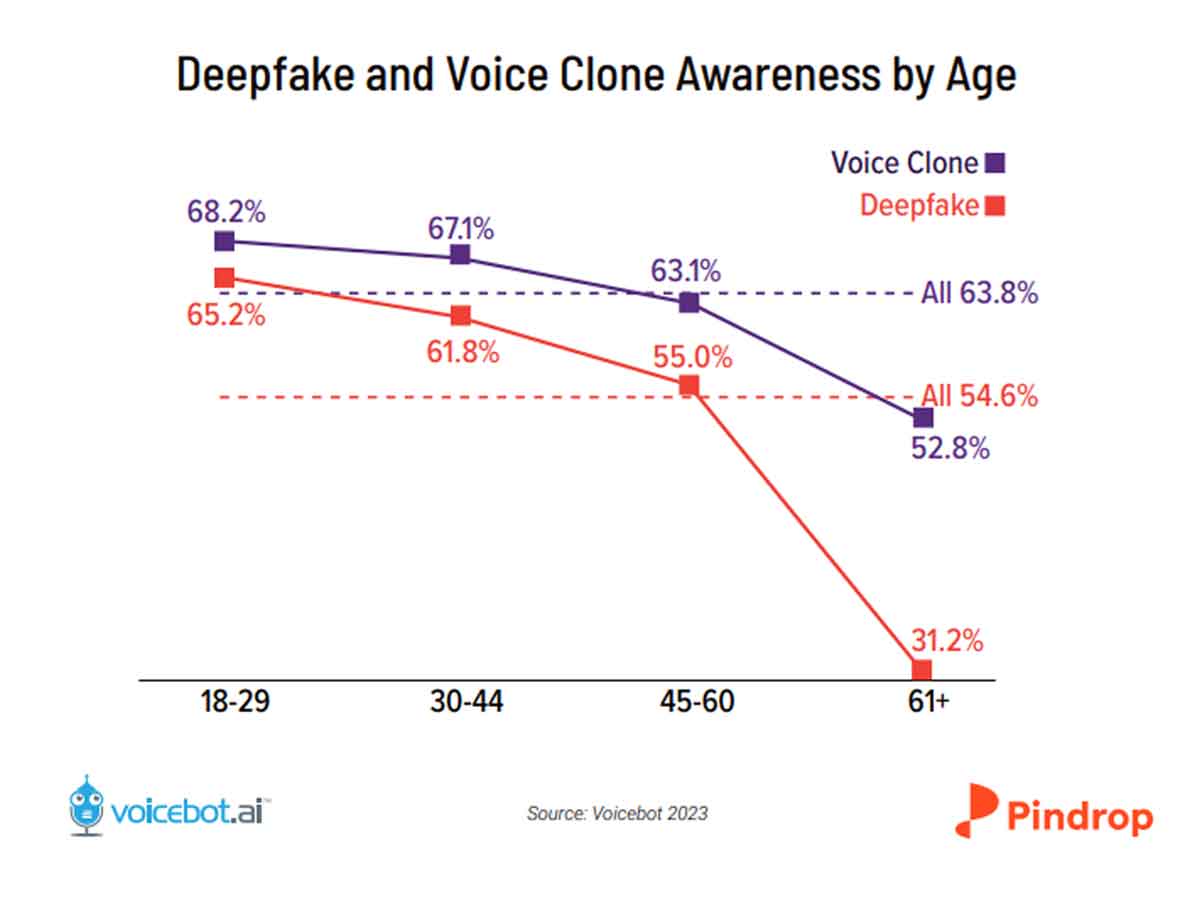

Artificial intelligence has become so skilled at mimicking people that telling what's genuine and what's fake has become difficult for almost half of the folks out there, according to surveys from Northeastern University, Voicebot.ai, and Pindrop.

Imagine waking up one day and finding your voice or face exploited by a sneaky AI on the internet. It's not a pleasant thought, right?

How Aware Are People of Deepfakes and Voice Cloning?

In July 2023, Synthedia, Voicebot.ai, and Pindrop surveyed 2,027 U.S. adults aged 18 or older online, giving us a pulse on the awareness of deepfake technology.

The participants were representative of U.S. Census demographic averages for online adults, a group that makes up 95% of the population. Over half of U.S. adults claimed to be in the loop about deepfakes, but it's a mixed bag: over one-third admitted they hadn't heard of them, and around 10.6% were unsure.

In this survey, men were more aware of deepfakes, with 66% in the know compared with 42% of women. Men also seemed more worried, and they were 50% more likely to have created a deepfake. Even so, deepfake creators stay under 10% of the aware group and a mere 4% of the entire U.S. adult population.

YouTube is the deepfake hotspot for men (52.4%) versus TikTok for women (43.1%). Switching gears, nearly two-thirds of U.S. adults are familiar with the term "voice clone," slightly outranking "deepfake." However, the clarity on what a voice clone really is remains murky, with definitions varying among consumers.

Despite this, the term has etched its presence across social media, traditional media, games, and even phone calls. Men, once again, take the lead in voice clone awareness (71%), expressing more concern and a 50% higher likelihood to create one, albeit staying under 10% of the deepfake-aware group and below 4% of the total U.S. adult population.

YouTube and TikTok remain battlegrounds for these voice-altering wonders, with men dominating the former (52.4% to 46.7%) and women shining on the latter (43.1% to 38.4%).

For celebrities like Scarlett Johansson, this nightmare became a reality when Lisa AI used her voice and face without permission to peddle an AI app.

Lots of people are getting this deepfake scam ad of me… are social media platforms ready to handle the rise of AI deepfakes? This is a serious problem pic.twitter.com/llkhxswQSw

— MrBeast (@MrBeast) October 3, 2023

That's not all. MrBeast faced a similar ordeal when an unauthorized deepfake showed him endorsing $2 iPhones. Crazy, right? So, how do we fight back against AI hijacking our voices for deepfakes?

Challenges of AI Mimicking Human Voices

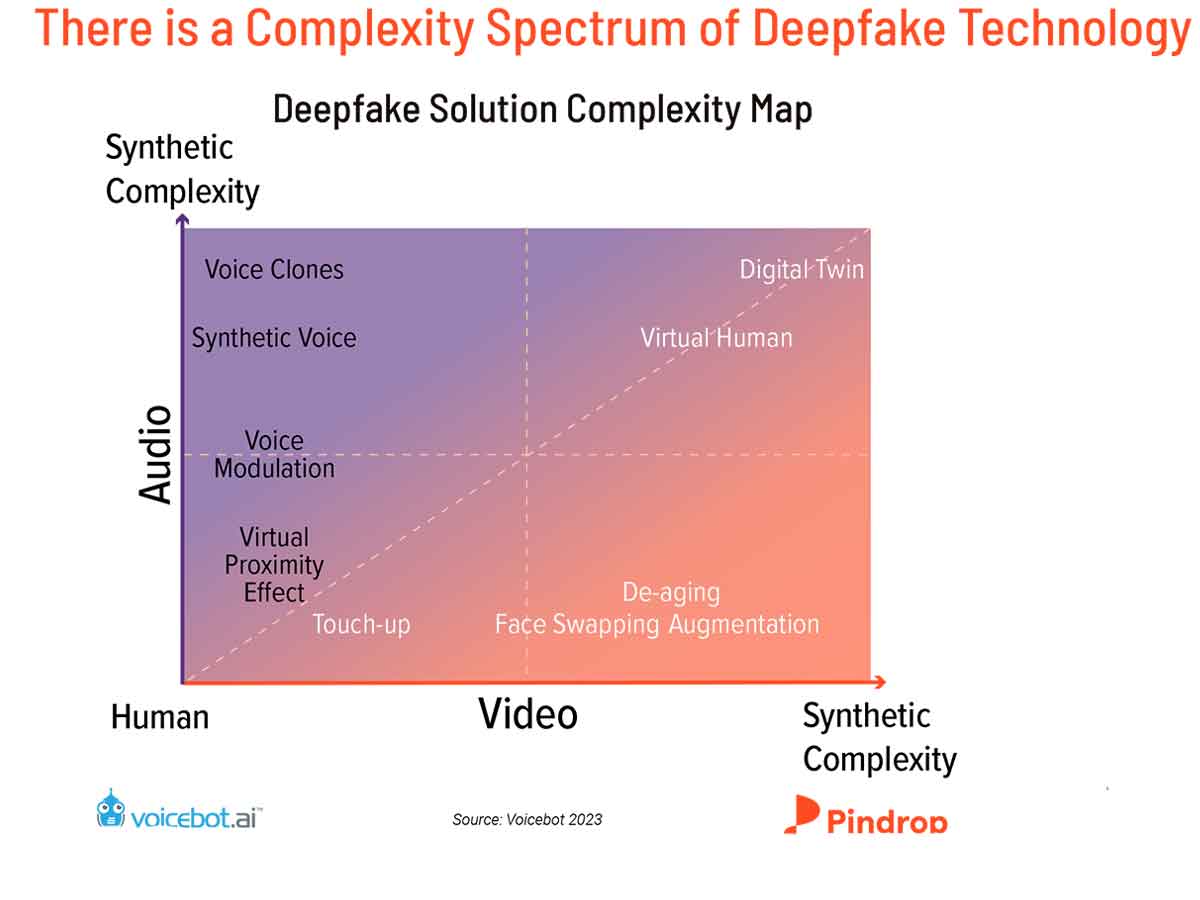

Speech synthesis or Text-to-Speech (TTS) is how written words are made into artificial human speech. It's been a game-changer, from helping folks with speech issues to powering your voice assistants.

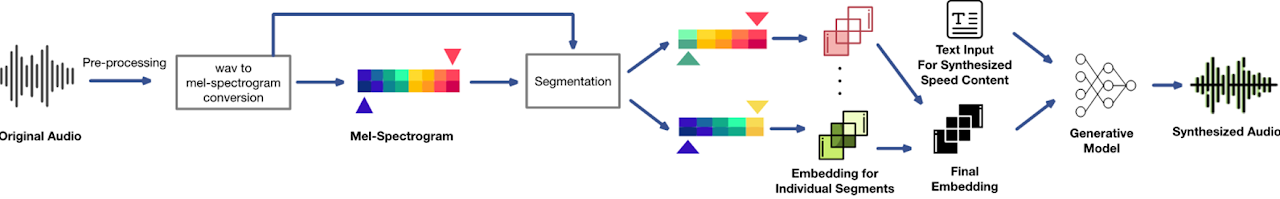

Deep neural networks (DNNs) are the technology behind speech synthesis or text-to-speech (TTS). This is how AI creates super-realistic speech, also known as "DeepFake" audio.

DeepFake audio poses real-world threats. For example, imagine fraudsters using DeepFake audio to impersonate a CEO's voice, pulling off a slick $243,000 scam over a phone call. Fast-forward to 2023, and DeepFake audio is breaking into bank accounts, spreading misinformation, and even spewing hate speech disguised as your favorite celebrities.

How to Detect Deepfake and Synthesized Audio?

Nowadays, several methods are used to detect deepfake and synthesized audio. The most popular is embedding digital watermarks in videos and audio so that AI-generated content can be identified later. Examples of such technologies include Google's SynthID and Meta's Stable Signature.

Companies like Pindrop and Veridas take a different approach, analyzing subtle details, such as how well the words sync with a speaker's mouth movements, to spot fakes. However, there's a catch: these methods work only on content that has already been published, leaving a gap where unauthorized videos might remain online for days before detection.
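To make the watermarking idea a bit more concrete, here is a minimal sketch of a classic spread-spectrum watermark: a faint pseudo-random pattern derived from a secret key is added to the audio, and a detector that knows the key checks whether the pattern is present. This is a toy illustration only, not how SynthID or Stable Signature work internally. The function names, the sine-wave stand-in for generated audio, and the deliberately exaggerated `strength` value are all assumptions made for the example.

```python
import math
import random


def sine_tone(freq_hz=440.0, rate=48000, seconds=1.0, amplitude=0.5):
    """Stand-in for a generated audio clip: a plain sine wave (assumption)."""
    count = int(rate * seconds)
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / rate)
            for n in range(count)]


def key_pattern(key, length):
    """Pseudo-random +1/-1 sequence that only the key holder can reproduce."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(length)]


def embed_watermark(samples, key, strength=0.02):
    """Add the key pattern to the audio at a low amplitude."""
    pattern = key_pattern(key, len(samples))
    return [s + strength * p for s, p in zip(samples, pattern)]


def detect_watermark(samples, key, strength=0.02):
    """Correlate the audio with the key pattern.

    Marked audio correlates at roughly `strength`; unmarked audio (or a
    wrong key) correlates near zero, so half the strength is the threshold.
    """
    pattern = key_pattern(key, len(samples))
    correlation = sum(s * p for s, p in zip(samples, pattern)) / len(samples)
    return correlation > strength / 2
```

With this sketch, `detect_watermark(embed_watermark(sine_tone(), key=1234), key=1234)` should flag the clip, while the unmarked tone or a wrong key should not. Real systems have to survive compression, re-recording, and editing, which this toy does not attempt.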

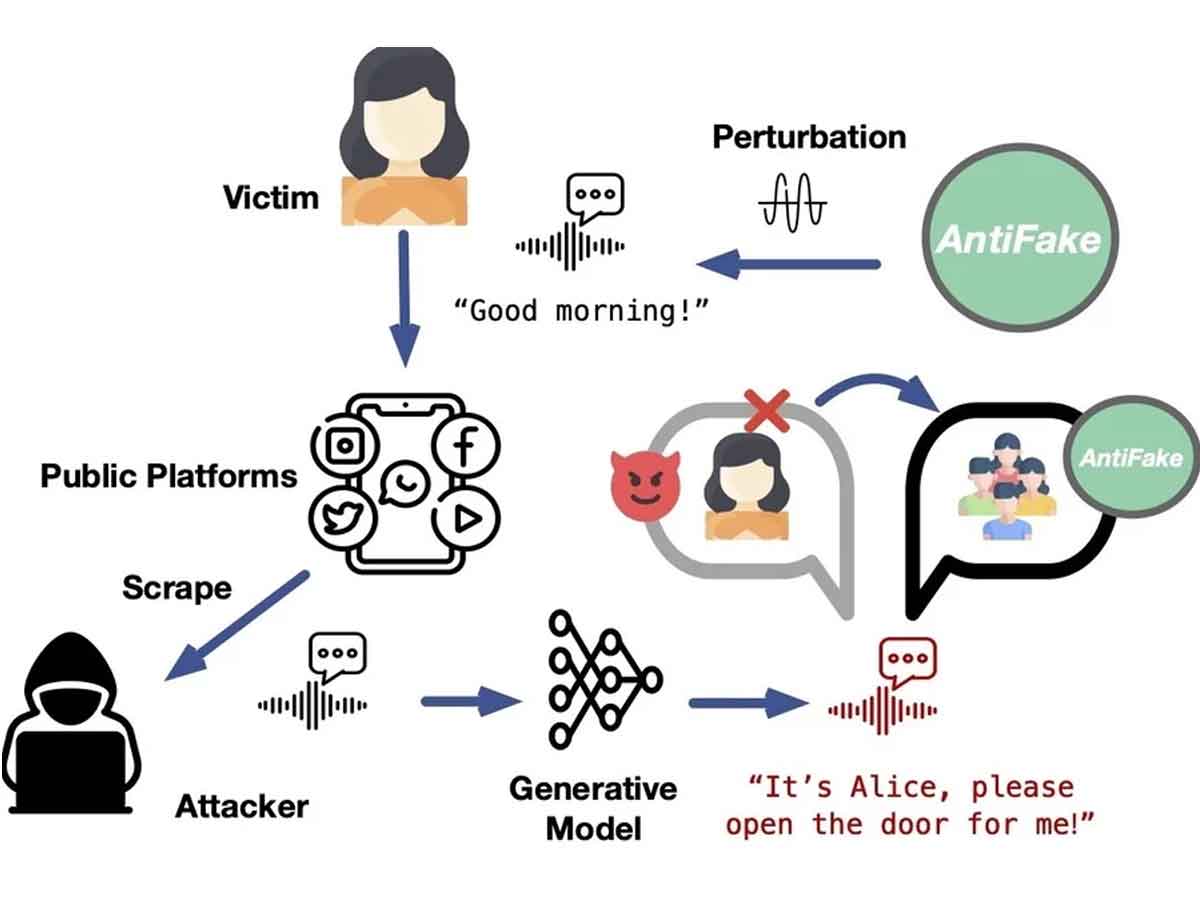

Then there are tools like AntiFake, which take a preventive approach: instead of spotting fakes after they are published, they aim to stop voice cloning in its tracks. I'll explain how it works later.

As Siwei Lyu, a computer science professor at the University at Buffalo, points out, this delay can lead to potential damage. Even with the challenges, there's a recognition of the need for balance in addressing deepfake concerns.

Rupal Patel, a professor at Northeastern University and VP at Veritone, highlights the importance of not losing sight of the positive aspects of generative AI. This technology can aid individuals like actor Val Kilmer, who relies on a synthetic voice due to throat cancer. It is also how the Beatles brought back John Lennon's voice for their final song.

Patel stresses the balance between protecting against misuse and ensuring the continued development of beneficial AI technology. It's about finding a middle ground to prevent throwing "the baby out with the bathwater," as she aptly puts it.

AntiFake Against AI Voice Hijacking

Ning Zhang, an assistant professor at Washington University in St. Louis, is the mastermind behind a groundbreaking creation—AntiFake. Drawing inspiration from the University of Chicago's Glaze, Zhang set out with a mission: to safeguard against the misuse of artificial intelligence.

How does AntiFake work, you may ask? It operates by skillfully scrambling signals, throwing a wrench into the plans of those attempting to generate effective copies. Before you hit the "publish" button on that video, AntiFake prompts you to upload your voice track. It can run as a standalone app or be accessed on the web.

AntiFake scrambles the audio signal, leaving AI models scratching their virtual heads. To the human ear, the modified track sounds perfectly normal, but to the system, it's a perplexing puzzle.
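The general idea of a change that is tiny for listeners but large for a model can be sketched with a toy example. The code below is not AntiFake's actual algorithm. It assumes a made-up linear "voice encoder" (random weights) as a stand-in for a real speech model, and it searches for a per-sample offset of at most `eps` that moves the encoder's output as far as possible. All names and parameter values here are hypothetical.

```python
import math
import random


def make_toy_encoder(n_samples, n_features=8, seed=0):
    """Hypothetical stand-in for a voice model: a fixed random linear map."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(n_samples)]
            for _ in range(n_features)]


def embed(encoder, samples):
    """Project the audio samples onto each encoder row."""
    return [sum(w * s for w, s in zip(row, samples)) for row in encoder]


def embedding_shift(encoder, original, modified):
    """Euclidean distance between the two clips as the encoder sees them."""
    e0, e1 = embed(encoder, original), embed(encoder, modified)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(e0, e1)))


def protect(encoder, samples, eps=0.002, steps=10, seed=1):
    """Find a perturbation bounded by eps that maximizes the embedding shift.

    Each step takes the gradient of the squared shift with respect to the
    perturbation and sets every sample's offset to +eps or -eps according
    to the gradient's sign, so no sample ever changes by more than eps.
    """
    rng = random.Random(seed)
    n = len(samples)
    delta = [rng.uniform(-eps, eps) for _ in range(n)]
    for _ in range(steps):
        shift = embed(encoder, delta)  # encoder is linear, so this is the shift
        # Gradient direction of the squared shift: transpose(W) applied to W @ delta.
        grad = [sum(row[i] * shift[k] for k, row in enumerate(encoder))
                for i in range(n)]
        delta = [eps if g >= 0 else -eps for g in grad]
    return [s + d for s, d in zip(samples, delta)]
```

In this toy setting, the optimized offsets shift the encoder's output far more than random noise of the same size would, even though each sample changes by no more than `eps`. Real speech models are nonlinear, and a real tool also has to account for how humans perceive the change, so treat this strictly as an illustration of the principle.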

Usability tests conducted with 24 human participants produced an average System Usability Scale (SUS) score of 87.60 (±4.81), a testament to its intuitive design and user-friendly interface.

Human-perceived speech quality scores averaged 3.54 (±0.59), further solidifying its position as a user-preferred tool in the fight against digital deception. However, AntiFake can't protect widely available voices already circulating on the web.
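For readers unfamiliar with SUS, the score comes from a standard ten-item questionnaire answered on a 1 to 5 scale. Odd-numbered items are positively worded and contribute their answer minus 1. Even-numbered items are negatively worded and contribute 5 minus their answer. The total is multiplied by 2.5 to give a score from 0 to 100. The sketch below shows that arithmetic. The sample answers are invented for illustration and are not data from the AntiFake study, and treating the "±" figure as a standard deviation is an assumption.

```python
from statistics import mean, stdev


def sus_score(responses):
    """Score one participant's ten SUS answers (each 1-5) on a 0-100 scale."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs exactly ten answers, each from 1 to 5")
    total = 0
    for index, answer in enumerate(responses):
        if index % 2 == 0:
            total += answer - 1   # items 1, 3, 5, 7, 9: positively worded
        else:
            total += 5 - answer   # items 2, 4, 6, 8, 10: negatively worded
    return total * 2.5


def summarize(scores):
    """Mean and sample standard deviation across participants.

    Assumption: the study's "±" value is a standard deviation.
    """
    return mean(scores), stdev(scores)


# Invented answers from one hypothetical participant.
print(sus_score([4, 2, 4, 2, 5, 1, 4, 2, 5, 1]))  # 85.0
```

A score in the high 80s, like the one reported here, sits well above the midpoint of the scale.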

Preventing Deepfake Abuses - The Laws & Consent

Consent is the linchpin when it comes to safeguarding ourselves from the potential harm of deepfake abuses. The U.S. Senate is in the throes of discussions surrounding the groundbreaking "NO FAKES Act of 2023."

If passed, this bipartisan bill would establish a federal right of publicity, mitigating the complexities arising from varying state laws. As it stands, only half of the U.S. states boast "right of publicity" laws, each with differing levels of protection.

The discussion surrounding this act not only delves into legal intricacies but underscores the fundamental importance of consent in curbing the unauthorized deployment of individuals' likenesses.

In October, the act gained momentum, signaling a potential shift toward a more secure landscape against the perils of deepfake manipulations. Yet, the journey to a comprehensive federal law remains a complex narrative, one that hinges on bridging legal divides and prioritizing the right of publicity in the digital era.

While lawmakers discuss bipartisan bills to hold deepfake creators liable, new tools may allow scammers and cybercriminals to jeopardize voice authentication and personal data on social media platforms. Tools like AntiFake, Google's SynthID, and Meta's Stable Signature are significant, but they won't protect against all threats, which underscores the need for strong cybersecurity measures.

The public must stay vigilant to detect fake online videos and protect against phishing attempts, ensuring the safety of personal and financial information.