Microsoft has entered the AI chip market, unveiling two custom-designed chips at its Ignite conference. The move is a strategic shift to reduce Microsoft's dependence on external hardware suppliers such as Nvidia, and it signals the company's commitment to staying at the forefront of the AI boom.

While Google, Amazon, Meta, IBM, and others have also produced AI chips, Nvidia, which has partnered with Foxconn, accounts for more than 70 percent of AI chip sales today.

Microsoft introduced the Azure Maia AI Accelerator, optimized for AI tasks and generative AI, alongside the Azure Cobalt CPU, an Arm-based processor tailored for general-purpose compute workloads on the Microsoft Cloud.

Azure Maia AI Chip and Azure Cobalt CPU

The Azure Maia AI chip and the Azure Cobalt CPU are expected to debut in 2024. The move responds in part to skyrocketing demand for Nvidia's H100 GPUs, which are widely used to train generative image tools and large language models.

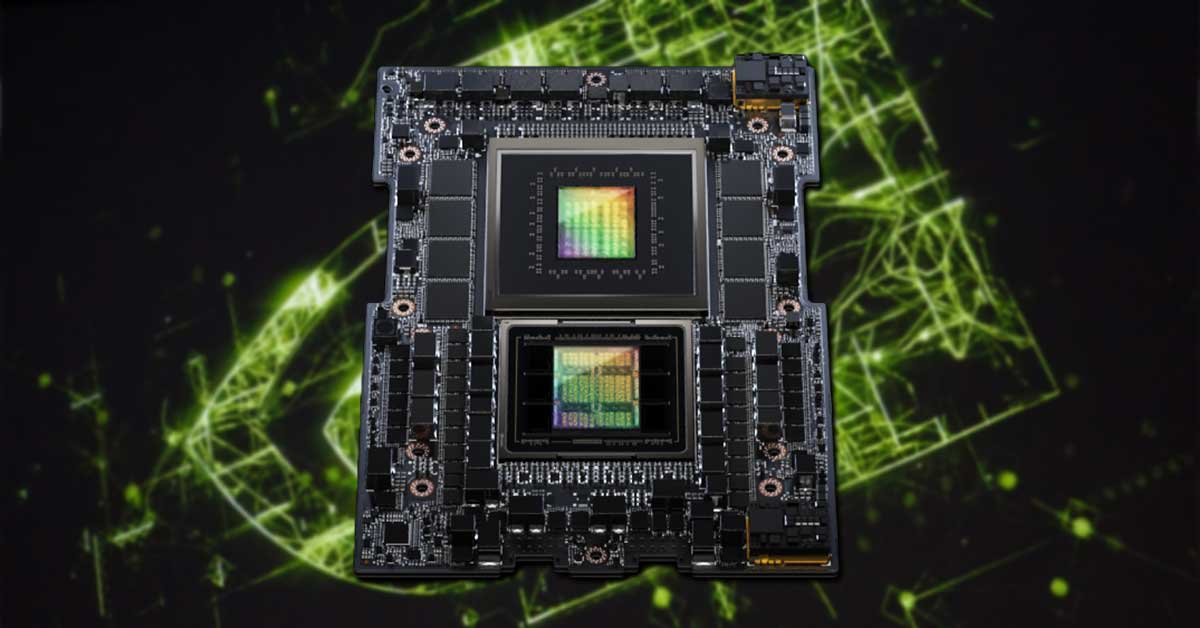

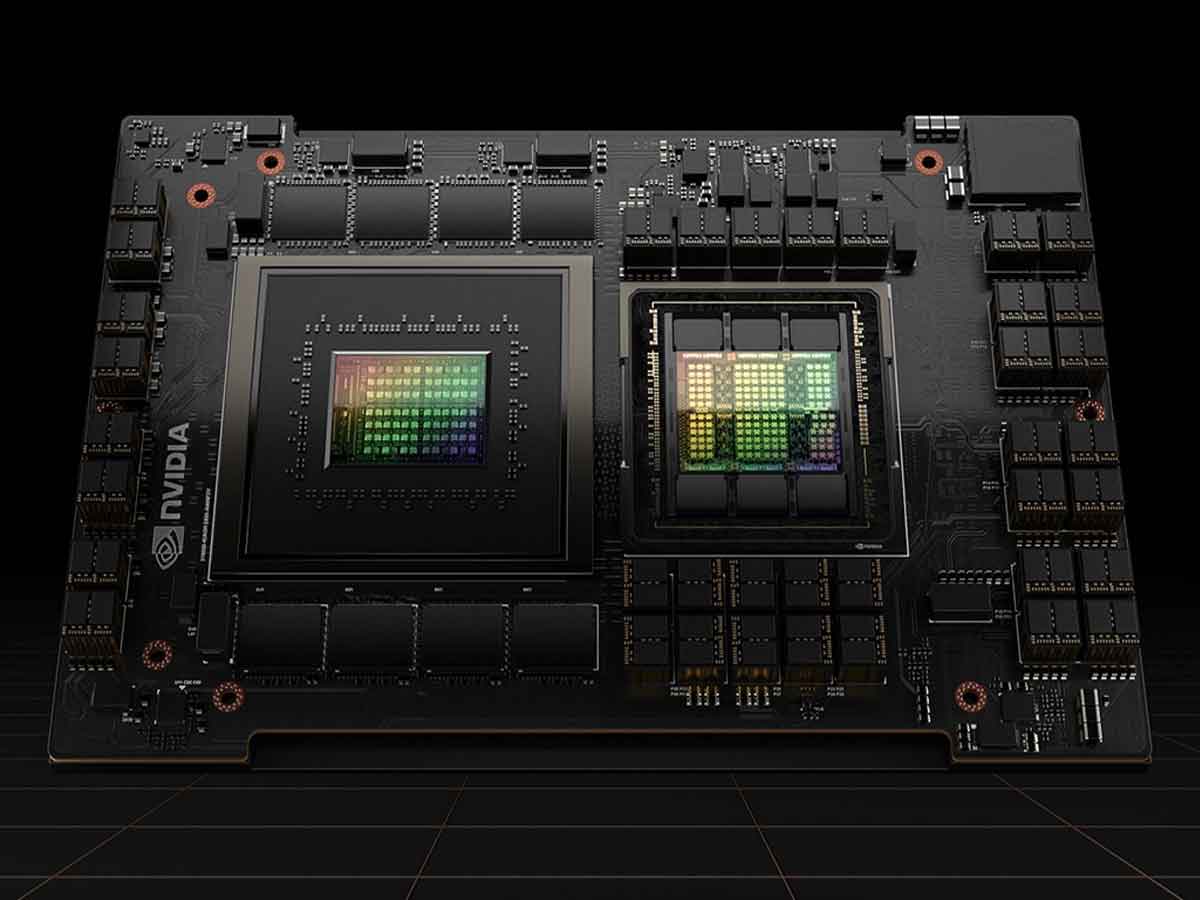

Demand for Nvidia's H100 GPUs has reached unprecedented levels, with some units fetching over $40,000 on eBay. Nvidia recently announced the H200, an AI-focused GPU, as well as the GH200 superchip. The GH200 combines the H200 and its 141GB of HBM3e memory with Nvidia's 72-core, Arm-based Grace CPU and 480GB of LPDDR5X memory on a single integrated module.

Technical Details, Benchmarks & Specifications - Maia 100, Cobalt 100

Maia 100 Specifications

Technical details about Maia 100 are both intriguing and elusive. A 5-nanometer chip with 105 billion transistors, Maia is engineered specifically for the Azure hardware stack.

Microsoft says Maia will power some of the largest internal AI workloads on Azure, including Bing, Microsoft 365, and Azure OpenAI Service.

Cobalt 100 Specifications

Cobalt 100, Microsoft's energy-efficient 128-core chip, is built on Arm's Neoverse Compute Subsystems (CSS). It is optimized for efficiency and performance in cloud-native offerings, and it will power new virtual machines for customers in the coming year. Its Arm architecture also supports Microsoft's sustainability goals, because the chip is designed to optimize "performance per watt" throughout Microsoft's data centers.

Microsoft has kept many technical details of Maia 100 and Cobalt 100 private. It has also not submitted Maia 100 to public benchmark suites such as MLCommons.

Microsoft's History in Silicon Development

Microsoft has more than two decades of experience in silicon development, including chips for its Xbox consoles and chips co-engineered for its Surface devices. Its current custom chip effort began in 2017, when Microsoft started architecting its cloud hardware stack.

The Azure Cobalt CPU is a 128-core chip built on an Arm Neoverse CSS design customized for Microsoft. Its design choices are aimed at optimizing cloud services on Azure.

The Maia 100 AI accelerator, meanwhile, is designed for cloud AI workloads. It is fabricated on a 5-nanometer TSMC process, contains 105 billion transistors, and supports sub-8-bit data types for faster model training and inference.
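Narrower data types matter partly because they shrink the memory needed to hold a model. The back-of-the-envelope sketch below makes that concrete. It assumes a hypothetical 70-billion-parameter model and counts only raw weight storage. It is not based on any published Maia 100 figure. Real sub-8-bit formats also store scale factors, which this ignores.

```python
# Back-of-the-envelope sketch: memory needed just to store model weights
# at different bit widths. PARAMS is a hypothetical model size, not a
# Maia 100 figure; scale factors used by real low-precision formats are ignored.

def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Return weight storage in gigabytes (10**9 bytes)."""
    total_bits = num_params * bits_per_param
    return total_bits / 8 / 1e9

PARAMS = 70_000_000_000  # hypothetical 70B-parameter model

for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(PARAMS, bits):.0f} GB")
```

Under these assumptions, halving the bit width halves the weight storage: 140 GB at 16 bits, 70 GB at 8 bits, and 35 GB at 4 bits. Less data also has to move between memory and compute units on every step.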

Did OpenAI help to design Microsoft's new AI chip?

Collaboration with OpenAI has played a central role in Microsoft's chip development. OpenAI helped design and test the Maia chip. This reflects the close ties between the two companies, whose multibillion-dollar partnership relies on workloads running on Azure.

OpenAI's continued feedback on Maia 100's design builds on work dating back to 2020, when the two companies co-designed an Azure-hosted AI supercomputer.

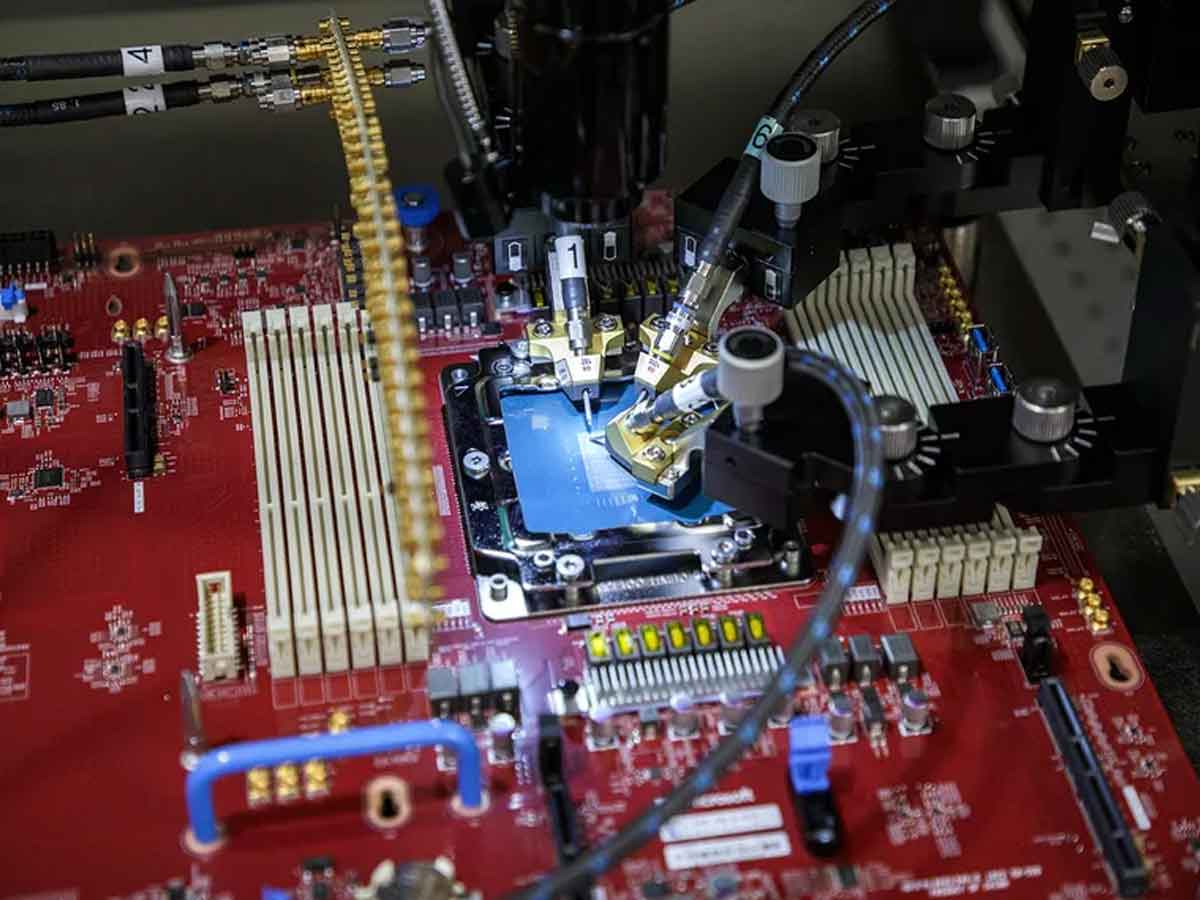

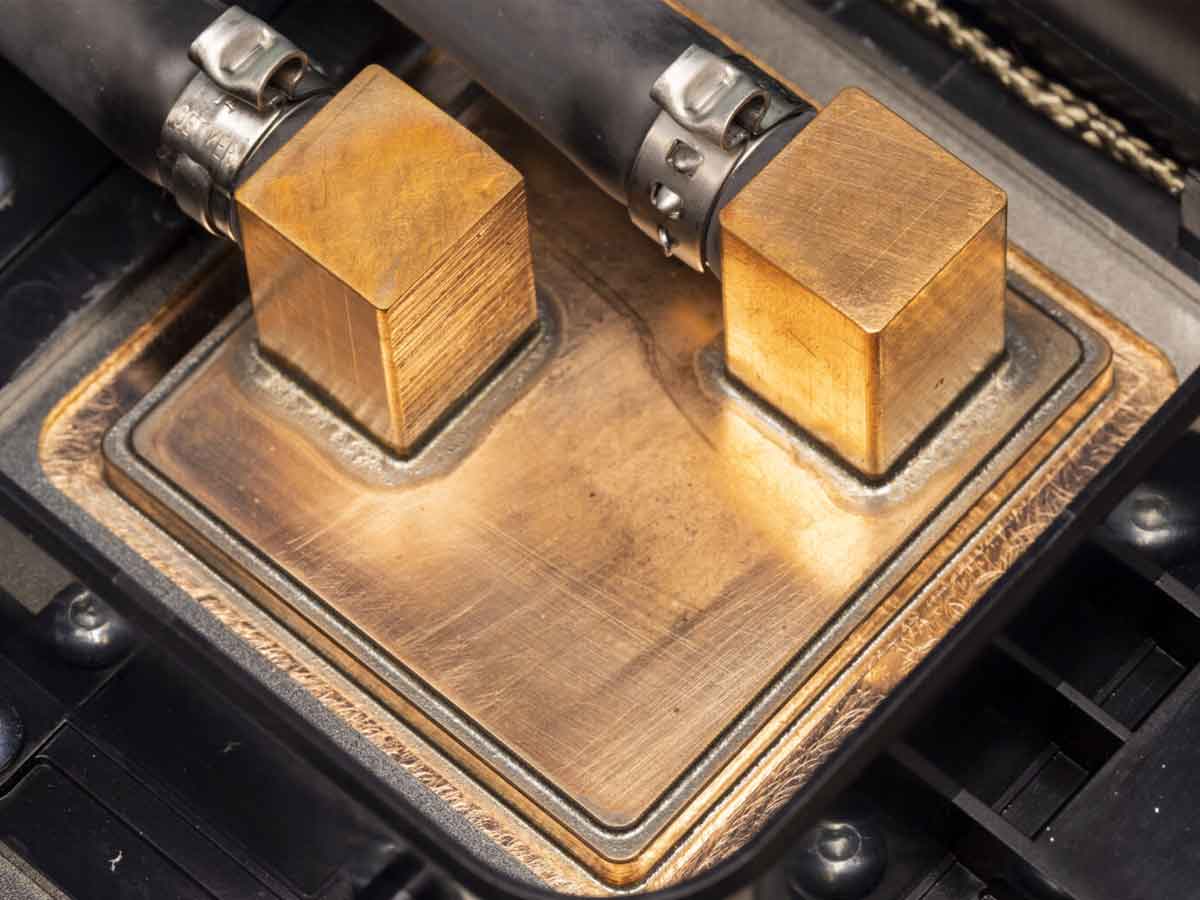

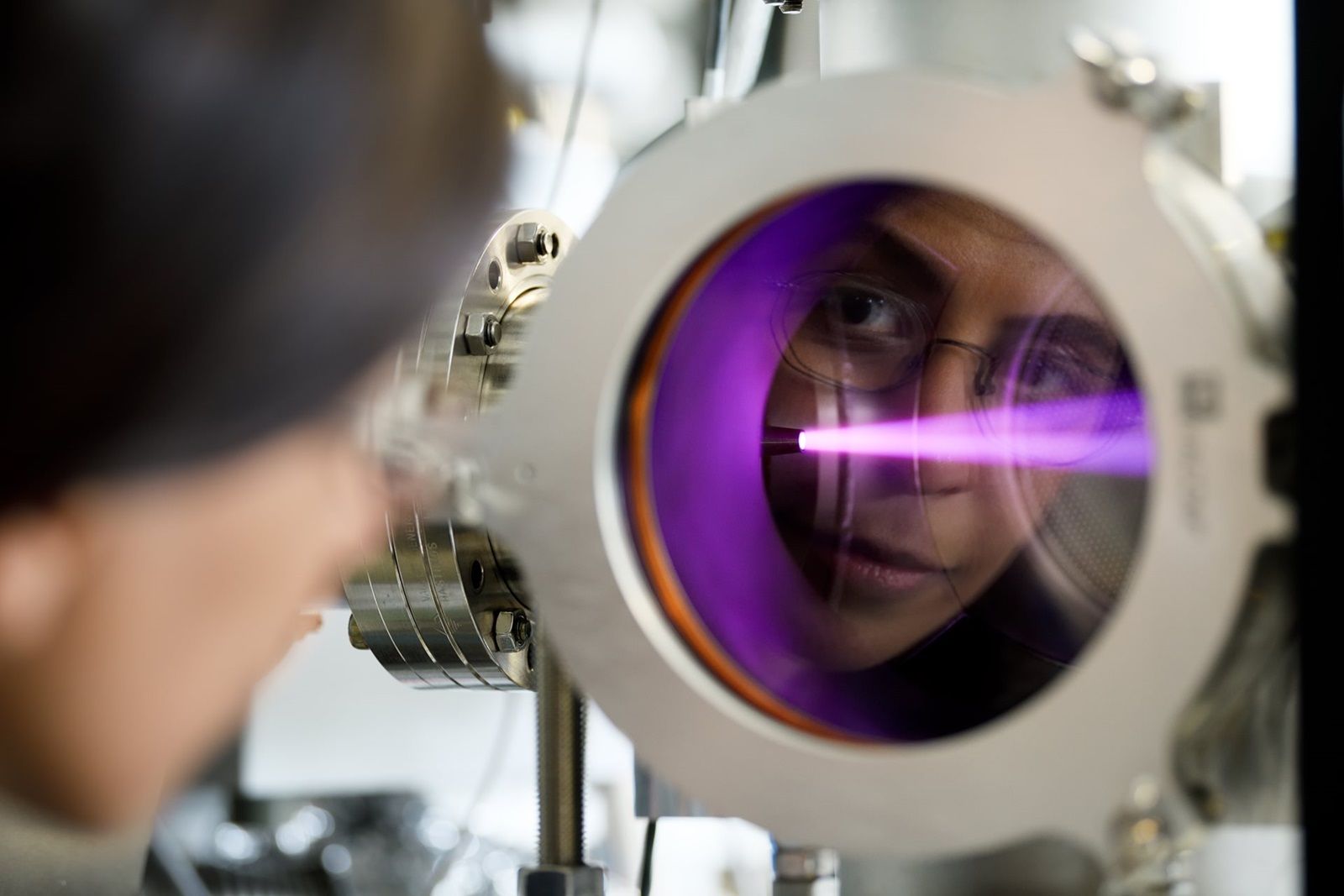

Liquid-Cooled Server Processor - Testing and Deployment

Maia is Microsoft's first complete liquid-cooled server processor. Liquid cooling enables higher server density. Microsoft designed custom server racks and liquid chillers so the system integrates seamlessly into its existing data center infrastructure.

The Azure Cobalt CPU is being tested on workloads such as Microsoft Teams and SQL Server, while Maia 100 is being tested with GPT-3.5 Turbo. Both chips are in the early phases of deployment.

Microsoft's decision to develop its own chips aligns with the broader strategy of diversifying supply chains.

The names Maia 100 and Cobalt 100 hint at Microsoft's commitment to ongoing chip development. Specific roadmaps remain undisclosed, but the implication is clear: Microsoft envisions a series of chips beyond these initial 100 versions.

For cooling, Microsoft pairs each Maia rack with a "sidekick," a unit beside the rack that circulates liquid to carry heat away from the chips. The custom racks and sidekicks underscore the value of a systems approach to infrastructure, which lets Microsoft control every facet, from chip design to data center cooling.

Microsoft's Impact on Pricing and AI Cloud Services

Microsoft hasn't revealed pricing for servers built on the new chips. However, the $30-per-user monthly premium for Copilot for Microsoft 365 hints at how the Maia and Cobalt chips could eventually influence the pricing of AI cloud services.

Despite developing its own chips, Microsoft continues to collaborate with industry giants such as Nvidia, AMD, Intel, and Qualcomm.

With Copilot features still expanding and Bing Chat being rebranded as Copilot, Maia could play a key role in helping Microsoft meet escalating demand for AI chips.

AI Models, GPU Shortages and Challenges

Most companies building AI models and products such as ChatGPT, GPT-4 Turbo, and Stable Diffusion rely heavily on GPUs. The parallel computation capabilities of GPUs are integral to training and running advanced AI models.

Demand for high-performance AI cards, particularly from Nvidia, poses significant challenges for the AI industry. Reports indicate that Nvidia's best-performing AI cards are sold out until 2024.

The head of chipmaker TSMC offered a more pessimistic view, warning that the shortage of AI GPUs could extend into 2025. For its part, Microsoft plans to expand its options with second-generation versions of the Azure Maia AI Accelerator and Azure Cobalt CPU.

Microsoft also said that next year it will offer Azure customers cloud services running on the newest flagship chips from Nvidia and Advanced Micro Devices (AMD). It added that it is testing GPT-4, OpenAI's most advanced model, on AMD's chips.