You might have heard about large language models (LLMs), especially if you're into tech, but do you know what they are and how they're shaping the world of artificial intelligence (AI)? Sounds a bit crazy, right? Let me take you on a journey.

LLMs are AI systems that use deep learning, a branch of machine learning, and extensive amounts of data to comprehend, condense, create, and forecast fresh content.

If you think about it, spoken human languages are the basis for human and technological communication. In AI, language models like LLMs serve a similar purpose, but they're like language wizards on some sort of steroids. They can take input data, interpret it, and generate text that feels like magic, all with the power of machine learning.

That is the basis of LLMs, and now we are about to go back in time because we have to start from the beginning.

What are large language models (LLMs) in artificial intelligence?

A large language model (LLM) is a deep learning model that can perform various natural language processing (NLP) tasks. LLMs use massive data sets to understand, summarize, generate, and predict new content.

So, how do these Large Language Models (LLMs) work? It begins with training. LLMs learn from massive data sets, often called a 'corpus' in AI. So it's basically a huge library of text from books, articles, and the internet.

The training journey is usually described as unsupervised learning, because nobody hand-labels the data. LLMs are exposed to huge amounts of raw text and analyze sentence after sentence, learning the structure of language, the meaning behind words, and the connections between them.

More precisely, the technique is self-supervised learning: the text supplies its own answers. The model predicts the next word in a sentence, checks itself against the word that actually comes next, and in the process learns to understand context and make sense of nuances.
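To make the "predict the next word" idea concrete, here is a minimal sketch in Python. It is a toy word-counting model, not how a real LLM is built: the tiny corpus and the function names are made up for illustration, and a real LLM learns with a neural network over billions of words. What it does show is the self-supervised trick, where the training "labels" come straight from the text itself.

```python
from collections import Counter, defaultdict


def build_training_pairs(text):
    """Turn raw text into (current word, next word) pairs.

    No human labels are needed: the "answer" for each word is simply
    the word that follows it in the text.
    """
    words = text.lower().split()
    return list(zip(words, words[1:]))


def train_bigram_model(pairs):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for current_word, next_word in pairs:
        counts[current_word][next_word] += 1
    return counts


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical mini corpus, standing in for "a huge library of text".
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram_model(build_training_pairs(corpus))

print(predict_next(model, "the"))  # cat
print(predict_next(model, "on"))   # the
```

Here "the" is followed by "cat" twice and by "mat" and "fish" once each, so the model guesses "cat". An LLM plays the same guessing game, just with far more context than a single previous word.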

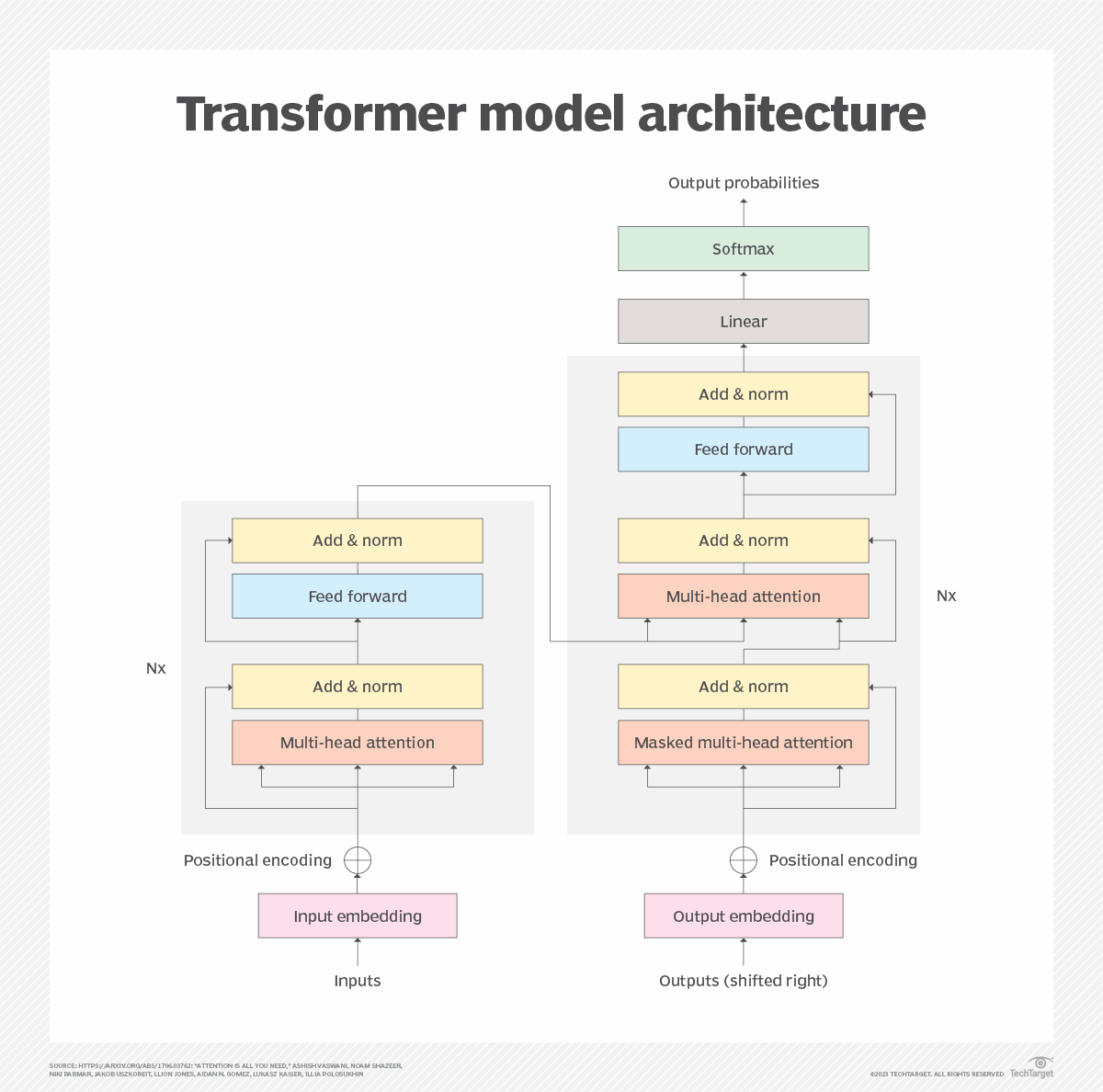

Here's where the magic kicks in: LLMs rely on a special type of neural network called a transformer. Transformers can look at the words in a passage in parallel rather than strictly one at a time, which lets LLMs process vast amounts of text quickly and efficiently.

The core of this process is called 'self-attention'. LLMs use self-attention mechanisms to determine how different parts of a text relate to each other. This understanding of context is what sets them apart. They get why the end of a sentence connects to the beginning, how sentences in a paragraph are related, and the whole web of language intricacies.
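If you're curious what self-attention looks like in code, here is a stripped-down sketch. It makes some loud assumptions: the two-number "embeddings" are invented for the example, and the queries, keys, and values are just the input vectors themselves, whereas a real transformer first passes them through learned weight matrices. The core idea still holds: every token scores every other token, the scores become weights that sum to 1, and each token's output is a weighted blend of all the tokens.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Simplified self-attention over a list of equal-length vectors.

    Assumption: queries, keys, and values are the input vectors
    themselves. Learned projection matrices are omitted.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this token against every token (including itself).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Blend all token vectors, weighted by how relevant each one is.
        blended = [
            sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights


# Made-up 2-dimensional "embeddings" for three tokens.
tokens = ["river", "bank", "money"]
embeddings = [[1.0, 0.0], [0.7, 0.7], [0.0, 1.0]]

outputs, weights = self_attention(embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how much one token "pays attention" to every token in the sequence. In this toy setup, "river" leans more on itself and "bank" than on "money", because its vector points in a more similar direction.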

Once they're all trained up, LLMs can generate responses. But remember, it's not magic; it's a blend of input data, algorithms, and neural networks.

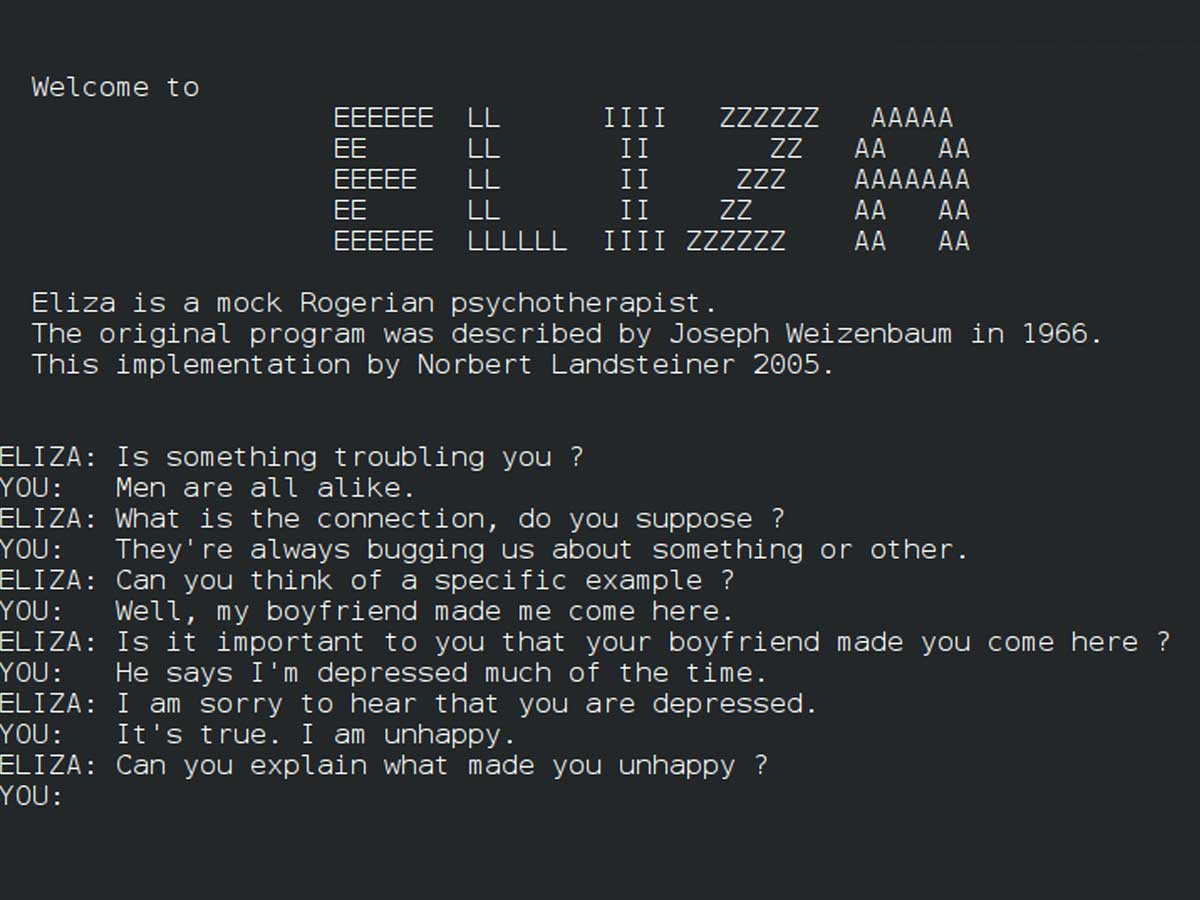

Eliza: The First Language Model

Eliza, often seen as the first-ever AI language model, was developed at MIT starting in 1964. In my opinion, Eliza basically laid the path for present and future language models.

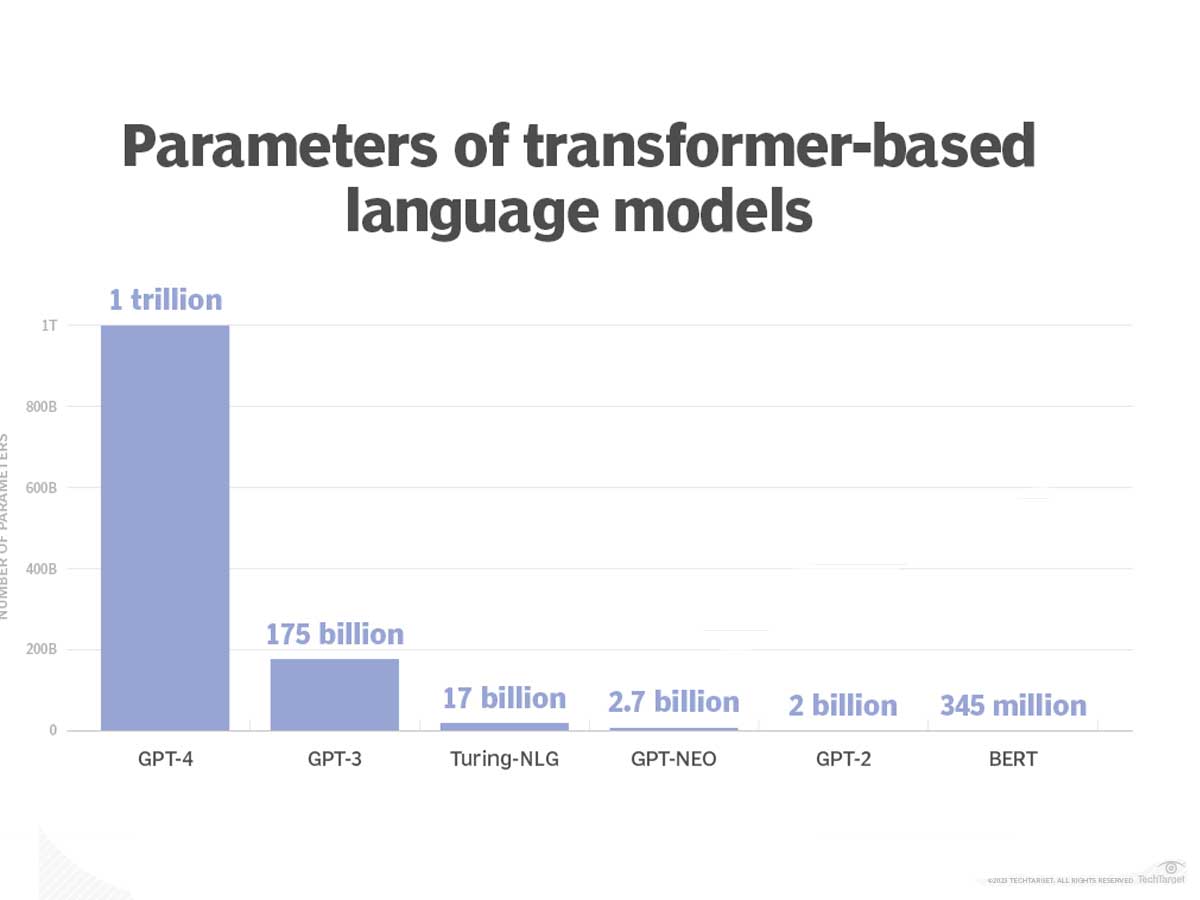

Fast forward to 2017, and the scene changed with the arrival of the Transformer architecture, which powers modern Large Language Models (LLMs). These models are packed with parameters, often billions of them.

Some of these LLMs gained the "foundation models" title, a term coined by the Stanford Institute for Human-Centered Artificial Intelligence in 2021.

If you want to read more about transformers, you can read our Artificial General Intelligence article or our Google DeepMind AlphaMissense article.

What are large language models used for?

Large Language Models (LLMs) are versatile and can do various Natural Language Processing (NLP) tasks.

One of their main abilities is text generation. You give them a prompt or a question, and they can craft detailed responses, write essays, or even compose poems.

They're also language wizards when it comes to translation. LLMs can make it happen whether you want to turn English into French, Mandarin into Spanish, or any language combo you can dream of. That's the idea behind tools like YouTube's AI-powered dubbing service and Spotify's AI voice translation tool.

LLMs excel at content summarization. They can sift through mountains of text and extract the most critical information, saving you time and effort.

Sentiment analysis? They're pros at that, too. LLMs can gauge the sentiment in a text, whether positive, negative, or somewhere in between. That's invaluable for businesses that want to understand what people say about their products or services.

But wait, there's more! They're the brains behind conversational AI and chatbots like ChatGPT. You may have chatted with Bard or similar AI-driven entities online, assisting you with queries, guiding you through websites, analyzing input data, or even providing some friendly banter.

Real-world examples? You got it. Think of ChatGPT by OpenAI or the powerful GPT-4. Google has its own AI linguist, LaMDA, while Hugging Face hosts BLOOM and XLM-RoBERTa. Nvidia brings NeMo, and there's a whole bunch of others out there, each with unique talents. In the future, you might not even need any programming language to code.

AI models are now being used in healthcare as well. We previously talked about the Chan Zuckerberg Initiative (CZI) and how clinics and hospitals are already testing the potential of AI for medical purposes with Med-PaLM 2.

Advantages and Limitations of LLMs

Large Language Models (LLMs) are like the superhero sidekicks of the artificial intelligence world. They come with incredible strengths but also have their Kryptonite.

Let's start with the upside. LLMs offer a truckload of advantages. First up, they're flexible: one model can handle many different tasks. They're also extensible: you can build on top of them to create customized AI that suits your needs.

Performance-wise, LLMs are lightning-quick, typically delivering responses within seconds. You don't have to wait long for them to think; they're on it.

These superhero-like abilities come with their own set of challenges. First, there's the issue of development costs. Building, fine-tuning, and maintaining these AI powerhouses is a costly undertaking.

Operational costs are another concern. Running LLMs demands serious computational power. Think server farms humming away 24/7. That electricity bill adds up.
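To get a feel for why the hardware bill climbs so fast, here is a rough back-of-the-envelope sketch. The model sizes are hypothetical, and the calculation assumes each parameter is stored as a 16-bit number (2 bytes). It only counts the memory needed to hold the weights, so real-world requirements would be higher.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter=2):
    """Approximate memory needed just to hold the model weights.

    Assumption: 2 bytes per parameter (16-bit floats). This ignores
    activations, caches, and any training-time overhead.
    """
    return num_parameters * bytes_per_parameter / 1e9


# Hypothetical model sizes, chosen only for illustration.
for billions in (1, 7, 70):
    gigabytes = weight_memory_gb(billions * 1_000_000_000)
    print(f"{billions}B parameters -> about {gigabytes:.0f} GB of weights")
```

Under these assumptions, a 70-billion-parameter model needs around 140 GB for its weights alone, which is more than a single typical consumer graphics card can hold.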

Now, let's talk about the elephant in the room: bias. LLMs can sometimes churn out biased or unfair content.

This happens because they're trained on vast amounts of unlabeled data from the internet, which isn't always sunshine and rainbows. They may give answers that are unfair or discriminatory, and that's not something we want.

Then there's the concept of hallucination. It's not about seeing pink elephants but about LLMs making stuff up. They can generate information that sounds good but is entirely fabricated. It's not ideal if you're looking for facts. And there's the concern about model collapse, sometimes called AI cannibalism, too.

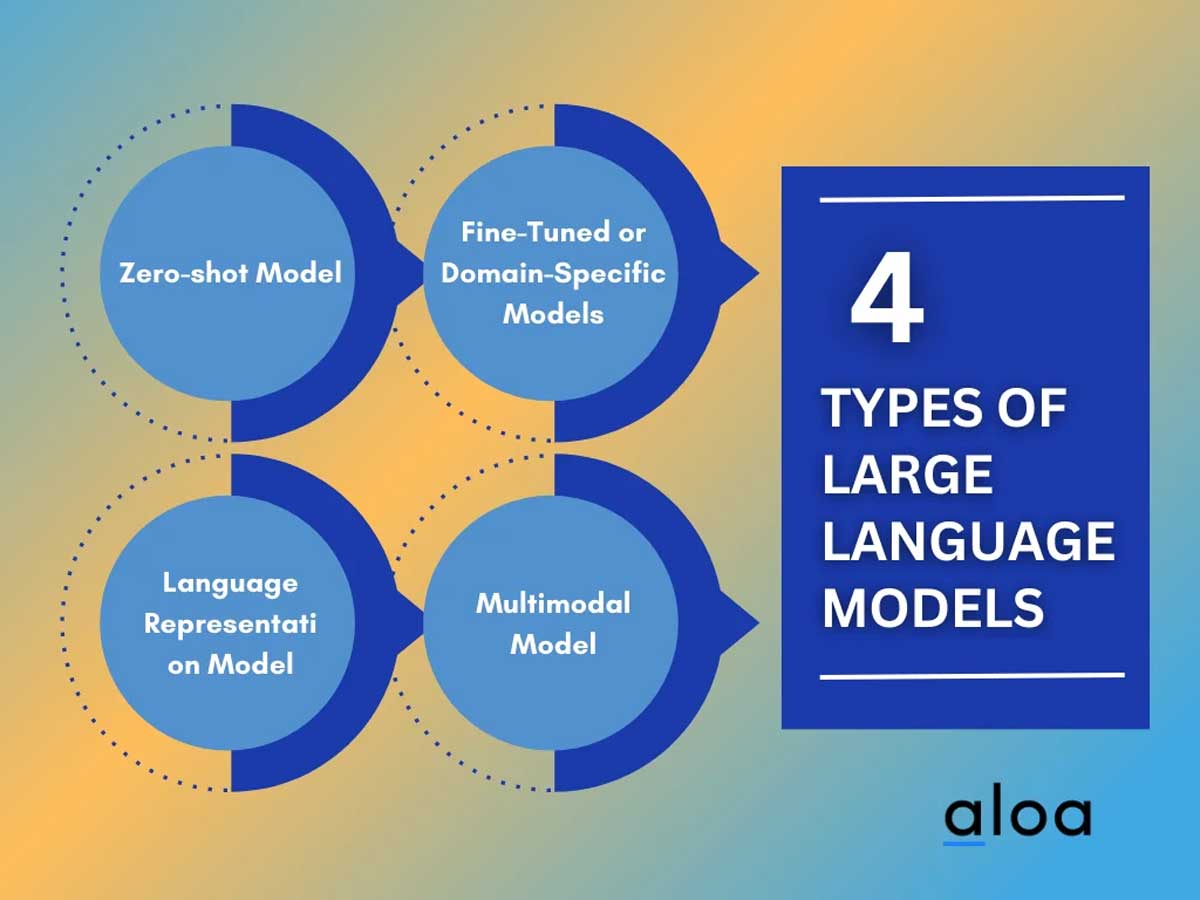

What are the different types of large language models?

When it comes to Large Language Models (LLMs), there are a few different types for different tasks. You might have already used some of them, because we're in an AI boom and generative AI is very popular.

1. Zero-shot Models: These LLMs are the daredevils of the AI universe. They don't need specific training for tasks. You can throw them a question, and they'll take a swing at it.

2. Fine-tuned Models: These models are specialized. They undergo targeted training to excel in specific tasks.

3. Language Representation Models: These LLMs are all about understanding language at its core. They're like the grammar gurus of AI.

4. Multimodal Models: These models are the rockstars of LLMs. They're not limited to text; they can handle images, audio, and more.

So, it's a bit like having a toolkit with different tools. You pick the one that suits the job and your input. Whether it's a quick answer, specialized knowledge, or just making sure your text sounds good, there's an LLM for the job.

The future of large language models in artificial intelligence

Alright, folks, it is time to peek into what's in store for Large Language Models (LLMs) and how we'll use them in the future. Trust me, it's exciting!

LLMs are on a quest for constant enhancement. They'll keep getting smarter, which means more accurate and reliable information for us. LLMs are on the brink of revolutionizing business applications, too.

But here's the catch – the accuracy of LLMs matters a lot. No one wants misleading information. So, the focus is on addressing data accuracy and bias. Developers are working hard to ensure that LLMs give you trustworthy information.

More efficient LLMs and domain-specific LLMs are also becoming a thing now.

LLMs encounter cybersecurity challenges, much like everything else on the internet. As our dependence on LLMs grows, the imperative to enhance their security intensifies.

They can be susceptible to vulnerabilities, including bugs and manipulation, underscoring the critical need to safeguard sensitive information.

AI governance is a thing now, and it's all about creating rules and standards for the AI world. Like Uncle Ben in Spider-Man said, "With great power comes great responsibility." Governments and organizations worldwide are working to ensure that AI is used safely and fairly.