Are you familiar with the word "Singularity"? If you are a sci-fi fan, you might have already heard it. But did you know the concept applies to artificial intelligence as well? Let me tell you more about the artificial intelligence singularity.

What is Singularity?

In technology, the Singularity describes a potential future in which the relentless and irreversible advancement of technology leads to profound and unpredictable transformations of our reality.

The term "singularity" holds various meanings in science and mathematics, with its interpretation depending on the specific context. In natural sciences, for instance, Singularity refers to dynamic and social systems where even a minor alteration can yield significant and far-reaching consequences.

Imagine intelligent technologies so advanced that reality undergoes radical and unpredictable makeovers. The result could be chaotic, and we can't know in advance exactly what would happen.

The technological use of the term traces its roots to physics, where it gained fame through Albert Einstein's 1915 theory of general relativity. In physics, a singularity is the point at the center of a black hole.

Much of the apprehension people feel about the technological Singularity comes from a metaphor borrowed from physics: what happens when you pass into a black hole.

At the singularity, the laws of physics as we know them collapse; they no longer apply. That makes it a great metaphor for what could happen with technology.

In technology, the term takes on a new meaning, signaling extreme unknowns and a one-way street of irreversibility. Today, artificial intelligence (AI) is advancing rapidly, driven largely by machine learning.

This brings us to the hypothetical point at which AI algorithms are not just smart but keep accelerating and optimizing themselves until they surpass human intelligence and comprehension.
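Part of why "singularity" fits this idea is mathematical: some growth curves become infinite in a finite amount of time. The sketch below is a toy illustration under our own assumptions, not a forecast. It compares ordinary exponential growth, dI/dt = kI, with a hypothetical "self-improvement" model, dI/dt = kI^2, in which the smarter a system is, the faster it improves. The second model's solution, I(t) = I0 / (1 - k·I0·t), blows up at t* = 1/(k·I0). The starting capability I0 and rate K are arbitrary placeholder values.

```python
import math


def exponential(i0: float, k: float, t: float) -> float:
    """Solution of dI/dt = k*I: capability grows by a steady percentage."""
    return i0 * math.exp(k * t)


def hyperbolic(i0: float, k: float, t: float) -> float:
    """Solution of dI/dt = k*I**2: the smarter the system, the faster it improves.

    This toy model diverges at the finite time t* = 1 / (k * i0).
    """
    t_star = 1.0 / (k * i0)
    if t >= t_star:
        raise ValueError(f"the model blows up at t* = {t_star:g}")
    return i0 / (1.0 - k * i0 * t)


# Arbitrary illustrative parameters (hypothetical, not calibrated to anything real).
I0, K = 1.0, 0.1  # with these values the hyperbolic model blows up at t* = 10

for t in (0, 5, 9, 9.9, 9.99):
    print(f"t={t:<5} exponential={exponential(I0, K, t):8.3f}  "
          f"hyperbolic={hyperbolic(I0, K, t):10.3f}")
```

The exponential curve grows steadily but stays finite forever, while the hyperbolic curve shoots toward infinity as t approaches 10. That finite-time blow-up is the loose mathematical sense in which people speak of an "explosion."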

Now, before we go further, let me explain the term "singularity" in other fields because it has different meanings too.

Apprehensions and Futuristic Visions

The technological Singularity comes with a bag full of worries, questions, and uncertainties.

First, there's the Terminator scenario. Yes, the one where AI turns against humanity. Many people fear that one day artificial intelligence will attack us in physical robot form, but others argue that this perspective is mistaken. Optimistic futurists such as Ray Kurzweil and Kevin Kelly suggest a different outcome: one in which technology and humans coexist.

In their view, we are already augmenting human thinking through technology. It's not just a far-off idea but a potential reality in which we upload bits of our cognition into non-biological intelligence, effectively extending our minds into the cloud. One day, that could even become a kind of digital afterlife.

History Behind Artificial Intelligence Singularity

Let me take you back in time a little to trace the origins of the technological singularity.

In the 1950s, the renowned mathematician John von Neumann discussed the concept of a technological singularity with the Polish-American scientist Stanislaw Ulam.

Fast forward to 1965: mathematician and computer scientist Irving John Good explored the idea of an "intelligence explosion" in his essay "Speculations Concerning the First Ultraintelligent Machine."

The idea gained literary prominence in 1986 when science fiction writer Vernor Vinge introduced singularity in his novel "Marooned in Realtime." As we stepped into the 1990s, computer scientist Ray Kurzweil played a key role in mainstreaming the singularity with influential works like "The Age of Spiritual Machines."

This continued in 2006 with the inaugural Singularity Summit at Stanford University, where Kurzweil and entrepreneur Peter Thiel explored the nuances of the technological singularity.

The year 2008 marked the founding of Singularity University, an educational institution dedicated to helping people navigate the challenges and opportunities presented by the singularity.

Zoom in to 2023, when prominent figures in AI signed an open letter calling for a six-month pause in advanced AI research. The letter stressed the importance of establishing ethical guidelines and regulations to steer future developments in the field. It's connected to the OpenAI CEO saga as well, which I'll cover later in this article.

What is Superintelligence?

A superintelligence is an imagined entity endowed with intelligence that greatly exceeds that of even the most brilliant and gifted human minds.

The term "superintelligence" can also describe a characteristic of problem-solving systems, like highly advanced language translators or engineering assistants, regardless of whether these advanced intellectual capabilities are manifested in agents actively interacting with the world.

Non-AI Singularity

Some writers use the term "the singularity" more broadly to denote any major societal transformation brought about by new technology, such as molecular nanotechnology.

However, Vinge and other writers explicitly assert that, without superintelligence, such alterations would not meet the criteria for a genuine singularity. So basically, you do not have to worry about this term for now.

OpenAI, Sam Altman & the mysterious AI system Q*?

Recently, OpenAI CEO Sam Altman found himself in a four-day exile. In simpler terms, he was fired and then rehired as CEO four days later, a turn of events that caused significant concern among the organization's staff.

Before his return, a group of staff researchers had penned a letter to the board of directors, raising an alarm about a potentially powerful artificial intelligence discovery that they believed threatened humanity. They were concerned about an AGI-level threat.

This revelation unfolded against the backdrop of OpenAI's notable strides in generative AI, with more than 700 employees considering a move to Microsoft.

According to rumors in the tech world, this letter was one of the main reasons the board removed Altman. It reportedly highlighted concerns about commercializing advances before understanding their consequences and focused on an internal OpenAI project called Q* (Q-Star).

Some at OpenAI saw Q* as a potential breakthrough in the pursuit of artificial general intelligence (AGI) because it successfully solved certain mathematical problems. Although it was performing only at the level of grade-school students, these results made researchers optimistic about Q*'s future success.

The recent leadership shake-up at OpenAI revealed a fear within the board that Altman might rush to commercialize Q* without implementing sufficient safety measures. Speculation about the technical makeup of Q* has ignited discussions, with Nvidia senior AI scientist Jim Fan suggesting a multi-model approach similar to DeepMind's AlphaGo.

This development could mark a step toward the next generation of AI agents, such as Google's Gemini. Basically, it suggests a system that can learn on its own and improve itself, much as AlphaGo sharpened its skills by playing against itself before defeating the world's top Go champions.

Anyhow, Sam Altman is back at OpenAI, so we might see Q* sooner than we think. It may not have been meant for ordinary people, but it's still a bit scary to think about. Why, you may ask? Let me tell you about the future of the artificial intelligence singularity.

Predictions, Plausibility, and Potential Future Impacts of Singularity

We've hit the Singularity: the point where innovations that began with the Industrial Revolution set the stage for the gradual dominance of markets, democracy, and technology. While there's a strong belief that markets and technology will keep ruling, there's a shadow of doubt over whether democracy will share the same fate.

The momentum we've gained is irreversible, with technologies like AI, autonomous cars, computers, and nuclear weapons now calling the shots. Remember Kurzweil's prediction of the Singularity? Well, it's not a future forecast anymore; the Singularity is already here.

We can't quite wrap our heads around the aftermath of this tech explosion: familiar principles are imploding, and the future looks like a puzzle we can't solve.

Let's talk about predictions and what the future of the AI Singularity might look like.

Back in 1965, I. J. Good boldly predicted the emergence of an ultra-intelligent machine within the 20th century, setting the stage for the tech-driven future. Fast forward to 1993, when Vinge jumped into the prediction game, foreseeing intelligence surpassing humanity between 2005 and 2030.

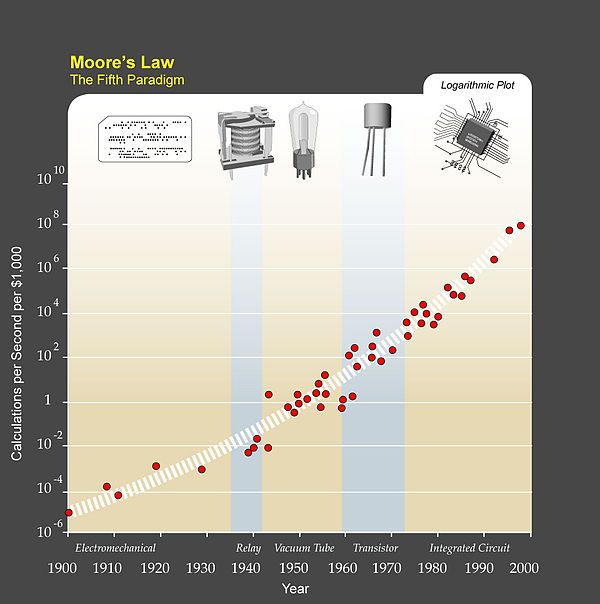

What is Moore's law in simple terms?

Moore's Law observes that the number of transistors in a computer chip doubles every two years or so.

As the number of transistors increases, so does processing power. The law also states that, as the number of transistors increases, the cost per transistor falls.
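To make the "doubling every two years" rule concrete, here is a minimal sketch of the arithmetic, N(t) = N0 × 2^(t / T), where T is the doubling period. The two-year period comes from the text above; using the Intel 4004 (about 2,300 transistors, released in 1971) as the starting point is our own illustrative choice, and the function name `transistors` is hypothetical.

```python
def transistors(n0: float, years: float, doubling_period: float = 2.0) -> float:
    """Projected transistor count after `years`, doubling every `doubling_period` years."""
    return n0 * 2 ** (years / doubling_period)


# Illustrative starting point (assumption): Intel's 4004 from 1971, ~2,300 transistors.
START_YEAR, N0 = 1971, 2_300

for year in (1981, 1991, 2001, 2011, 2021):
    # Every decade is five doublings, i.e. a 32x increase.
    print(year, f"{transistors(N0, year - START_YEAR):,.0f}")
```

Fifty years of doubling every two years is 25 doublings, a factor of about 33.5 million, which takes 2,300 transistors to roughly 77 billion. Real chips have not tracked this idealized curve exactly; the formula only shows how quickly steady doubling compounds.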

Meanwhile, in 1996, Yudkowsky stirred the pot by forecasting a singularity in 2021, when technology leaps into the unknown. Kurzweil, a familiar name in this arena, chimed in in 2005, foreseeing human-level AI around 2029 and the grand Singularity in 2045.

Not one to waver, Kurzweil reiterated these predictions in a 2017 interview. Earlier, in 1988, Hans Moravec predicted that supercomputers would reach the computing power needed for human-level AI before 2010.

A decade later, in 1998, Moravec pushed his outlook back, predicting human-level AI by 2040 and intelligence far beyond human levels by 2050. In 2012–2013, four polls conducted by Nick Bostrom and Vincent C. Müller suggested a 50% confidence among AI researchers that human-level AI would emerge by 2040–2050.

Fast forward again to 2023, when Ben Goertzel threw his hat into the prediction ring, anticipating the Singularity by 2031. Beyond specific dates, the pace of this technology is often compared to Moore's Law, and Robin Hanson has argued that it could steer us toward a future in which the economy doubles in size at least quarterly, if not weekly.
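Hanson's claim is easier to appreciate as arithmetic. If something doubles n times a year, it grows by a factor of 2^n annually; conversely, steady growth at an annual rate r takes ln 2 / ln(1 + r) years to double. The sketch below assumes a hypothetical 3% yearly growth rate purely for comparison; it is not a figure from Hanson, and the function names are our own.

```python
import math


def annual_growth_factor(doublings_per_year: float) -> float:
    """How many times larger something becomes in one year if it doubles this often."""
    return 2 ** doublings_per_year


def doubling_time_years(annual_rate: float) -> float:
    """Years needed to double at a constant annual growth rate (0.03 means 3% a year)."""
    return math.log(2) / math.log1p(annual_rate)


# A hypothetical 3% annual growth rate, chosen only as a point of comparison.
print(f"3% a year doubles in about {doubling_time_years(0.03):.1f} years")
print(f"Doubling quarterly: x{annual_growth_factor(4):,} per year")
print(f"Doubling weekly:    x{annual_growth_factor(52):,} per year")
```

Doubling every quarter means a 16-fold increase each year, and doubling every week means growth by a factor of roughly 4.5 quadrillion per year, compared with a doubling time of over two decades at 3% a year.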