Last Tuesday (November 7), Microsoft filed a patent for an AI therapist app. This could go either way, so there are a few things to think about before we come to a conclusion.

AI is transforming the medical and health industry. Medical AI chatbots such as Med-PaLM 2 already exist, and big names in tech are joining the AI and health game too, like Priscilla Chan and Mark Zuckerberg with their Chan Zuckerberg Initiative (CZI), and Google DeepMind with AlphaMissense.

But to offer emotional support, this AI therapist has to dive deep into the intricacies of human emotion. It has to go beyond simple conversation: it must analyze feelings and store extensive user information to build a comprehensive "memory" of users' lives and emotional triggers.

According to the filing, which was first spotted by the website MSPoweruser, this AI isn't an emergency service. Instead, it is a general space for users to talk about their lives. It gathers information and picks up on emotional cues, then uses them to ask questions and suggest ways users might navigate their emotional hurdles.

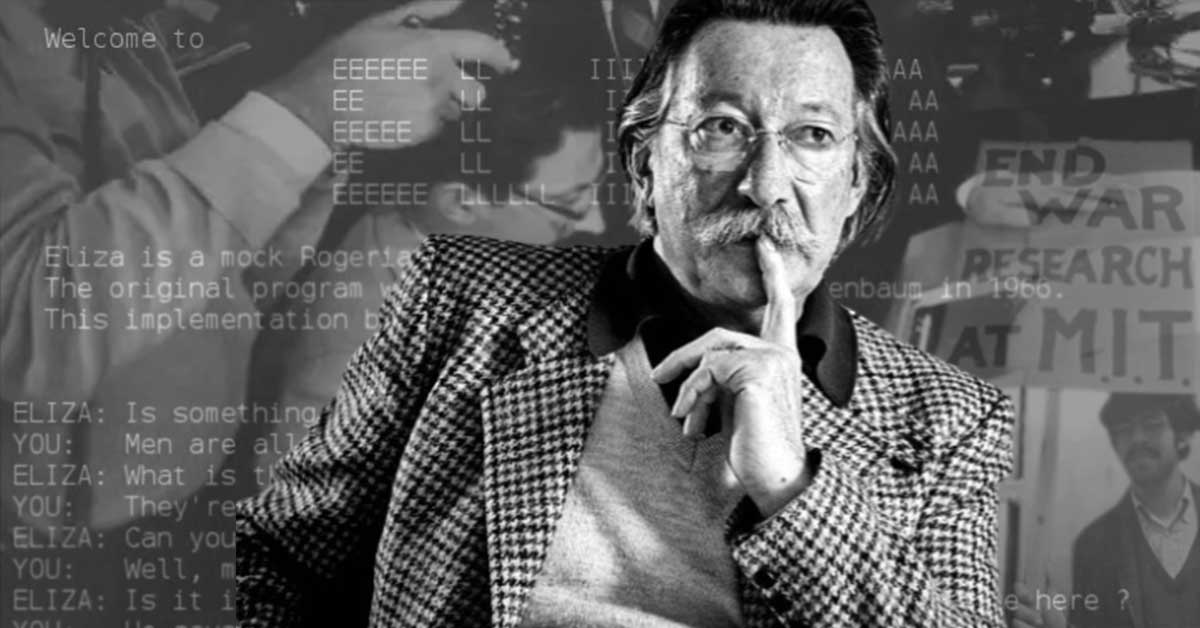

This is not the first time that AI has been applied to mental healthcare.

Fun fact: the first AI chatbot, ELIZA, created by Joseph Weizenbaum, was designed to imitate a psychotherapist.

More recent examples include Woebot and Tessa, the National Eating Disorders Association's chatbot service. But these chatbots, and the use of AI for emotional support in general, have not been without their challenges.

Some interactions have done more harm than good, and there are concerns about the distress users may feel when these connections are cut off. Cases like the Tessa controversy at NEDA, where the chatbot made headlines for giving bad advice, are a black mark on the AI industry as a whole.

AI's Misjudgments in Mental Health Support

The National Eating Disorders Association (NEDA) removed its AI chatbot, Tessa, from its helpline because it was giving out bad advice about eating disorders. Tessa was telling people to lose weight, count calories, and measure body fat, all of which could make an eating disorder worse.

In April, there was the case of a Belgian man who was deeply anxious about climate change and took his own life after turning to a chatbot for help.

In 2021, a 19-year-old tried to attack the late Queen Elizabeth II with a crossbow. And get this: his AI girlfriend had supported and encouraged the whole dangerous plan. Pretty wild, isn't it?

What Could Go Wrong with an AI Therapist?

Innovations like this have huge potential, but they are full of challenges. Microsoft had better think this through before it even starts building. Basically, it's a minefield of liabilities. The AI needs almost bulletproof guardrails and parameters, because it deals directly with human health, emotions, and life itself.

Can AI truly understand the emotional nuance that is crucial in mental health situations? And how do the empathy and support offered by a bot compare with those of a human therapist?

When we talked about AI taking over jobs in a previous article, I mentioned that some jobs will never be replaced by AI because they need a human touch. Therapy is one of those jobs. Without that human touch, so much could go wrong.

What Will Happen to the Microsoft AI Therapist?

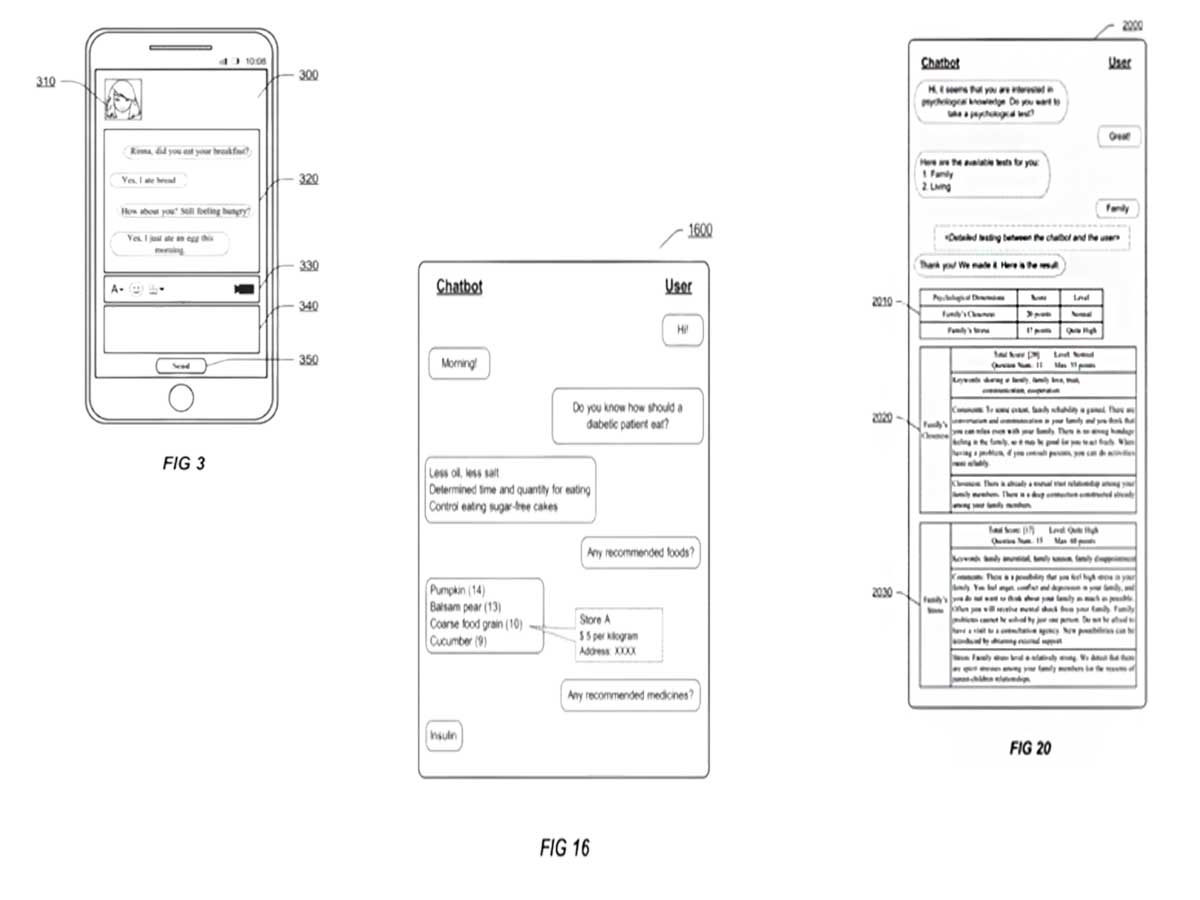

Have you ever watched the movie "Her" (2013)? This latest patent filing, which you can explore for yourself on the United States Patent and Trademark Office website, is very similar to that movie.

The filing describes an AI that builds a user profile from emotional cues, using a structure made up of a chat window, a processing module, and a response database.
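To make that structure a bit more concrete, here is a very rough Python sketch of how a chat message might pass through a processing step and a response database while a user profile is built up. Everything in it is an assumption made for illustration: the names `UserProfile`, `detect_emotion`, `respond`, the keyword lists, and the canned replies do not come from the patent, and a real system would use a trained model rather than keyword matching.

```python
import re
from dataclasses import dataclass, field

# Hypothetical keyword lists standing in for a real emotion-analysis model.
EMOTION_KEYWORDS = {
    "sad": ["sad", "lonely", "down"],
    "anxious": ["worried", "anxious", "scared"],
}

# Hypothetical "response database": one canned reply per detected emotion.
RESPONSE_DB = {
    "sad": "That sounds hard. Do you want to talk about what happened?",
    "anxious": "What is worrying you the most right now?",
    "neutral": "Tell me more about your day.",
}


@dataclass
class UserProfile:
    """Running record of the emotional cues seen for one user."""
    emotions: list = field(default_factory=list)


def detect_emotion(message: str) -> str:
    """Processing step: map a chat message to a coarse emotion label."""
    words = re.findall(r"[a-z']+", message.lower())
    for emotion, keywords in EMOTION_KEYWORDS.items():
        if any(keyword in words for keyword in keywords):
            return emotion
    return "neutral"


def respond(profile: UserProfile, message: str) -> str:
    """Chat step: update the profile, then look up a reply."""
    emotion = detect_emotion(message)
    profile.emotions.append(emotion)
    return RESPONSE_DB[emotion]


profile = UserProfile()
print(respond(profile, "I feel so lonely today."))
print(profile.emotions)
```

Even in a toy like this, the profile keeps growing with every message, which is exactly where the storage and privacy questions start.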

The AI has different modules designed to meet specific user needs, including answering questions, analyzing emotions, creating memories, and conducting psychological tests. These modules are going to store data and user information for at least some length of time, so user privacy is kind of a gray area. Plus, talking with an AI doesn't give you doctor-patient confidentiality, right?

And that's not all. The AI therapist bot might also need to be obligated to flag anything dangerous or seriously concerning to the authorities or to specialized health services.

But even with those safety measures, who knows if these AI systems can really understand the emotional complexity needed to handle tough mental health situations? What if it messes up and says something off to someone who's already struggling? Or worse, what if it just doesn't say enough to help? It's a whole can of worms, isn't it? What do you think? Would you be willing to talk to an AI therapist app?