Emotion AI, also known as affective AI or affective computing, is a fascinating branch of Artificial Intelligence (AI) that focuses on analyzing human emotions and behaviors. Emotion AI uses machine learning algorithms to interpret human emotional signals in various forms, including text, audio, and video.

How does a machine understand human emotions?

Emotion AI scrutinizes, reacts to and simulates human emotions using natural language processing, sentiment analysis, voice emotion AI, and facial movement analysis.

Emotion AI doesn't discriminate; it detects and interprets human emotional signals in text, audio, video, or combinations of these. It's like having a digital psychologist who can analyze your emails, hear the tone of your voice, or even read your facial expressions through a camera.

Why does this matter? Because understanding human emotions has become a game-changer, especially in fields like marketing and finance.

The market size for emotion recognition is poised to surge by 12.9 percent by 2027. This isn't just about technological innovation; it's a seismic shift expected to reverberate through healthcare, insurance, education, and transportation.

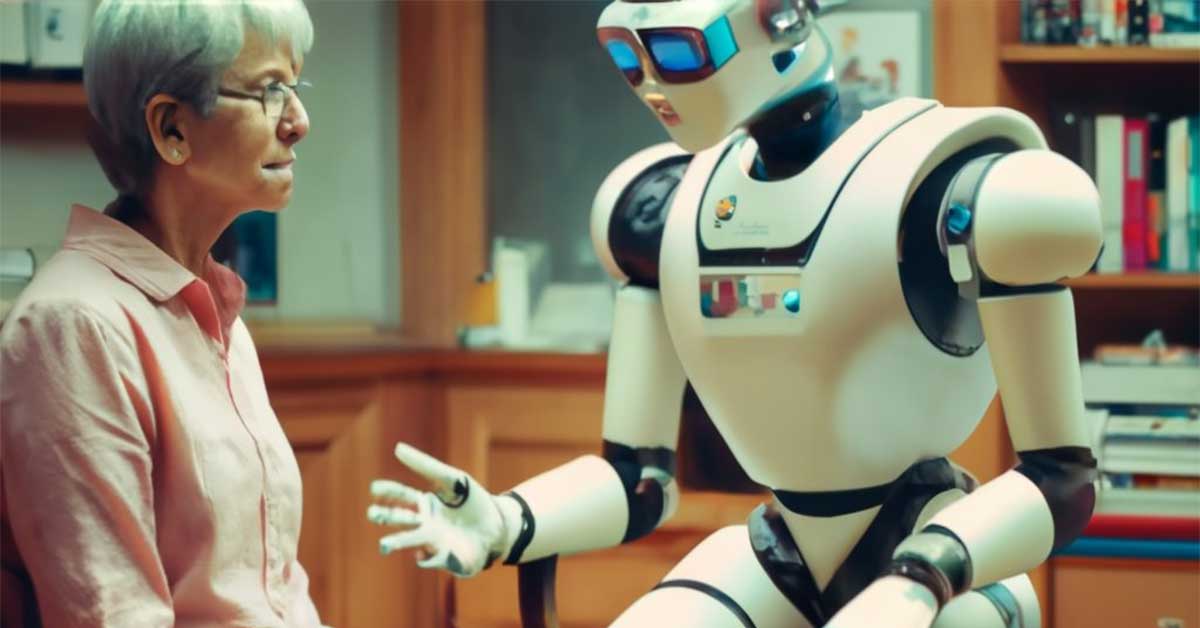

Human-machine interaction is now part of everyday life. Sustaining it requires analyzing large amounts of data, and machines fueled by artificial intelligence can process vast datasets in the blink of an eye.

Why does this matter? For machines to understand human emotions, they have to catch even the smallest changes in people. That means being tuned into the cadence of voice inflections and facial micro-expressions.

Why is this all happening? The goal is simple yet profound: improving interactions. When machines understand our emotions, it's not just about being responsive; it's about being empathetic. It's about tailoring interactions based on how we feel.

Where Emotion AI Connects Machines with Humanity

In Advertising: Affectiva's Subtle Symphony

In advertising, companies like Affectiva are not just selling products but orchestrating emotional experiences. Affectiva plays a crucial role by capturing the subconscious reactions of consumers.

In Call Centers: Cogito's Voice Maestro

Picture this: You call customer service, and the voice on the other end understands your words and mood. That's the magic of Cogito's voice analytics software. Cogito's technology identifies your real-time mood in call centers, where emotions can sway conversations.

In Mental Health: A Digital Companion for Well-Being

Emotion AI isn't just about selling products; it's about enhancing our well-being. Take the CompanionMx app, for instance. In mental health, this app becomes a vigilant companion. It listens to your voice, analyzing not just the words but the very essence of your emotions. It goes beyond using wearable devices to detect signs of anxiety.

In Automotive: Navigating Emotions on the Road

While we focus on safety outside the car, what about the emotions brewing inside? Emotion AI is now finding a home in our vehicles. Picture a car that senses when you're stressed or distracted. That's the vision of researchers like Javier Hernandez at the MIT Media Lab. By monitoring drivers' emotions, vehicles equipped with Emotion AI could adjust, ensuring a safer and more pleasant driving experience.

Assistive Services: Bridging Emotion Gaps for Autism

For some, understanding and expressing emotions can be like navigating an uncharted sea. This is where Emotion AI becomes an "assistive technology." For individuals with autism, wearable monitors become silent companions, decoding subtle cues like elevated pulse rates that others might miss.

What Are The Types of Emotion AI?

We can divide Emotion AI into three main types: text-focused, audio and voice, and video and multimodal. To understand more about how emotional AI works, let me explain these types one by one.

Text-Focused Emotion AI: NLP and Sentiment Analysis

You may have noticed that when you are feeling down, your social media feeds tend to show more emotional content. Have you ever thought about how your favorite social media platform knows if you're ecstatic or feeling a bit down? That's the work of Text Emotion AI.

It dives into the sea of words, using Natural Language Processing (NLP) to understand the sentiment behind the text. Whether it's a tweet, a review, or a message, this tech genius can tell if it's a joyous shout-out or a subtle sigh.

Well, it goes beyond just words and text. Sentiment analysis is the secret behind this. It's like giving the AI a crash course in emotional linguistics.

Is the sentiment positive, negative, or somewhere in between? Text Emotion AI cracks the code, helping businesses understand what people are saying and how they truly feel. Platforms can then show you advertisements or content based on that.
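
To make the positive, negative, or in-between idea concrete, here is a minimal sketch of lexicon-based sentiment scoring in Python. It is only an illustration: the word lists and the function names `sentiment_score` and `classify_sentiment` are hypothetical, and production systems typically rely on far larger lexicons or trained language models rather than a handful of keywords.

```python
import re

# Hypothetical, tiny word lists for illustration only; real systems use
# much larger lexicons or trained models.
POSITIVE_WORDS = {"love", "great", "happy", "excellent", "amazing"}
NEGATIVE_WORDS = {"hate", "terrible", "sad", "awful", "disappointed"}


def sentiment_score(text: str) -> int:
    """Return (number of positive words) minus (number of negative words)."""
    words = re.findall(r"[a-z']+", text.lower())
    positives = sum(word in POSITIVE_WORDS for word in words)
    negatives = sum(word in NEGATIVE_WORDS for word in words)
    return positives - negatives


def classify_sentiment(text: str) -> str:
    """Map the score to 'positive', 'negative', or 'neutral'."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for message in (
        "I love this phone, the camera is amazing!",
        "What a terrible, awful day",
        "The package arrived on Tuesday",
    ):
        print(message, "->", classify_sentiment(message))
```

A keyword count like this misses sarcasm, negation ("not great"), and context, which is part of why real text emotion AI leans on richer NLP models.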

Audio and Voice Emotion AI

Audio and Voice Emotion AI is also interesting. Have you ever wondered how those call center agents seem to understand your mood even before you spill the beans? Well, it's not mind-reading for sure. Yes, you guessed it, it's AI, specifically Audio and Voice Emotion AI.

Companies like Behavioral Signals and Cogito have created technology that listens not just to your words but to the tone of your voice: the pitch, pauses, and energy are all part of the symphony. This type of emotion AI digs into the honest signals beyond words, deciphering your intentions, goals, and emotions in a simple phone conversation.
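
As a rough illustration of what "pitch, pauses, and energy" can mean in code, the sketch below computes three simple features from a list of audio samples. This is not how Cogito or Behavioral Signals implement their products; the function names, frame size, and silence threshold are assumptions chosen for the example, and the zero-crossing count is only a crude stand-in that tends to rise with pitch for simple tones.

```python
import math


def frame_energies(samples, frame_size):
    """Split samples into non-overlapping frames and return each frame's RMS energy."""
    energies = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        energies.append(math.sqrt(sum(s * s for s in frame) / frame_size))
    return energies


def pause_ratio(energies, silence_threshold):
    """Fraction of frames whose energy is below the (assumed) silence threshold."""
    if not energies:
        return 0.0
    quiet_frames = sum(1 for energy in energies if energy < silence_threshold)
    return quiet_frames / len(energies)


def zero_crossings(samples):
    """Count sign changes between consecutive samples (a crude pitch-related cue)."""
    return sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))


if __name__ == "__main__":
    # Toy signal: four samples of "speech" followed by four samples of silence.
    signal = [0.5, -0.5, 0.5, -0.5, 0.0, 0.0, 0.0, 0.0]
    energies = frame_energies(signal, frame_size=4)
    print("energies:", energies)
    print("pause ratio:", pause_ratio(energies, silence_threshold=0.1))
    print("zero crossings:", zero_crossings(signal[:4]))
```

Features like these would normally feed a trained classifier; on their own they say nothing about emotion.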

However, recalibrating this AI for different languages is not an easy task. Each language has its rhythm and nuances.

Video and Multimodal Emotion AI

Video and multimodal emotion AI is where the technology leaps from words and voices to visual masterpieces of emotions. Facial expression analysis, gait analysis, eye movement tracking, and physiological signals become the building blocks.

Over the past two decades, advancements in camera technology have made video-based emotion analysis more robust and resilient, even in the face of noisy input.
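
One common way to combine several signals is "late fusion": each modality produces its own emotion estimate, and the estimates are merged afterwards. The sketch below shows a weighted average of per-modality probabilities. It is an assumption-laden illustration rather than a description of any particular product; the modality names, emotion labels, weights, and the functions `fuse_modalities` and `top_emotion` are all hypothetical.

```python
def fuse_modalities(predictions, weights):
    """Weighted average of per-modality emotion probabilities (late fusion).

    predictions: {modality: {emotion: probability}}
    weights:     {modality: non-negative weight}; modalities without a
                 weight contribute nothing.
    """
    total_weight = sum(weights[m] for m in predictions if m in weights)
    if total_weight <= 0:
        raise ValueError("at least one modality needs a positive weight")

    fused = {}
    for modality, scores in predictions.items():
        weight = weights.get(modality, 0.0) / total_weight
        for emotion, probability in scores.items():
            fused[emotion] = fused.get(emotion, 0.0) + weight * probability
    return fused


def top_emotion(fused):
    """Return the emotion label with the highest fused score."""
    return max(fused, key=fused.get)


if __name__ == "__main__":
    # Hypothetical outputs from a face model and a voice model.
    predictions = {
        "face": {"happy": 0.8, "stressed": 0.2},
        "voice": {"happy": 0.4, "stressed": 0.6},
    }
    equal = fuse_modalities(predictions, {"face": 1.0, "voice": 1.0})
    print(equal, "->", top_emotion(equal))

    # Trusting the voice model more changes the outcome.
    voice_heavy = fuse_modalities(predictions, {"face": 1.0, "voice": 4.0})
    print(voice_heavy, "->", top_emotion(voice_heavy))
```

The choice of weights matters: in this toy example, trusting the voice model more flips the result from "happy" to "stressed".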

But what are the uses of this type of emotional AI? From decoding group responses in marketing to understanding individual behaviors in healthcare, video and multimodal emotion AI paints a vivid picture of emotions in our tech-driven world.

You may have heard of exam proctoring using AI. AI proctoring uses AI-enabled systems with facial and voice recognition technology to evaluate an examinee's environment, movement, and behavior and flag suspicious activity, just like a human invigilator would.

This type of emotional AI has its challenges and limitations. Data quality dilemmas, synchrony of signals, and technical complexities in the remote work era are a few of them.

The Impact and Ethical Landscape of Emotion AI

Emotion AI essentially hands the work of deciphering emotions over to machines. These systems dig through human emotions, learning to measure and gauge their intricacies and, in a way, trying to understand and respond.

This Artificial Intelligence tool understands not just what you say but how you feel and then tailors its responses accordingly. The concept of personalizing interventions is at the heart of this.

If you have read this far, you might be thinking that this could be a privacy issue, right? The answer is yes: emotion AI raises questions of ethics, privacy, and the potential for harm.

There are more questions you should ask: How do we ensure the ethical use of AI? What about the privacy of our emotional states?

These aren't just hypothetical musings but genuine concerns that demand attention. The power to decipher emotions comes with responsibilities—safeguarding privacy, mitigating potential harm, and upholding ethical standards.

Pros and Cons of Emotion AI

Advantages of Emotion AI

Emotion AI is a goldmine of valuable insights. Imagine marketers, advertisers, designers, engineers, and developers who can decipher what people say and how they feel.

Emotion AI is the unsung hero behind the scenes, quietly streamlining user testing, surveys, and focus groups. No more endless debates; let the data-driven insights guide the way. Designers and developers can now fine-tune their creations based on how users feel, making products functional and emotionally resonant.

Emotional AI ensures user needs and feelings take center stage in crafting products and services. It's not just about what looks good on paper; it's about what feels right for the end-users. In short, it enhances user-centric design.

Disadvantages of Emotion AI

Emotion AI has its own challenges, like the issue of applying technology to high-consequence situations. Emotions are fuzzy, and relying solely on algorithms can be like walking on a tightrope when stakes are high.

Next on the list is the inherent limitation of not providing a complete picture of human emotions. Emotions are intricate; they range between joy and sorrow, anger and contentment, and Emotion AI might not catch every nuance. Human emotions are an intricate symphony; sometimes, the technology might miss a note or two.

And then there are the biases. For all its brilliance, the technology is not immune to biases and privacy concerns. Imagine an AI system unintentionally favoring one emotion over another, or worse, infringing on the sacred grounds of privacy.