In a recent update to its privacy policy, Google made significant changes that have raised concerns about the use of user data for AI model training. This article examines the key aspects of the updated policy and its implications for data collection, AI model development, and user privacy.

We will explore the shift towards using publicly available information to train AI models like Bard and Cloud AI, and the potential consequences of this policy update. We will also discuss the broader impact of data scraping on user privacy and the legal questions surrounding these practices.

Google's privacy policy has been modified several times, and the recent update marks a significant milestone. The policy now explicitly says that Google reserves the right to scrape what users post online to build its AI tools, signifying a broader approach to data collection and usage.

This amendment expands the company's data acquisition beyond its own services to encompass data sourced from the entire public web. Consequently, it raises questions about how much user-generated content Google can access and use for AI development.

The updated privacy policy underscores Google's emphasis on publicly available information to improve its services and develop new products. In the realm of AI, this entails training AI models with data gleaned from various sources across the web.

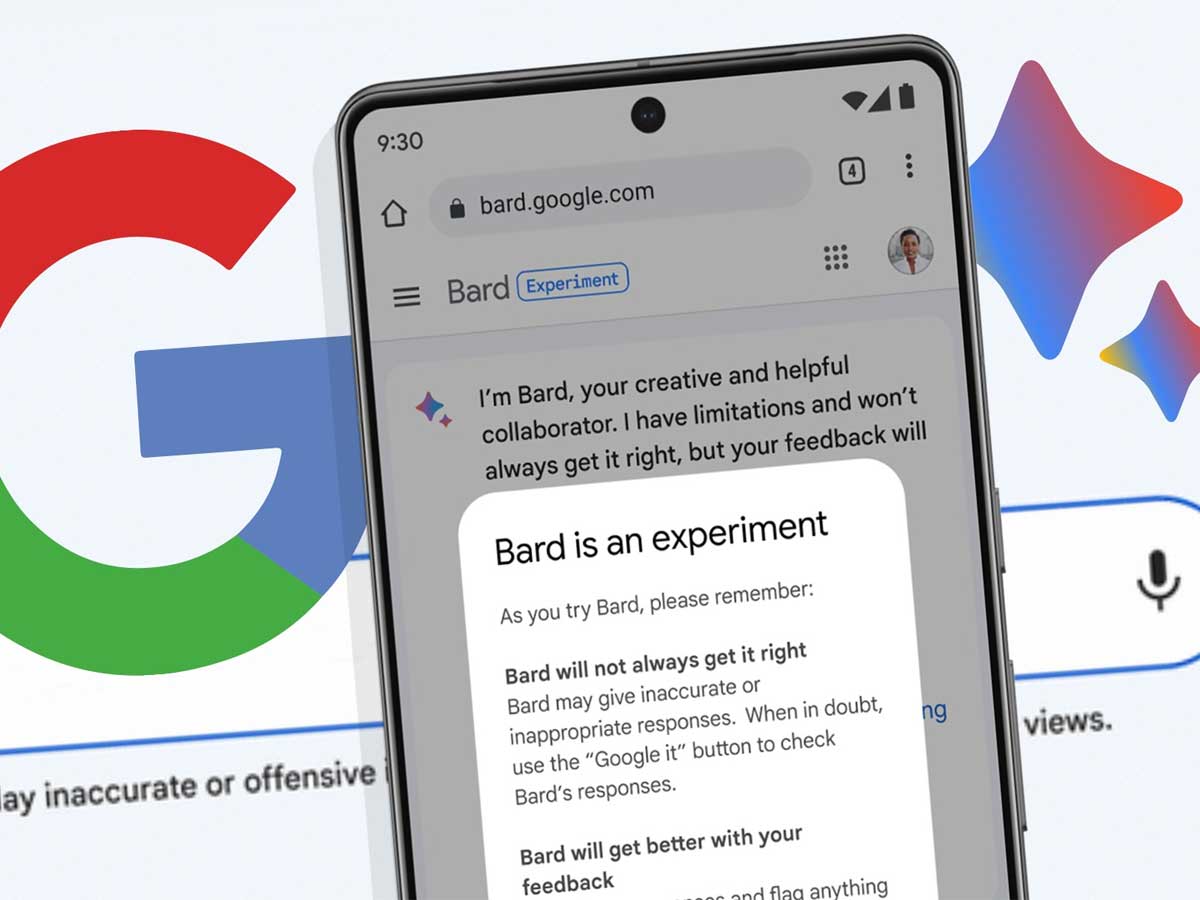

Publicly accessible online information and data obtained from other public sources serve as training material for Google's AI capabilities. This approach enables the company to refine products like Google Translate, Bard, and Cloud AI, and to deliver improved features to users.

While it is commonly understood that public posts are visible to others, the updated policy shifts the question from who can see publicly shared information to how that information may be used. The implications extend beyond mere visibility, as data posted online can be used to train AI models such as Google's Bard or OpenAI's ChatGPT.

This raises privacy concerns, as users' long-forgotten blog posts or reviews from years ago may resurface in unpredictable ways. The opaque nature of AI processing makes it even harder to anticipate how users' words may be repurposed.

With the advent of AI models like ChatGPT, sourcing data has become an intricate matter. Companies such as Google and OpenAI resort to scraping vast portions of the internet to fuel the training of chatbots and other AI applications.

However, the legality of this data-scraping practice remains a subject of debate. In the years to come, courts will likely grapple with copyright questions that were once confined to science fiction. Meanwhile, consumers are already experiencing the unforeseen consequences of data scraping, underscoring the urgency of addressing the legal and ethical dimensions of these practices.

Google's recent privacy policy update shows the company's deepening commitment to AI and reinforces its dedication to using data for AI model training and development. The new privacy policy, in place as of July 1, 2023, reflects these aspirations.

The policy amendment allows Google to use data not only for traditional "business purposes" but also to train its AI models and advance the capabilities of products like Google Translate, Bard, and Cloud AI. This revision aligns with Google's vision of drawing on such data to deliver enhanced services and innovative features.

Scraping data from the web raises concerns that go beyond individual user privacy, extending to the legal and ethical dimensions of data sourcing. As the post-ChatGPT era unfolds, balancing technological advancement with the protection of user privacy becomes crucial, prompting further exploration of legal frameworks and ethical guidelines.

Sources: gizmodo.com / policies.google.com