Twitter, the popular social media giant, faces a significant legal challenge that could mark a turning point in establishing new standards for scrutinizing and combating online antisemitism. This landmark case comes after the platform failed to remove a series of hate-filled tweets reported by users.

The tweets in question, which included antisemitic and racist content, were brought to Twitter's attention by HateAid, a German organization advocating for human rights in the digital space, and the European Union of Jewish Students (EUJS). Despite the tweets violating Twitter's moderation policy, the company did not take action to remove them from its platform.

HateAid and EUJS reported six tweets to Twitter in January, flagging them as antisemitic or racist. Four of the tweets explicitly denied the Holocaust, while another called for Black people to be gassed and sent to Mars via SpaceX.

The sixth tweet compared Covid vaccination programs to mass extermination in Nazi death camps. Although all six tweets were reported, Twitter claimed that three did not violate its guidelines and failed to respond to the other reports.

In response to Twitter's inaction, HateAid and EUJS took legal action. Earlier this year, they applied to a Berlin court to have the offensive tweets deleted. They argued that the tweets violated German law and that Twitter had failed to fulfill its contractual obligation to provide a secure and safe environment for its users. The legal action seeks to hold Twitter accountable and establish stronger measures for combating hate speech on the platform.

The surge in antisemitic online content has been a cause for concern, particularly during the Covid-19 pandemic. Studies have repeatedly shown a significant increase in online hate, with the Anti-Defamation League reporting the highest number of antisemitic incidents in the United States since its records began in 1979.

The Community Security Trust in the UK also recorded the fifth-highest total of such incidents since 1984, while Germany's interior ministry has recorded highs in antisemitic crimes in recent years.

Experts specializing in extremist violence have emphasized the undeniable link between online hate and physical attacks on targeted minority groups. Hateful and conspiratorial online ecosystems play a crucial role in the radicalization of individuals towards antisemitic worldviews.

Furthermore, these platforms contribute to the mass proliferation of narratives that unjustly hold Jews responsible for society's challenges. Online abuse and harassment can easily translate into offline violence.

Accusations of Twitter's failure to address online hate have intensified since Elon Musk took over the company in October. Musk, who describes himself as a "free speech absolutist," made controversial decisions such as restoring the accounts of thousands of users previously banned from the platform.

This included white supremacists with a history of involvement in neo-Nazi propaganda. Additionally, Musk dissolved Twitter's independent Trust and Safety Council, responsible for advising on tackling harmful activity, and significantly reduced the content moderation staff, leading to resignations within the company.

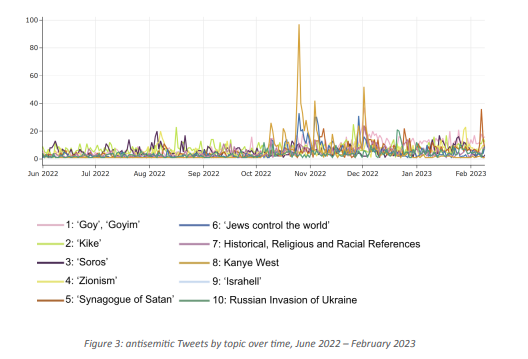

Research by the Institute for Strategic Dialogue (ISD) indicates a substantial increase in antisemitic posts on Twitter following Elon Musk's acquisition. The volume of English-language antisemitic tweets more than doubled in the nine months between June 2022 and February 2023.

The creation of antisemitic accounts also tripled during the same period. These alarming statistics point to a worsening situation on the platform.

Elon Musk further added fuel to the fire with his controversial remarks. In May, he described Jewish philanthropist George Soros, a frequent target of online abuse, as wanting to "erode the very fabric of civilization." This statement perpetuated historical antisemitic tropes.

The legal action initiated by HateAid and EUJS aims to make Twitter more responsible for the content on its platform. They argue that freedom of expression does not merely mean the absence of censorship but also requires Twitter to ensure a safe space for users, free from attacks, death threats, and Holocaust denial.

Unfortunately, Twitter has not met these expectations, leaving the platform neither secure nor safe, particularly for Jewish users.

Twitter did not promptly remove reported tweets, allowing accounts such as @Royston1983 to continue spreading antisemitic content. This account posted at least 20 more tweets containing antisemitic material, including Holocaust denial and conspiracy theories about Jewish control of various institutions.

Other accounts, such as @Abdulla74515475 and @Cologne1312, also remained active despite posting antisemitic tweets that went unaddressed by Twitter.

Twitter's moderation efforts have faced criticism for allowing hate speech to persist on the platform. A report by the UK-based Centre for Countering Digital Hate (CCDH) revealed that Twitter failed to act on 99% of hate posted by subscribers to Twitter Blue, a paid-for service that grants the blue tick previously reserved for verified accounts of public interest.

The platform's inconsistent enforcement of its policies enabled blue tick subscribers to break moderation guidelines without consequences. Racist, homophobic, neo-Nazi, antisemitic, and conspiracy content remained unchecked.

Social media platforms like Twitter have become breeding grounds for antisemitism, racism, and xenophobia, affecting the safety of marginalized minority communities. Reports from CCDH and the Anti-Defamation League have indicated a significant increase in racist content on Twitter following Musk's takeover. The platform's role in amplifying hate speech cannot be ignored.

Sources: theguardian.com / hateaid.org / isdglobal.org