The Israel Defense Forces (IDF) are integrating artificial intelligence (AI) into their military operations. Amid escalating tensions in the occupied territories and with arch-rival Iran, the IDF has turned to AI to select targets for air strikes and to streamline wartime logistics.

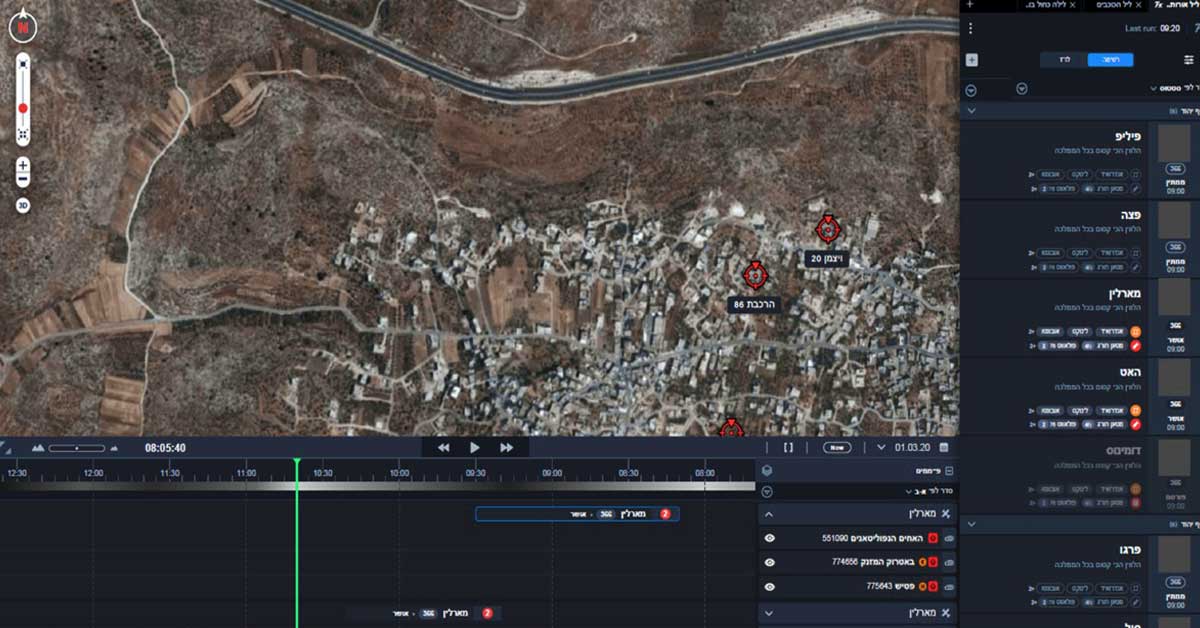

With the adoption of an AI recommendation system, the IDF can now sift through enormous volumes of data to pinpoint targets for air strikes. The AI analyzes various factors and assists military officials in making crucial decisions.

To further expedite the process, the IDF employs an AI model called Fire Factory. This system calculates munition loads, assigns targets to aircraft and drones, and proposes a schedule for subsequent raids. The integration of AI has sped up target selection, making the IDF more agile and responsive in conflict situations.

While AI has proven to be a powerful tool in warfare, it also raises important questions about accountability and regulation. Although human operators oversee and approve the selected targets and air raid plans, the technology itself remains largely unregulated at the international and state levels.

This lack of oversight has sparked concerns, as mistakes made by AI could have dire consequences. The absence of established frameworks for AI in warfare leaves room for potential ethical dilemmas and unintended escalations.

The IDF's operational use of AI remains shrouded in secrecy, with many details classified. However, hints from military officials indicate that AI has been employed effectively in conflict zones such as Gaza, Syria, and Lebanon.

In these regions, the IDF frequently faces rocket attacks, and AI has enabled rapid and precise responses to these threats. Additionally, AI is utilized to target weapons shipments to Iran-backed militias in Syria and Lebanon, showcasing the IDF's growing prowess in AI warfare.

The IDF's ambition extends beyond integrating AI into a few operations: it envisions becoming a global leader in autonomous weaponry. Its AI systems interpret diverse data sources, including drone and CCTV footage, satellite imagery, electronic signals, and online communications.

The Data Science and Artificial Intelligence Center, run by the army's Unit 8200, plays a pivotal role in developing these military AI systems.

As with any groundbreaking technology, AI in the military has its share of critics and challenges. Worries center on the secrecy surrounding AI development, which could allow rapid, unforeseen advances that cross the line into fully autonomous killing machines. The lack of transparency in algorithmic decision-making further fuels concerns about AI's ethical implications.

The integration of AI in warfare raises serious ethical questions. One major concern is the potential for civilian casualties and accidents caused by AI systems. Unlike human soldiers, AI does not possess moral reasoning and may inadvertently target non-combatants.

The accuracy and precision of AI systems, especially when trained on data involving human lives, are also subject to scrutiny.

Proponents of AI integration argue that when used correctly, AI can significantly reduce civilian casualties and improve battlefield effectiveness. By rapidly processing vast amounts of data, AI can aid decision-making in the heat of battle.

However, it is crucial to recognize the inherent risks and complexities associated with AI deployment in warfare.

Israel's leaders envision the country as an AI superpower, a status that comes with considerable influence in the global technological landscape. Although specific details and investments in AI development remain undisclosed, it is evident that Israel is at the forefront of AI innovation in the military domain.

The absence of international frameworks for AI in warfare is a pressing issue. Given the potential consequences of AI-related incidents and civilian casualties, there is a growing need to establish responsible practices and guidelines for the use of AI in the military.

In light of the complexities and uncertainties surrounding AI, some argue that it should be reserved exclusively for defensive purposes. Certain decisions in warfare require human judgment that takes into account values and compliance with international law. Striking a balance between AI's potential benefits and its ethical implications is of paramount importance.

Sources: bloomberg.com / reuters.com / timesofisrael.com