In a quiet town in sunny southern Spain, something unthinkable happened. AI-generated naked images of young local girls started popping up on social media, and the girls themselves had no idea it was happening. Imagine that: fake nude pictures of yourself splashed across the internet without your knowledge. How did this nightmare become a reality?

It all started innocently enough. Ordinary photos of these girls, fully clothed, were taken from their social media profiles. Then they were run through a creepy app that can make anyone look as if they're not wearing any clothes at all. The scandal unfolded in Almendralejo, a town in the southwestern province of Badajoz that is known for its olives and red wine.

Almendralejo Shocked - AI Nudes

As news of this horrifying revelation emerged, parents like María Blanco Rayo, the mother of a 14-year-old girl, were thrust into an ordeal they never saw coming. "One day my daughter came out of school and she said 'Mum there are photos circulating of me topless'," recounts María. The shock soon brought together a support group made up of the parents of 28 affected girls, bonded by their shared anguish.

The Investigation Unveiled the Perpetrators

Police launched a full investigation to unmask the culprits behind this heinous act. At least 11 local boys have been identified as being involved in either creating the images or spreading them via messaging apps such as WhatsApp and Telegram.

To make matters even more sinister, investigators are exploring the claim that an extortion attempt was made using a fake image of one of the victims. The emotional toll on these girls varies, with some unable to even leave their homes due to the trauma inflicted upon them.

Almendralejo's Unwanted Spotlight - AI Fake Nudes

Until now, Almendralejo, a town of just over 30,000 people, was known mainly for its peaceful way of life. The unwelcome attention this case has attracted has made it national headline news.

This unexpected prominence is largely due to the relentless efforts of Miriam Al Adib, a gynecologist with a large social media following. She used her platform to put this disturbing issue at the center of Spanish public debate.

Miriam Al Adib's reassurance to the affected girls and their parents was a beacon of hope in these dark times. "It's not your fault," she emphasized, encouraging victims not to hide but to seek justice and support.

The Legal Quagmire - Concerns Amongst Locals

The suspects in this case are, shockingly, aged between 12 and 14. In Spain, minors can only face criminal charges from the age of 14 upwards, so the youngest suspects cannot be charged at all. Spanish law does not specifically cover the generation of sexual images when it involves adults, although creating such material using images of minors could be deemed child pornography. Another possible charge is breaching privacy laws.

The case has unsettled even those not directly involved. Concerned local residents like Gema Lorenzo and Francisco Javier Guerra are grappling with its implications.

"Those of us who have kids are very worried," says Gema Lorenzo, a mother with both a son and a daughter. Francisco Javier Guerra, a local painter and decorator, points the finger at the parents of the boys involved, suggesting that they could have taken steps to prevent this tragedy.

The Global Deepfake Epidemic & Rising Popularity of Deepfake Apps

This incident is not an isolated one; it reflects a growing global menace. Earlier this year, AI-generated topless images of the singer Rosalía circulated on social media and made headlines.

Women from different parts of the world have fallen victim to this disturbing trend. "Right now this is happening across the world. The only difference is that in Almendralejo we have made a fuss about it," remarks Miriam Al Adib.

Apps like those used in Almendralejo are becoming increasingly commonplace. Javier Izquierdo, the head of children's protection in the national police's cybercrime unit, highlights the alarming accessibility of such technology, even for minors. A staggering 96% of deepfake videos online are non-consensual pornography, primarily targeting women, according to research by independent analyst Genevieve Oh.

Perhaps most concerning is the ease with which these disturbing images can be accessed.

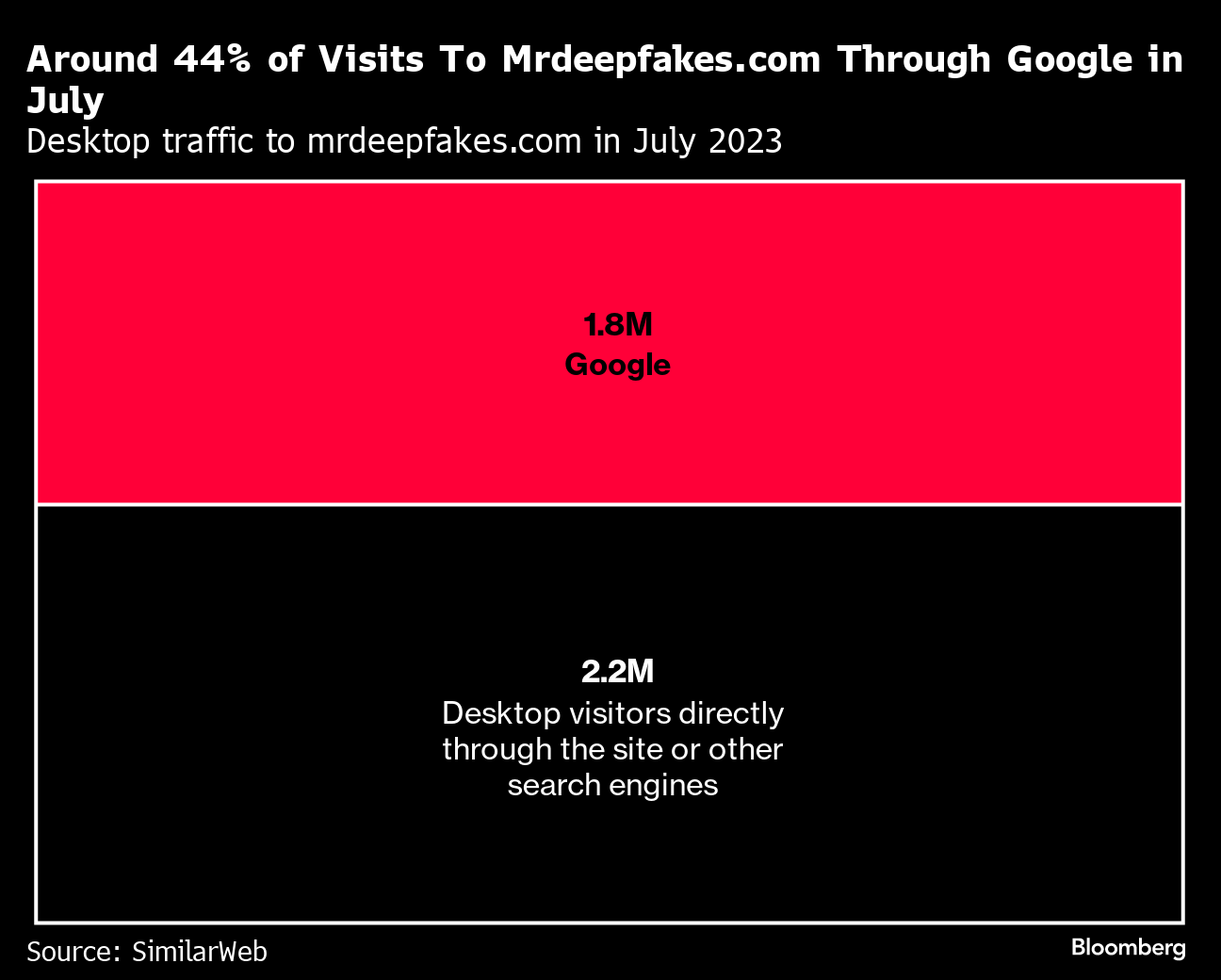

A simple Google search that includes the word "deepfake" can lead anyone to a plethora of deepfake websites.

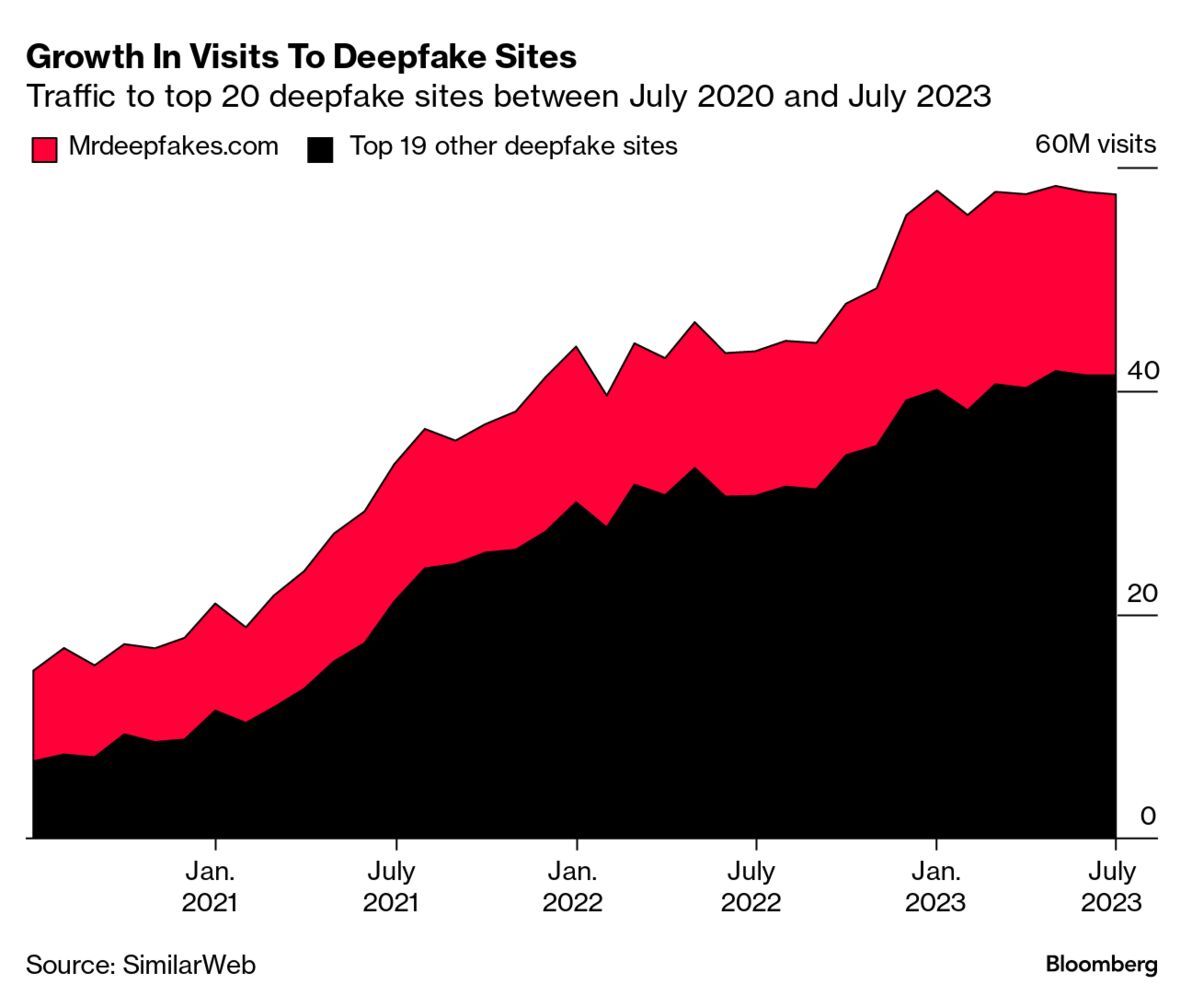

Between July 2020 and July 2023, monthly traffic to the top 20 deepfake sites rose by 285%, with Google as the single largest source of that traffic. Mrdeepfakes.com alone received 25.2 million visits in total.

How to Fight AI-Generated Nude Photos - StopNCII

In 2021, Meta joined forces with 50 NGOs around the world to help the UK Revenge Porn Helpline launch StopNCII.org (short for Stop Non-Consensual Intimate Image Abuse). The website takes on the shady world of non-consensual sharing of intimate images online, offering a lifeline to people worried that their private pictures could be shared.

How Does StopNCII Work?

Well, its key trick is on-device hashing. Your own phone or computer turns each image you select into a hash, a numerical fingerprint of that image, and only this fingerprint, not the image itself, is sent to StopNCII.org.

Tech companies that partner with StopNCII.org use these hashes to detect matching images shared on their platforms. The best part? Your original images stay right on your device, so you remain in control, and partner platforms can block unauthorized distribution of your sensitive content.
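To make the idea of hashing a little more concrete, here is a toy sketch in Python. It is an illustration under stated assumptions, not StopNCII's actual code: it uses a simple "average hash" as a stand-in for the more robust hashing the real service relies on, it represents a photo as a grid of grayscale numbers so no image library is needed, and the function names and the 10-bit matching threshold are hypothetical choices made for this example. What it does show is the overall flow: the fingerprint is computed locally, and a platform only ever compares fingerprints.

```python
# Toy illustration of on-device image hashing. This is NOT StopNCII's
# algorithm: a simple "average hash" stands in for it, and the matching
# threshold below is a hypothetical value chosen for the demo.

def downsample(pixels, size=8):
    """Shrink a grayscale image (a list of rows of 0-255 values) to a
    size x size grid by averaging blocks of pixels. Assumes the image is
    at least size x size pixels."""
    height, width = len(pixels), len(pixels[0])
    grid = []
    for by in range(size):
        y0, y1 = by * height // size, (by + 1) * height // size
        row = []
        for bx in range(size):
            x0, x1 = bx * width // size, (bx + 1) * width // size
            block = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(block) / len(block))
        grid.append(row)
    return grid


def average_hash(pixels, size=8):
    """Return a size*size-bit fingerprint: one bit per block, set to 1
    when that block is brighter than the average block."""
    cells = [v for row in downsample(pixels, size) for v in row]
    mean = sum(cells) / len(cells)
    fingerprint = 0
    for v in cells:
        fingerprint = (fingerprint << 1) | (1 if v > mean else 0)
    return fingerprint


def hamming(a, b):
    """Count the bits that differ between two fingerprints."""
    return bin(a ^ b).count("1")


def is_match(a, b, threshold=10):  # hypothetical threshold
    """Treat two fingerprints as the same picture if they differ in at
    most `threshold` bits."""
    return hamming(a, b) <= threshold


if __name__ == "__main__":
    # Synthetic 32x32 "photos" stand in for real images.
    original = [[x * 4 + y * 2 for x in range(32)] for y in range(32)]
    brightened = [[v + 20 for v in row] for row in original]
    different = [[(31 - x) * 4 + y * 2 for x in range(32)] for y in range(32)]

    # On the user's device: only this hex string would ever be shared.
    local_hash = average_hash(original)
    print("fingerprint:", format(local_hash, "016x"))

    # On a partner platform: compare uploads against the shared fingerprint.
    print("brightened copy matches:", is_match(local_hash, average_hash(brightened)))
    print("different image matches:", is_match(local_hash, average_hash(different)))
```

In this toy, a uniformly brightened copy produces exactly the same fingerprint and is flagged as a match, while a different picture (a mirrored gradient) is not. Real hashing systems are designed to survive far more changes, such as resizing and compression, than this simple example.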

But what if you're under 18? StopNCII.org is designed for adults, but don't fret: the National Center for Missing & Exploited Children (NCMEC) offers a similar service for minors called Take It Down. Tools like these are a real step forward in the battle against the dark side of the internet.