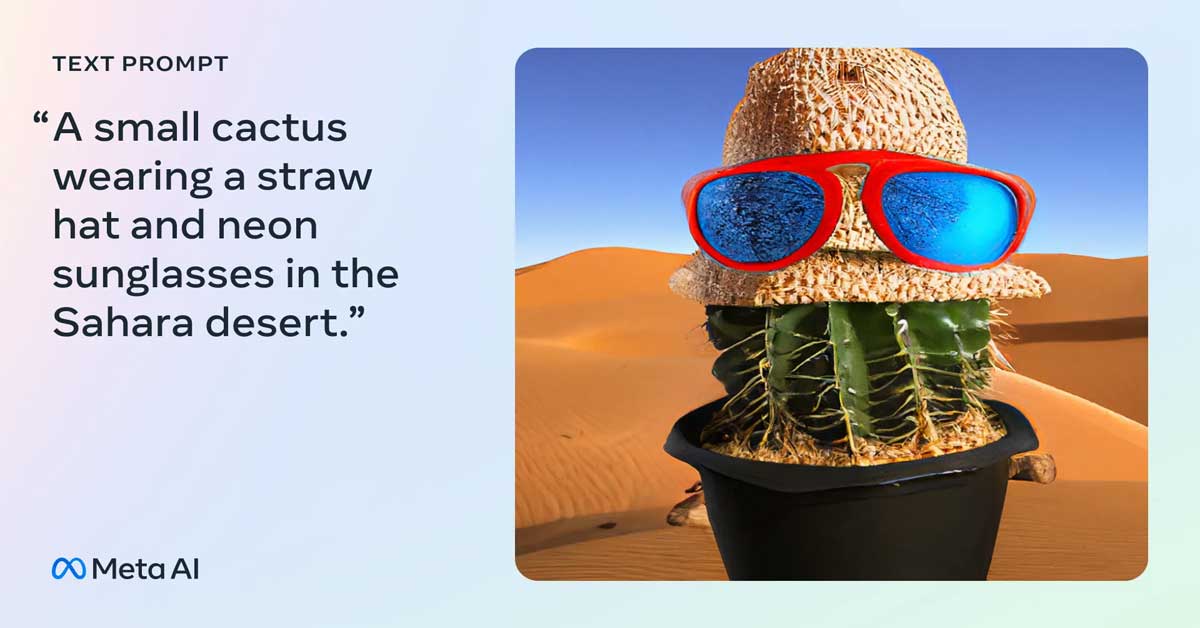

Meta has unveiled its latest generative AI research project: CM3leon. Pronounced like "chameleon," CM3leon is a multimodal foundation model that handles both text-to-image and image-to-text generation.

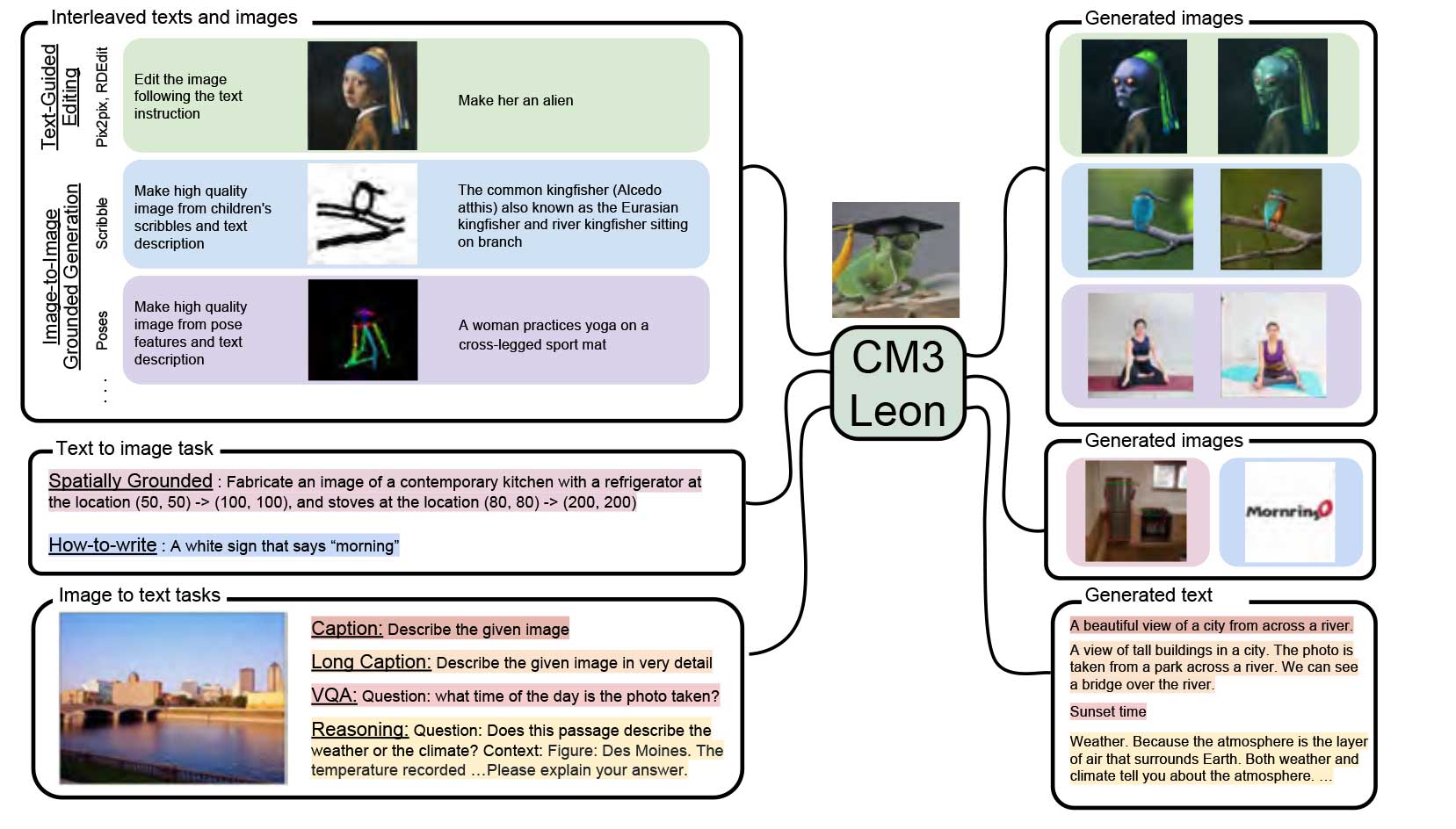

In addition to producing images from text prompts, CM3leon can automatically generate captions for images, which makes it useful for both creative and practical applications.

AI-generated images are not new: tools like Stable Diffusion, DALL-E, and Midjourney are already popular. What Meta says sets CM3leon apart is the way the model is built and the performance it reports.

Image generation has so far been dominated by diffusion models, as the popularity of tools like Stable Diffusion shows. CM3leon takes a different route and uses a token-based autoregressive model: an image is represented as a sequence of discrete tokens, and the model predicts those tokens one at a time, much as a language model predicts words.

According to Meta, this approach tends to produce images with better global coherence. The trade-off is that autoregressive models have typically been more expensive to train and to run at inference time, which is why CM3leon's reported efficiency stands out.
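To make the idea of token-by-token image generation concrete, here is a minimal toy sketch in Python. It is not CM3leon's code: `toy_logits` is a hypothetical stand-in for a trained transformer, and the codebook size and grid size are made-up values chosen only so the example runs instantly. What it illustrates is the loop itself, in which each new image token is sampled conditioned on the prompt and on every token generated so far.

```python
import math
import random

VOCAB_SIZE = 16  # toy image-token codebook size (assumption, not CM3leon's)
GRID = 4         # toy "image" is a GRID x GRID grid of tokens (assumption)


def toy_logits(text_tokens, image_tokens):
    """Hypothetical stand-in for a transformer's next-token scores.

    Scores depend on the prompt and on the previously generated token,
    so the output is conditioned on the prefix like a real model's would be.
    """
    anchor = sum(text_tokens) % VOCAB_SIZE
    prev = image_tokens[-1] if image_tokens else anchor
    return [-abs(t - prev) - 0.5 * abs(t - anchor) for t in range(VOCAB_SIZE)]


def sample(logits, temperature, rng):
    """Sample one token id from softmax(logits / temperature)."""
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(value - peak) for value in scaled]
    return rng.choices(range(VOCAB_SIZE), weights=weights, k=1)[0]


def generate_image_tokens(text_tokens, temperature=1.0, seed=0):
    """Autoregressively generate GRID * GRID image tokens, one at a time."""
    rng = random.Random(seed)
    image_tokens = []
    for _ in range(GRID * GRID):
        logits = toy_logits(text_tokens, image_tokens)
        image_tokens.append(sample(logits, temperature, rng))
    # Reshape the flat sequence into rows, as an image decoder would expect.
    return [image_tokens[r * GRID:(r + 1) * GRID] for r in range(GRID)]


grid = generate_image_tokens([3, 7, 1])
for row in grid:
    print(row)
```

In a real system, the resulting grid of token ids would be passed to a separate image decoder that turns the tokens back into pixels. The sequential loop is also where the inference cost comes from: every token requires another pass through the model.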

Meta's researchers report that CM3leon achieves state-of-the-art results in text-to-image generation while being trained with five times less compute than previous transformer-based methods.

That efficiency matters in practice: lower compute requirements mean lower costs, which makes the autoregressive approach more viable outside the lab.

Ethics is a central concern for any text-to-image model, because of the image data it is trained on. Meta highlights its approach to training data as another point of difference.

To address concerns about image ownership and attribution, Meta says it trained CM3leon only on licensed images from Shutterstock. According to the company, this gives the model a sounder legal and ethical footing without sacrificing performance.

Similar to OpenAI's approach with ChatGPT, CM3leon goes through a supervised fine-tuning (SFT) stage after pre-training. Meta says this stage improves both resource utilization and image quality.

SFT also trains the model to understand complex prompts, which improves CM3leon's performance across a range of generative tasks.

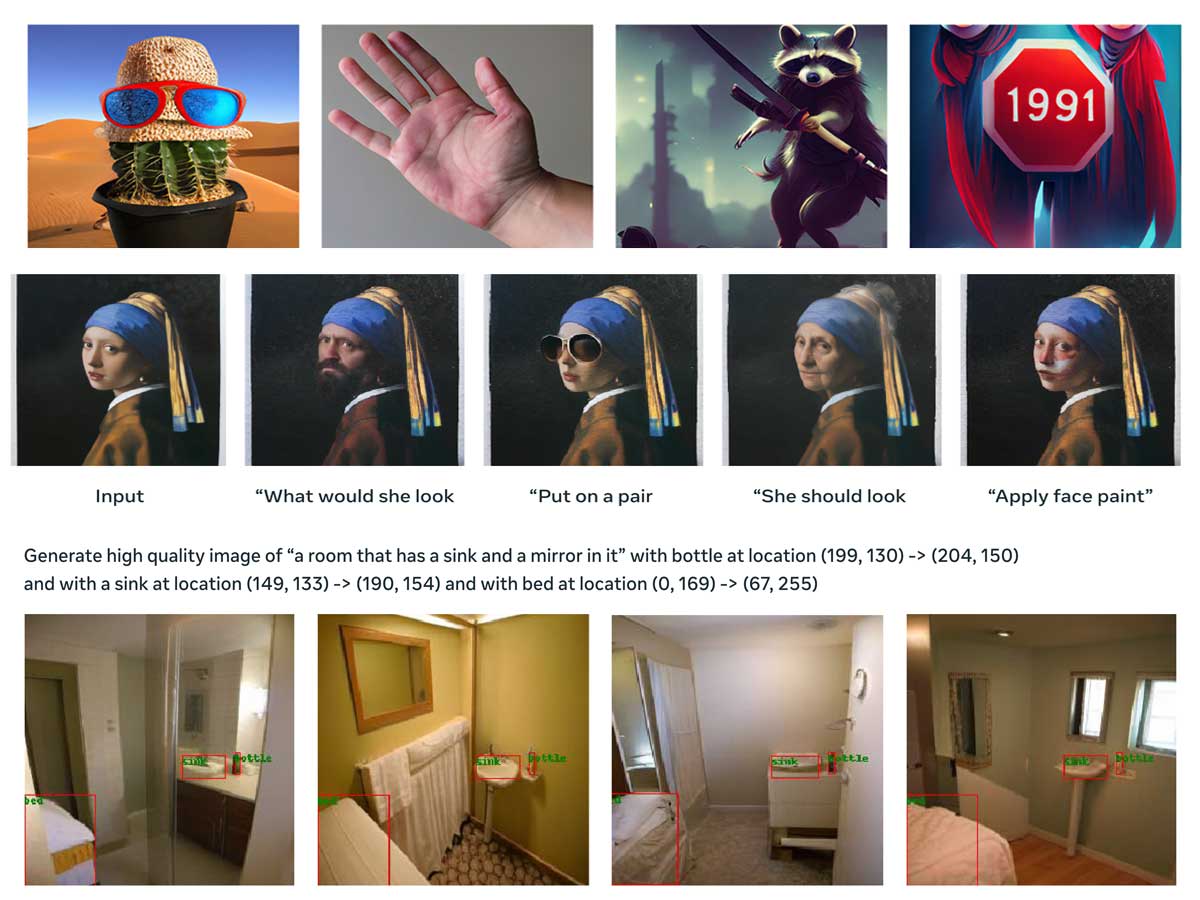

Instruction tuning is key to getting the most out of multimodal models. According to Meta's research paper, it significantly improves CM3leon's performance in image caption generation, visual question answering, text-based editing, and conditional image generation. That range of tasks makes CM3leon a versatile tool.
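One way to picture how a single token-based model could be fine-tuned on several of these tasks is to serialize every training example as one sequence of text and image tokens, and to compute the loss only on the part the model should produce. The Python sketch below illustrates that idea under stated assumptions: the `<img>` and `</img>` delimiters, the `<v...>` image-token names, the instruction wording, and the helper functions are all hypothetical and are not taken from Meta's paper.

```python
from dataclasses import dataclass
from typing import List

BOI, EOI = "<img>", "</img>"  # hypothetical image delimiters (assumption)


@dataclass
class Example:
    tokens: List[str]   # full training sequence: input followed by target
    target_start: int   # index where the target begins (loss applies from here)


def image_span(image_tokens: List[int]) -> List[str]:
    """Wrap discrete image-token ids in hypothetical delimiter tokens."""
    return [BOI] + [f"<v{t}>" for t in image_tokens] + [EOI]


def text_to_image(prompt: str, image_tokens: List[int]) -> Example:
    """Text prompt as input, image tokens as the target."""
    source = prompt.split()
    return Example(source + image_span(image_tokens), len(source))


def captioning(image_tokens: List[int], caption: str) -> Example:
    """Image tokens plus an instruction as input, caption text as the target."""
    source = image_span(image_tokens) + ["Describe", "the", "image:"]
    return Example(source + caption.split(), len(source))


def vqa(image_tokens: List[int], question: str, answer: str) -> Example:
    """Image tokens plus a question as input, answer text as the target."""
    source = image_span(image_tokens) + question.split()
    return Example(source + answer.split(), len(source))


def loss_mask(example: Example) -> List[int]:
    """1 for target positions (trained on), 0 for input positions."""
    return [int(i >= example.target_start) for i in range(len(example.tokens))]


batch = [
    text_to_image("a red fox in snow", [5, 9, 2, 2]),
    captioning([5, 9, 2, 2], "A red fox standing in snow"),
    vqa([5, 9, 2, 2], "What animal is shown?", "A fox"),
]
for ex in batch:
    print(ex.tokens[ex.target_start:])
```

Because every task reduces to "continue this sequence," the same next-token objective can, in principle, cover image generation, captioning, and question answering; only the order of the text and image spans changes.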

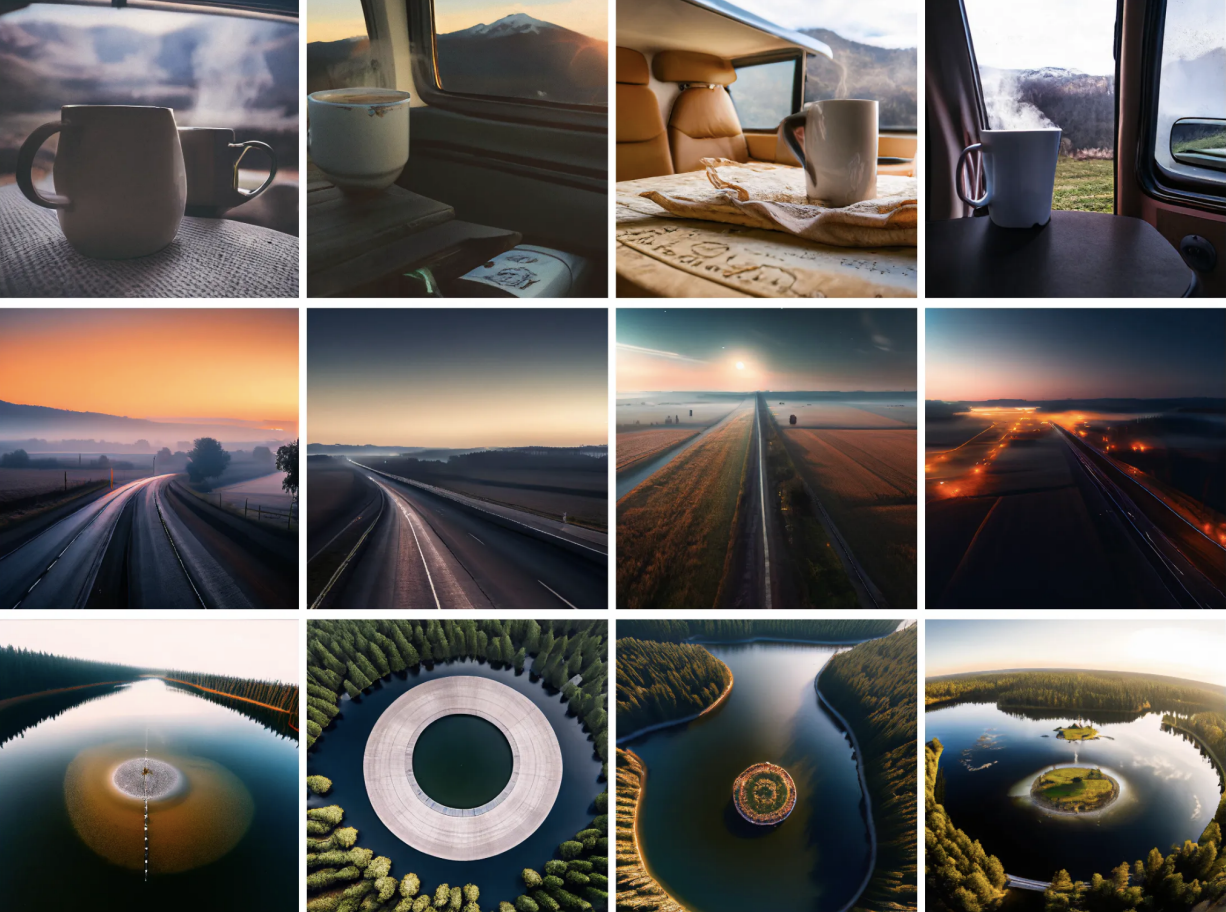

Meta has shared sets of sample images generated by CM3leon. They show the model following complex, multi-stage prompts and producing high-resolution images as a result.

For now, CM3leon remains a research effort, and it is unclear whether or when it will become publicly available on Meta's platforms. Given its reported efficiency and results, though, the approach seems likely to find its way beyond research.

If it does, it could affect a range of fields, from creative content generation to practical applications that depend on moving between text and images.

Sources: ai.meta.com